Amazon SageMaker Clarify

Detect bias in ML models and understand model predictions

Overview

Amazon SageMaker Clarify provides machine learning developers with greater visibility into their training data and models so they can identify and limit bias and explain predictions.

Biases are imbalances in the training data or the prediction behavior of the model across different groups, such as age or income bracket. Biases can result from the data or algorithm used to train your model. For instance, if an ML model is trained primarily on data from middle-aged individuals, it may be less accurate when making predictions involving younger and older people. You can address biases by detecting and measuring them in your data and model. You can also look at the importance of model inputs to explain why models make the predictions they do.

Amazon SageMaker Clarify detects potential bias during data preparation, after model training, and in your deployed model by examining attributes you specify. For instance, you can check for bias related to age in your initial dataset or in your trained model and receive a detailed report that quantifies different types of possible bias. SageMaker Clarify also includes feature importance graphs that help you explain model predictions. It produces reports that you can use to support internal presentations or to identify issues with your model that you can then correct.

Detect bias in your data and model

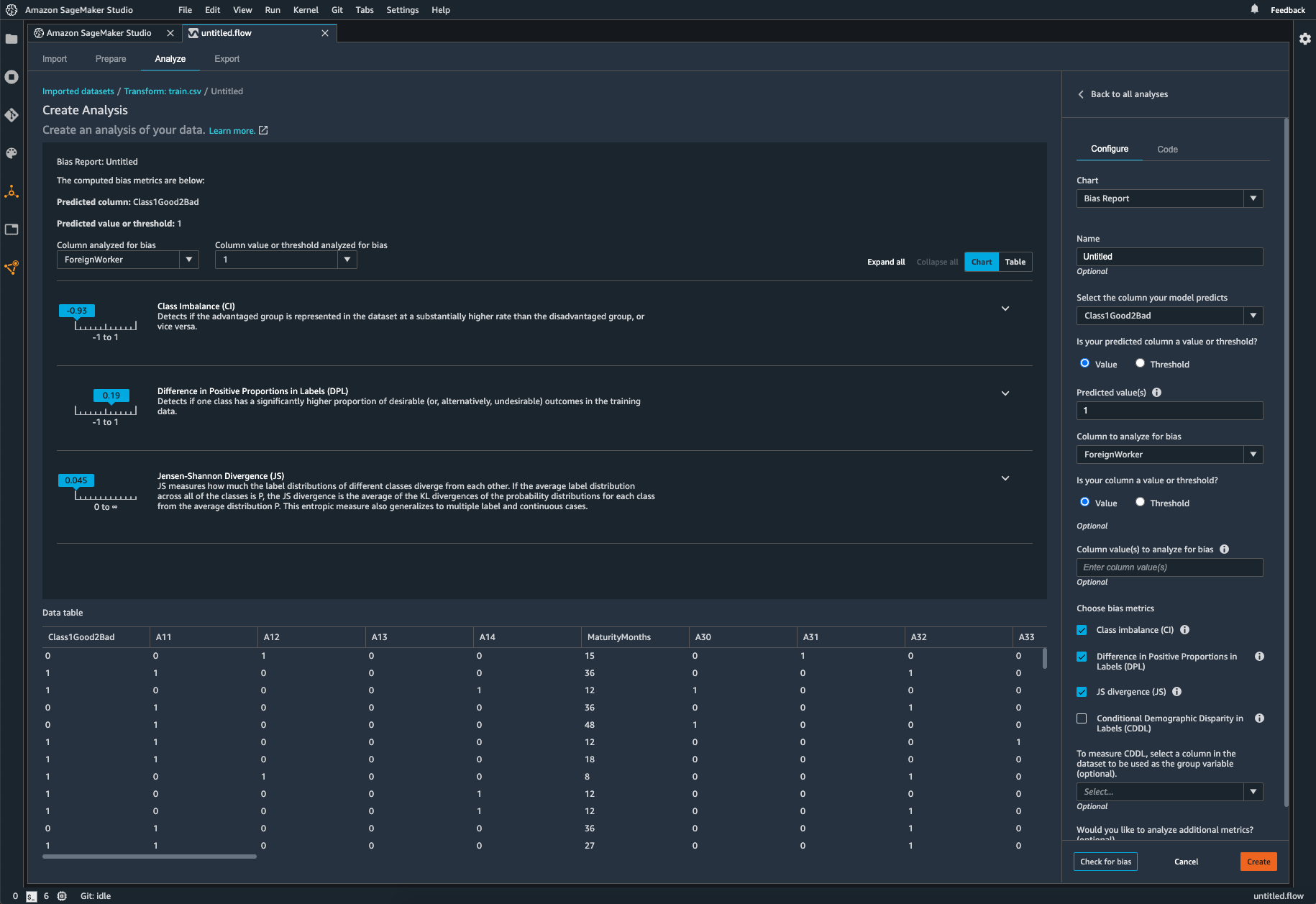

Identify imbalances in data

SageMaker Clarify is integrated with Amazon SageMaker Data Wrangler, making it easier to identify bias during data preparation. You specify attributes of interest, such as gender or age, and SageMaker Clarify runs a set of algorithms to detect any presence of bias in those attributes. After the algorithms run, SageMaker Clarify provides a visual report with a description of the sources and measurements of possible bias so that you can identify steps to remediate the bias. For example, in a financial dataset that contains only a few examples of business loans to one age group as compared to others, SageMaker Clarify flags the imbalance so that you can avoid a model that disfavors that age group.
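To make the idea of measuring an imbalance concrete, the sketch below computes class imbalance (CI), one of the pre-training metrics described in the Clarify documentation, as (n_a - n_d) / (n_a + n_d). It is a plain-Python illustration, not the SageMaker API: the function name `class_imbalance`, the age brackets, and the row counts are all assumptions made up for this example.

```python
def class_imbalance(facet_values, group_of_interest):
    """Return CI = (n_a - n_d) / (n_a + n_d).

    n_d counts rows whose facet equals `group_of_interest`;
    n_a counts all remaining rows. The result lies in [-1, 1];
    values close to +1 mean the group of interest is heavily
    under-represented in the dataset.
    """
    if not facet_values:
        raise ValueError("facet_values must not be empty")
    n_d = sum(1 for value in facet_values if value == group_of_interest)
    n_a = len(facet_values) - n_d
    return (n_a - n_d) / (n_a + n_d)


# Hypothetical business-loan dataset: one age bracket per applicant.
age_brackets = ["25-40"] * 70 + ["40-60"] * 25 + ["60+"] * 5

ci = class_imbalance(age_brackets, "60+")
print(f"Class imbalance for '60+': {ci:.2f}")
```

With only 5 of 100 rows in the "60+" bracket, the value is close to 1, which is the kind of imbalance the report is meant to surface before training.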

Check your trained model for bias

You can also check your trained model for bias, such as predictions that produce a negative result more frequently for one group than they do for another. SageMaker Clarify is integrated with SageMaker Experiments so that after a model has been trained, you can identify attributes you would like to check for bias, such as age. SageMaker Clarify runs a set of algorithms to check the trained model and provides you with a visual report that identifies the different types of bias for each attribute, such as whether older groups receive more positive predictions compared to younger groups.
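The comparison of positive predictions between groups can be sketched as a difference in positive proportions in predicted labels (DPPL), a post-training metric described in the Clarify documentation. The code below is a minimal stand-alone illustration under assumed inputs: the helper names, the group labels, and the ten predictions are hypothetical and do not come from a real model or from the SageMaker SDK.

```python
def positive_rate(predictions, facet, group):
    """Share of positive (1) predictions among rows that belong to `group`."""
    rows = [p for p, g in zip(predictions, facet) if g == group]
    if not rows:
        raise ValueError(f"no rows for group {group!r}")
    return sum(rows) / len(rows)


def dppl(predictions, facet, group_a, group_d):
    """Positive-prediction rate of group_a minus that of group_d.

    A value of 0 means both groups receive positive predictions at the
    same rate; a positive value means group_a is favored.
    """
    return positive_rate(predictions, facet, group_a) - positive_rate(
        predictions, facet, group_d
    )


# Hypothetical predictions (1 = approved) for two age groups.
facet = ["older"] * 5 + ["younger"] * 5
predictions = [1, 1, 1, 1, 0, 1, 0, 0, 1, 0]

gap = dppl(predictions, facet, "older", "younger")
print(f"DPPL (older vs. younger): {gap:.2f}")
```

Here the older group is approved 80% of the time and the younger group 40% of the time, so the gap is 0.40 in favor of the older group.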

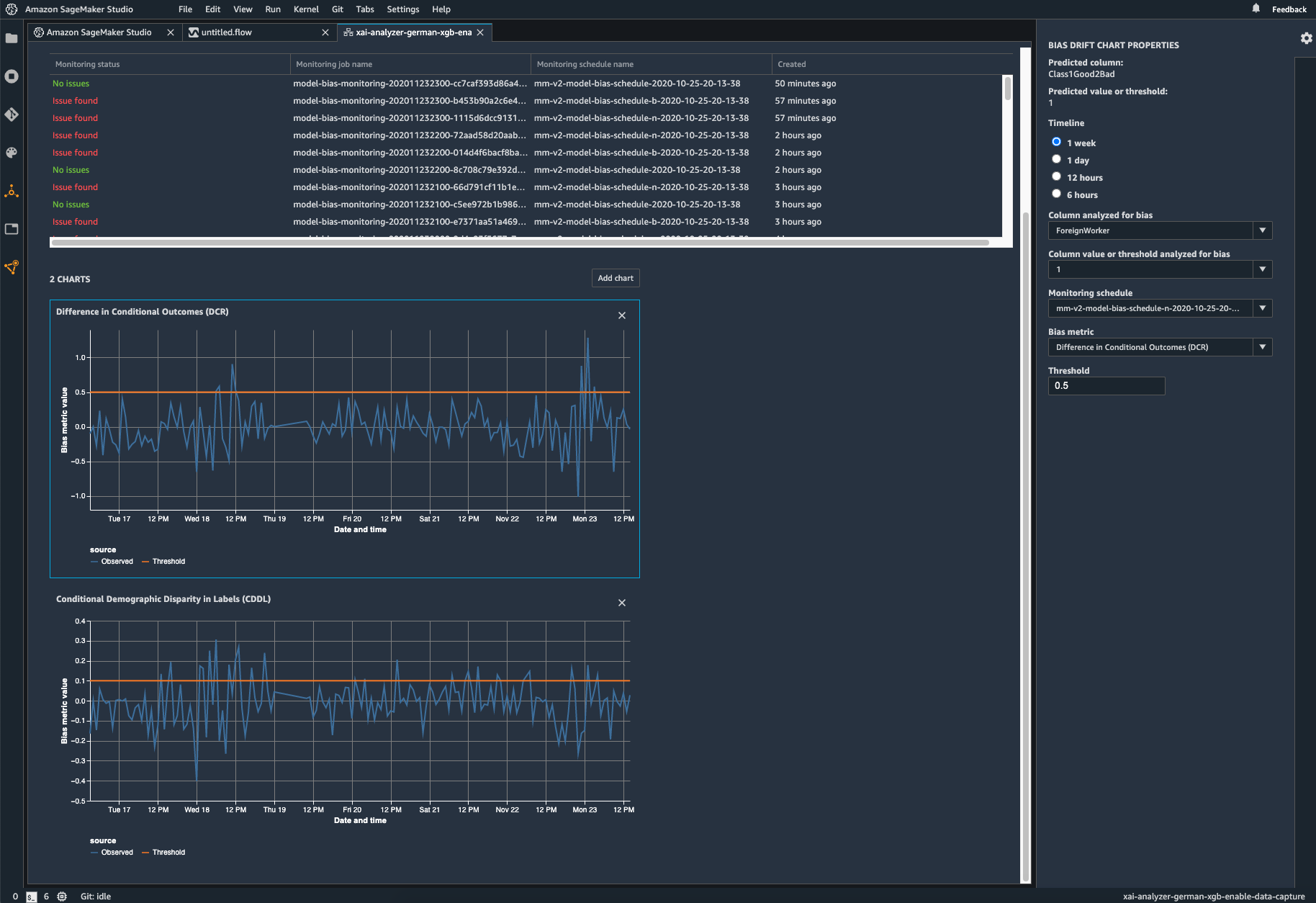

Monitor your model for bias

Although your initial data or model may not have been biased, changes in the world may introduce bias to a model that has already been trained. For example, a substantial change in home buyer demographics could cause a home loan application model to become biased if certain groups were not present or accurately represented in the original training data. SageMaker Clarify is integrated with SageMaker Model Monitor, enabling you to configure alerting systems like Amazon CloudWatch to notify you if your model exceeds certain bias metric thresholds.
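The threshold logic behind such an alert can be illustrated in a few lines. This is only a sketch of the comparison step, assuming bias metrics have already been computed for the latest batch of live data; in the actual service that work is done by Model Monitor and CloudWatch alarms. The class `BiasCheck`, the function `find_violations`, and the metric values and limits are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class BiasCheck:
    metric: str
    value: float
    threshold: float

    @property
    def violated(self) -> bool:
        # Bias metrics such as CI or DPPL can be negative, so the
        # magnitude is compared against the allowed limit.
        return abs(self.value) > self.threshold


def find_violations(latest_metrics, thresholds):
    """Return a BiasCheck for every metric whose magnitude exceeds its limit."""
    checks = [
        BiasCheck(name, latest_metrics[name], limit)
        for name, limit in thresholds.items()
        if name in latest_metrics
    ]
    return [check for check in checks if check.violated]


# Hypothetical limits and the latest values measured on live traffic.
thresholds = {"DPPL": 0.10, "CI": 0.50}
latest_metrics = {"DPPL": 0.18, "CI": 0.20}

violations = find_violations(latest_metrics, thresholds)
for check in violations:
    print(f"ALERT: {check.metric}={check.value:.2f} exceeds {check.threshold:.2f}")
```

In this made-up snapshot only the DPPL value crosses its limit, so a single alert is raised.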

Explain model behavior

Understand your model

Trained models may consider some model inputs more strongly than others when generating predictions. For example, a loan application model may weigh credit history more heavily than other factors. SageMaker Clarify is integrated with SageMaker Experiments to provide a graph detailing which features contributed most to your model’s overall prediction-making process after the model has been trained. These details may be useful for compliance requirements or can help determine if a particular model input has more influence than it should on overall model behavior.

Monitor your model for changes in behavior

Changes in real-world data can cause your model to give different weights to model inputs, changing its behavior over time. For example, a decline in home prices could cause a model to weigh income less heavily when making loan predictions. Amazon SageMaker Clarify is integrated with SageMaker Model Monitor to alert you if the importance of model inputs shifts, causing model behavior to change.

Explain individual model predictions

Customers and internal stakeholders both want transparency into how models make their predictions. SageMaker Clarify integrates with SageMaker Experiments to show you the importance of each model input for a specific prediction. Results can be made available to customer-facing employees so that they have an understanding of the model’s behavior when making decisions based on model predictions.
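Per-prediction importance of this kind is commonly expressed as Shapley values, which is the approach the Clarify documentation describes (via SHAP). The sketch below computes exact Shapley values for one prediction by enumerating every feature subset, which is feasible only for a handful of features; it is not how the service itself is implemented. The toy `loan_score` model, its weights, the instance, and the baseline are assumptions invented for illustration.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, instance, baseline):
    """Exact Shapley values for one prediction.

    Features outside a subset are replaced by their baseline value.
    The cost grows as 2^n, so this suits only a few features.
    """
    n = len(instance)

    def value(subset):
        x = [instance[i] if i in subset else baseline[i] for i in range(n)]
        return predict(x)

    contributions = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                members = set(subset)
                total += weight * (value(members | {i}) - value(members))
        contributions.append(total)
    return contributions


# Hypothetical loan model over (credit_history, income, debt), all scaled to 0..1.
def loan_score(x):
    credit_history, income, debt = x
    return 0.5 * credit_history + 0.3 * income - 0.2 * debt


instance = [0.9, 0.4, 0.7]   # the applicant being explained
baseline = [0.5, 0.5, 0.5]   # an assumed "average" applicant

phi = shapley_values(loan_score, instance, baseline)
for name, contribution in zip(["credit_history", "income", "debt"], phi):
    print(f"{name}: {contribution:+.2f}")
```

The contributions add up to the difference between the applicant's score and the baseline score, so a reviewer can see that credit history raised this score while income and debt lowered it slightly.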

Use Cases

Regulatory Compliance

Regulations such as the Equal Credit Opportunity Act (ECOA) or the Fair Housing Act may require companies to be able to explain financial decisions and take steps around model risk management. Amazon SageMaker Clarify can help flag any potential bias present in the initial data or in the model after training and can also help explain which model features contributed the most to an ML model’s prediction.

Internal Reporting & Compliance

Data science teams are often required to justify or explain ML models to internal stakeholders, such as internal auditors or executives. Amazon SageMaker Clarify can provide data science teams with a graph of feature importance when requested. It can also help quantify potential bias in an ML model or in the data used to train it, providing the additional information needed to support internal requirements.

Customer Service

Customer-facing employees, such as financial advisors or loan officers, may review a prediction made by an ML model in the course of their work. Working with the data science team, these employees can get a visual report via API directly from Amazon SageMaker Clarify with details on which features were most important to a given prediction, so that they can review it before making decisions that may impact customers.