
How to build smart applications using Protocol Buffers with Amazon Web Services IoT Core

Introduction to Protocol Buffers

Protocol Buffers, or Protobuf, provide a platform-neutral approach for serializing structured data. Protobuf is similar to JSON, except that it is smaller and faster, and it can automatically generate bindings in your preferred programming language.

Agility and security in IoT with Protobuf code generation

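A key advantage comes from the ease and security of software development using Protobuf’s code generator. You can write a schema to describe messages exchanged between the components of your application. A code generator (such as protoc) then interprets the schema and generates the encoding and decoding functions in your programming language of choice.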

Automated code generation frees developers from writing encoding and decoding functions by hand and ensures compatibility between programming languages. Allied with the new launch of

Other advantages of using Protocol Buffers over JSON with Amazon Web Services IoT Core are:

- Schema and validation: The schema is enforced by both the sender and the receiver, ensuring that proper integration is achieved. Since messages are encoded and decoded by auto-generated code, hand-written serialization bugs are eliminated.

- Adaptability: The schema can evolve, so you can change message content while maintaining backward and forward compatibility.

- Bandwidth optimization: For the same content, Protobuf messages are smaller, since field names are not sent, only compact field tags and the data itself. Over time this provides better device autonomy and less bandwidth usage. A recent study on Messaging Protocols and Serialization Formats found that a Protobuf formatted message can be up to 10 times smaller than its equivalent JSON formatted message, which means fewer bytes go over the wire to transmit the same content.

- Efficient decoding: Decoding Protobuf messages is more efficient than decoding JSON, which means recipient functions run in less time. A benchmark run by Auth0 found that Protobuf can be up to 6 times more performant than JSON for equivalent message payloads.
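The following sketch puts the bandwidth point in numbers. It uses two figures reported later in this post: 115 bytes of JSON versus 36 bytes of Protobuf for the same Telemetry message. It also uses the sample device's rate of one message every 5 seconds. This is back-of-the-envelope arithmetic for a single device. It counts payload bytes only and ignores MQTT, TLS and TCP overhead.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def daily_payload_bytes(message_bytes: int, interval_s: int) -> int:
    """Payload bytes per day for one device sending one message every interval_s seconds."""
    return (SECONDS_PER_DAY // interval_s) * message_bytes


json_bytes = daily_payload_bytes(115, 5)   # JSON Telemetry example
proto_bytes = daily_payload_bytes(36, 5)   # Protobuf Telemetry example
print(json_bytes, proto_bytes, json_bytes - proto_bytes)  # 1987200 622080 1365120
print(f"{json_bytes / proto_bytes:.1f}x smaller")         # 3.2x smaller
```

At that rate, Protobuf saves about 1.37 MB of payload per device per day for this particular message, a ratio of roughly 3.2 to 1. The "up to 10 times" figure cited above depends on the shape of the message.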

This blog post walks you through deploying a sample application that publishes messages to Amazon Web Services IoT Core in Protobuf format. The messages are then selectively filtered by the

Let’s review some of the basics of Protobuf.

Protocol Buffers in a nutshell

The message schema is a key element of Protobuf. A schema may look like this:

The first line of the schema defines the version of Protocol Buffers you are using. This post will use

The following line indicates that a new message definition called Telemetry will be described.

This message in particular has four distinct fields:

- A msgType field, which is of type MsgType and can only take on the enumerated values "MSGTYPE_NORMAL" or "MSGTYPE_ALERT"

- An instrumentTag field, which is of type string and identifies the measuring instrument sending telemetry data

- A timestamp field of type google.protobuf.Timestamp, which indicates the time of the measurement

- A value field of type double, which contains the measured value

Please consult the

A Telemetry message written in JSON looks like this:

{

"msgType": "MSGTYPE_ALERT",

"instrumentTag": "Temperature-001",

"timestamp": 1676059669,

"value": 72.5

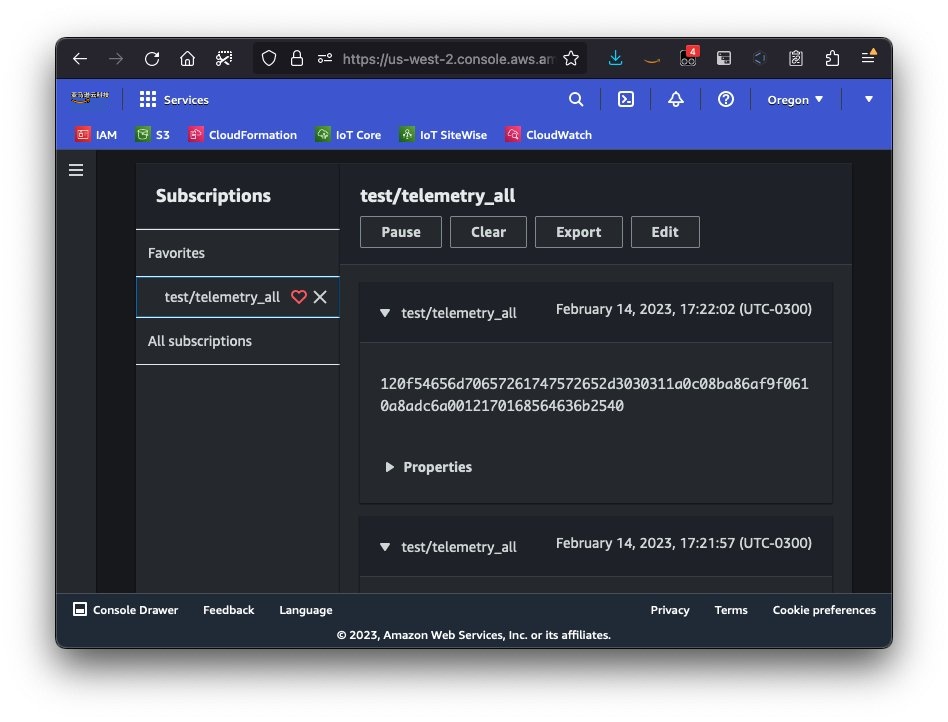

}The same message using protocol Buffers (encoded as base64 for display purposes) looks like this:

0801120F54656D70657261747572652D3030311A060895C89A9F06210000000000205240

Note that the JSON representation of the message is 115 bytes, versus the Protobuf one at only 36 bytes.
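The following sketch shows where the 36 bytes come from. It hand-encodes the Telemetry example using only the Python standard library and compares the result with compact JSON. It is not protoc-generated code. The field numbers (1 to 4) and enum values (MSGTYPE_NORMAL = 0, MSGTYPE_ALERT = 1) are assumptions read off the hex string above; the real values come from msg.proto. For brevity the sketch always writes all four fields. protoc-generated code would instead omit proto3 fields that hold their default value, such as MSGTYPE_NORMAL.

```python
import json
import struct

# Assumed enum values, consistent with the hex string (08 01 -> msgType = 1).
MSG_TYPES = {"MSGTYPE_NORMAL": 0, "MSGTYPE_ALERT": 1}

# Protobuf wire types used by this schema.
VARINT, FIXED64, LEN = 0, 1, 2


def varint(n: int) -> bytes:
    """Encode a non-negative integer as a base-128 varint."""
    out = bytearray()
    while True:
        byte = n & 0x7F
        n >>= 7
        if n:
            out.append(byte | 0x80)  # high bit set: more bytes follow
        else:
            out.append(byte)
            return bytes(out)


def tag(field_number: int, wire_type: int) -> bytes:
    """A field key is (field_number << 3) | wire_type, written as a varint."""
    return varint((field_number << 3) | wire_type)


def encode_telemetry(msg: dict) -> bytes:
    """Hand-encode a Telemetry message using the assumed field numbers 1-4."""
    tag_bytes = msg["instrumentTag"].encode("utf-8")
    # google.protobuf.Timestamp is a nested message whose field 1 is seconds.
    ts = tag(1, VARINT) + varint(msg["timestamp"])
    return (
        tag(1, VARINT) + varint(MSG_TYPES[msg["msgType"]])
        + tag(2, LEN) + varint(len(tag_bytes)) + tag_bytes
        + tag(3, LEN) + varint(len(ts)) + ts
        + tag(4, FIXED64) + struct.pack("<d", msg["value"])  # little-endian double
    )


telemetry = {
    "msgType": "MSGTYPE_ALERT",
    "instrumentTag": "Temperature-001",
    "timestamp": 1676059669,
    "value": 72.5,
}

encoded = encode_telemetry(telemetry)
compact_json = json.dumps(telemetry, separators=(",", ":")).encode("utf-8")
print(encoded.hex().upper())
print(len(encoded), "bytes as Protobuf vs", len(compact_json), "bytes as compact JSON")
```

Running it prints the same hex string shown above and reports 36 bytes. Even with all whitespace removed, the JSON still takes 97 bytes, because every field name travels with every message.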

Once the schema is defined, protoc can be used to:

- Create bindings in your programming language of choice

- Create a FileDescriptorSet, which Amazon Web Services IoT Core uses to decode received messages
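A FileDescriptorSet is itself a Protobuf message, defined in google/protobuf/descriptor.proto. That is why a single .desc file can carry your whole schema to another service. The following standard-library sketch lists the .proto files and top-level message names stored in such a file and skips everything else. It relies on these field numbers from descriptor.proto:

- FileDescriptorSet.file = 1
- FileDescriptorProto.name = 1 and message_type = 4
- DescriptorProto.name = 1

The function names are hypothetical. With the protobuf package installed, you would normally parse the file with descriptor_pb2.FileDescriptorSet instead.

```python
from typing import Dict, Iterator, List, Tuple


def read_varint(buf: bytes, pos: int) -> Tuple[int, int]:
    """Decode a base-128 varint starting at pos; return (value, new_pos)."""
    result, shift = 0, 0
    while True:
        byte = buf[pos]
        pos += 1
        result |= (byte & 0x7F) << shift
        if not byte & 0x80:
            return result, pos
        shift += 7


def iter_fields(buf: bytes) -> Iterator[Tuple[int, int, object]]:
    """Yield (field_number, wire_type, value) for each field in a message."""
    pos = 0
    while pos < len(buf):
        key, pos = read_varint(buf, pos)
        field_number, wire_type = key >> 3, key & 0x07
        if wire_type == 0:      # varint
            value, pos = read_varint(buf, pos)
        elif wire_type == 1:    # 64-bit
            value, pos = buf[pos:pos + 8], pos + 8
        elif wire_type == 2:    # length-delimited: strings, bytes, sub-messages
            length, pos = read_varint(buf, pos)
            value, pos = buf[pos:pos + length], pos + length
        elif wire_type == 5:    # 32-bit
            value, pos = buf[pos:pos + 4], pos + 4
        else:
            raise ValueError(f"unsupported wire type {wire_type}")
        yield field_number, wire_type, value


def summarize_descriptor_set(data: bytes) -> Dict[str, List[str]]:
    """Map each .proto file name in a FileDescriptorSet to its message names."""
    summary: Dict[str, List[str]] = {}
    for number, _, file_bytes in iter_fields(data):
        if number != 1:  # FileDescriptorSet.file
            continue
        name, messages = "", []
        for f_num, _, f_val in iter_fields(file_bytes):
            if f_num == 1:    # FileDescriptorProto.name
                name = f_val.decode("utf-8")
            elif f_num == 4:  # FileDescriptorProto.message_type (a DescriptorProto)
                for d_num, _, d_val in iter_fields(f_val):
                    if d_num == 1:  # DescriptorProto.name
                        messages.append(d_val.decode("utf-8"))
        summary[name] = messages
    return summary


if __name__ == "__main__":
    with open("filedescriptor.desc", "rb") as fh:
        for proto_file, messages in summarize_descriptor_set(fh.read()).items():
            print(proto_file, messages)
```

Run against the filedescriptor.desc generated in Step 3, it should list msg.proto with its Telemetry message. If the descriptor was built with imports included, it should also list the imported files.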

Using Protocol Buffers with Amazon Web Services IoT Core

Protobuf can be used in multiple ways with Amazon Web Services IoT Core. The simplest way is to publish the message as

However, you get the most value when you decode Protobuf messages for filtering and forwarding. Filtered messages can be forwarded as Protobuf, or even decoded to JSON for compatibility with applications that only understand this format.

The recently launched

Prerequisites

To run this sample application you must have the following:

- A computer with protoc installed. Refer to the official installation instructions for your operating system.

- Amazon Web Services CLI (installation instructions)

- An Amazon Web Services account and valid credentials with full permissions on Amazon S3, Amazon Web Services IAM, Amazon Web Services IoT Core and Amazon Web Services CloudFormation

- Python 3.7 or newer

Sample application: Filtering and forwarding Protobuf messages as JSON

To deploy and run the sample application, we will perform 7 simple steps:

- Download the sample code and install Python requirements

- Configure your IOT_ENDPOINT and AWS_REGION environment variables

- Use protoc to generate Python bindings and message descriptors

- Run a simulated device using Python and the Protobuf generated code bindings

- Create Amazon Web Services Resources using Amazon Web Services CloudFormation and upload the Protobuf file descriptor

- Inspect the Amazon Web Services IoT Rule that matches, filters and republishes Protobuf messages as JSON

- Verify transformed messages are being republished

Step 1: Download the sample code and install Python requirements

To run the sample application, you need to download the code and install its dependencies:

- First, download the sample application from our Amazon Web Services GitHub repository: https://github.com/aws-samples/aws-iotcore-protobuf-sample

- If you downloaded it as a ZIP file, extract it

- To install the necessary Python requirements, run the following command within the folder of the extracted sample application

The command above will install two required Python dependencies: boto3 (the Amazon Web Services SDK for Python) and protobuf.

Step 2: Configure your IOT_ENDPOINT and AWS_REGION environment variables

Our simulated IoT device will connect to the Amazon Web Services IoT Core endpoint to send Protobuf formatted messages.

If you are running Linux or Mac, run the following command. Make sure to replace <AWS_REGION> with the Amazon Web Services Region of your choice.
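As an alternative to the shell command, the following sketch resolves the same two values with boto3. It calls the IoT control-plane describe_endpoint API with endpointType set to iot:Data-ATS, which returns the ATS data endpoint that devices in your account connect to. The helper name and the us-east-1 fallback are hypothetical choices. The client is passed in as a parameter so the lookup can be exercised without credentials. Because a Python process cannot set variables in its parent shell, the script prints export lines instead.

```python
import os
from typing import Dict


def resolve_iot_settings(iot_client, region: str) -> Dict[str, str]:
    """Return AWS_REGION and IOT_ENDPOINT values for the simulated device."""
    # iot:Data-ATS selects the Amazon Trust Services data endpoint used for MQTT.
    response = iot_client.describe_endpoint(endpointType="iot:Data-ATS")
    return {"AWS_REGION": region, "IOT_ENDPOINT": response["endpointAddress"]}


if __name__ == "__main__":
    import boto3  # imported here so the helper above has no hard dependency

    region = os.environ.get("AWS_REGION", "us-east-1")  # hypothetical fallback
    settings = resolve_iot_settings(boto3.client("iot", region_name=region), region)
    # A child process cannot change its parent's environment, so print shell exports.
    for name, value in settings.items():
        print(f"export {name}={value}")
```

If the script were saved under a hypothetical name such as resolve_iot_settings.py, running eval "$(python resolve_iot_settings.py)" in a Linux or Mac shell would apply the printed exports.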

Step 3: Use protoc to generate Python bindings and message descriptor

The extracted sample application contains a file named msg.proto, similar to the schema example we presented earlier.

Run the commands below to generate the Python code bindings your simulated device will use, as well as the file descriptor that Amazon Web Services IoT Core will use to decode messages.

After running these commands, you should see two new files in your current folder:

- filedescriptor.desc

- msg_pb2.py
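The same two files can also be produced from Python by invoking protoc through subprocess, which can be handy in a build script. This is a sketch, and the helper name is hypothetical. It uses four standard protoc options: --proto_path, --python_out, --descriptor_set_out and --include_imports. The --include_imports option embeds imported files, such as google/protobuf/timestamp.proto, in the descriptor set so that it is self-contained.

```python
import subprocess
from typing import List


def protoc_commands(proto_file: str = "msg.proto",
                    descriptor: str = "filedescriptor.desc") -> List[List[str]]:
    """Build the two protoc invocations: Python bindings and a descriptor set."""
    return [
        # Generates msg_pb2.py in the current folder.
        ["protoc", "--proto_path=.", "--python_out=.", proto_file],
        # Serializes the schema, plus its imports, into a FileDescriptorSet.
        ["protoc", "--proto_path=.", f"--descriptor_set_out={descriptor}",
         "--include_imports", proto_file],
    ]


if __name__ == "__main__":
    for cmd in protoc_commands():
        print(" ".join(cmd))
        subprocess.run(cmd, check=True)  # raises CalledProcessError if protoc fails
```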

Step 4: Run the simulated device using Python and the Protobuf generated code bindings

The extracted sample application contains a file named simulate_device.py.

To start a simulated device, run the following command:

Verify that messages are being sent to Amazon Web Services IoT Core using the MQTT Test Client on the Amazon Web Services console.

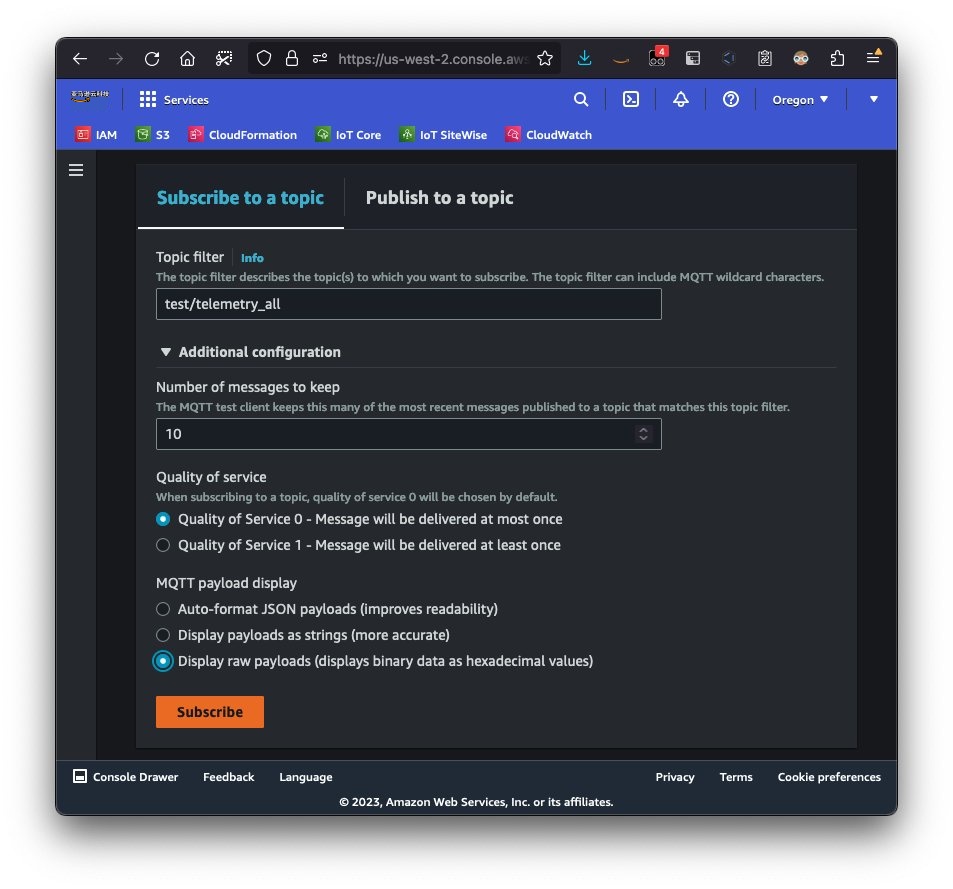

- Access the Amazon Web Services IoT Core service console: https://console.aws.amazon.com/iot; make sure you are in the correct Amazon Web Services Region.

- Under Test, select MQTT test client.

- Under Topic filter, fill in test/telemetry_all

- Expand the Additional configuration section and, under MQTT payload display, select Display raw payloads.

- Click Subscribe and watch as Protobuf formatted messages arrive into the Amazon Web Services IoT Core MQTT broker.
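The following sketch shows the general shape of such a simulated device. It does not reproduce simulate_device.py. It only borrows the behavior described later in this post: roughly 20% alerts, one message every 5 seconds, published to test/telemetry_all. The encode and publish functions are injected so the loop can run without AWS. The script entry point makes several assumptions that you should check against the sample:

- it publishes through boto3's iot-data client
- it uses the generated msg_pb2 module
- the enum values are MSGTYPE_NORMAL = 0 and MSGTYPE_ALERT = 1

```python
import random
import time
from typing import Callable, Dict, Optional

TOPIC = "test/telemetry_all"
ALERT_PROBABILITY = 0.2  # roughly 20% of messages are alerts
INTERVAL_SECONDS = 5.0   # one message every 5 seconds


def make_reading(rng: random.Random) -> Dict[str, object]:
    """Build one hypothetical Telemetry reading as a plain dict."""
    is_alert = rng.random() < ALERT_PROBABILITY
    return {
        "msgType": "MSGTYPE_ALERT" if is_alert else "MSGTYPE_NORMAL",
        "instrumentTag": "Temperature-001",
        "timestamp": int(time.time()),
        "value": round(rng.uniform(20.0, 80.0), 1),  # hypothetical value range
    }


def run_device(
    publish: Callable[[str, bytes], None],
    encode: Callable[[Dict[str, object]], bytes],
    count: int,
    interval: float = INTERVAL_SECONDS,
    sleep: Callable[[float], None] = time.sleep,
    rng: Optional[random.Random] = None,
) -> None:
    """Encode and publish `count` readings, pausing `interval` seconds after each."""
    if rng is None:
        rng = random.Random()
    for _ in range(count):
        publish(TOPIC, encode(make_reading(rng)))
        sleep(interval)


if __name__ == "__main__":
    import os

    import boto3
    import msg_pb2  # generated by protoc in Step 3

    data_client = boto3.client(
        "iot-data",
        region_name=os.environ["AWS_REGION"],
        endpoint_url="https://" + os.environ["IOT_ENDPOINT"],
    )

    def encode(reading: Dict[str, object]) -> bytes:
        msg = msg_pb2.Telemetry()
        # Assumed enum numbering; check msg.proto.
        msg.msgType = 1 if reading["msgType"] == "MSGTYPE_ALERT" else 0
        msg.instrumentTag = reading["instrumentTag"]
        msg.timestamp.FromSeconds(reading["timestamp"])
        msg.value = reading["value"]
        return msg.SerializeToString()

    def publish(topic: str, payload: bytes) -> None:
        data_client.publish(topic=topic, qos=1, payload=payload)

    run_device(publish, encode, count=60)
```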

Step 5: Create Amazon Web Services Resources using Amazon Web Services CloudFormation and upload the Protobuf file descriptor

The extracted sample application contains an Amazon Web Services CloudFormation template named support-infrastructure-template.yaml.

This template defines an Amazon S3 Bucket, an Amazon Web Services IAM Role and an Amazon Web Services IoT Rule.

Run the following command to deploy the CloudFormation template to your Amazon Web Services account. Make sure to replace <YOUR_BUCKET_NAME> and <AWS_REGION> with a unique name for your S3 Bucket and the Amazon Web Services Region of your choice.

Amazon Web Services IoT Core’s support for Protobuf formatted messages requires the file descriptor we generated with protoc. To make it available, we will upload it to the S3 bucket we just created. Run the following command to upload the file descriptor. Make sure to replace <YOUR_BUCKET_NAME> with the same name you chose when deploying the CloudFormation template.

aws s3 cp filedescriptor.desc s3://<YOUR_BUCKET_NAME>/msg/filedescriptor.desc
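The bucket name and object key appear in two places: in this upload, and again inside every decode() call of the rule examined in Step 6. The following sketch uploads the descriptor with boto3's S3 upload_file method and builds the matching rule SQL from the same two values, so they cannot drift apart. The function names are hypothetical. The SQL template simply mirrors the statement this post shows for ProtobufAlertRule.

```python
DESCRIPTOR_PATH = "filedescriptor.desc"
DESCRIPTOR_KEY = "msg/filedescriptor.desc"


def upload_descriptor(s3_client, bucket: str, path: str = DESCRIPTOR_PATH,
                      key: str = DESCRIPTOR_KEY) -> str:
    """Upload the FileDescriptorSet and return its S3 URI."""
    s3_client.upload_file(path, bucket, key)
    return f"s3://{bucket}/{key}"


def alert_rule_sql(bucket: str, key: str = DESCRIPTOR_KEY) -> str:
    """Rule SQL that decodes Telemetry payloads and keeps only alerts."""
    args = ["encode(*, 'base64')", '"proto"', f'"{bucket}"', f'"{key}"', '"msg"', '"Telemetry"']
    decode = f"decode({', '.join(args)})"
    return (
        f"SELECT VALUE {decode} "
        "FROM 'test/telemetry_all' "
        f"WHERE {decode}.msgType = 'MSGTYPE_ALERT'"
    )


if __name__ == "__main__":
    import sys

    import boto3

    bucket_name = sys.argv[1]  # the same <YOUR_BUCKET_NAME> used for CloudFormation
    print(upload_descriptor(boto3.client("s3"), bucket_name))
    print(alert_rule_sql(bucket_name))
```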

Step 6: Inspect the Amazon Web Services IoT Rule that matches, filters, and republishes Protobuf messages as JSON

Let’s assume you want to filter messages that have a msgType of MSGTYPE_ALERT, because these indicate there might be dangerous operating conditions. The CloudFormation template creates an Amazon Web Services IoT Rule that decodes the Protobuf formatted messages our simulated device sends to Amazon Web Services IoT Core. The rule selects the alerts and republishes them in JSON format to another MQTT topic that a responder can subscribe to. To inspect the Amazon Web Services IoT Rule, perform the following steps:

-

Access the Amazon Web Services IoT Core service console:

https://console.aws.amazon.com/iot - On the left-side menu, under Message Routing , click Rules

- The list will contain an Amazon Web Services IoT Rule named ProtobufAlertRule , click to view the details

- Under the SQL statement, note the SQL statement , we will go over the meaning of each element shortly

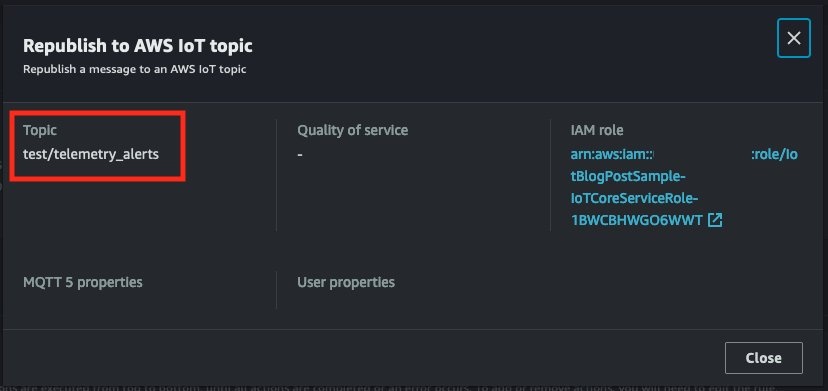

- Under Actions, note the single action to Republish to Amazon Web Services IoT topic

```sql
SELECT VALUE decode(encode(*, 'base64'), "proto", "<YOUR_BUCKET_NAME>", "msg/filedescriptor.desc", "msg", "Telemetry")
FROM 'test/telemetry_all'
WHERE decode(encode(*, 'base64'), "proto", "<YOUR_BUCKET_NAME>", "msg/filedescriptor.desc", "msg", "Telemetry").msgType = 'MSGTYPE_ALERT'
```

This SQL statement does the following:

- The SELECT VALUE decode(...) clause indicates that the entire decoded Protobuf payload will be republished to the destination Amazon Web Services IoT topic as a JSON payload. If you wish to forward the message still in Protobuf format, you can replace this with a simple SELECT *

- The WHERE decode(...).msgType = 'MSGTYPE_ALERT' clause decodes the incoming Protobuf formatted message, and only messages whose msgType field has the value MSGTYPE_ALERT are forwarded
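The following sketch emulates the rule's logic locally with the standard library. It base64-encodes the raw payload (as encode(*, 'base64') does), decodes it as a Telemetry message, applies the WHERE filter, and returns the JSON that would be republished. It only illustrates the logic and says nothing about how the Rules Engine is implemented. It also hard-codes assumptions that the real decode() reads from the descriptor: field numbers 1 to 4 and enum values MSGTYPE_NORMAL = 0, MSGTYPE_ALERT = 1. The sketch renders the timestamp as plain seconds; how the real decode() renders google.protobuf.Timestamp may differ.

```python
import base64
import json
import struct
from typing import Dict, Optional, Tuple

# Assumed enum numbering, consistent with the example message in this post.
MSG_TYPE_NAMES = {0: "MSGTYPE_NORMAL", 1: "MSGTYPE_ALERT"}


def read_varint(buf: bytes, pos: int) -> Tuple[int, int]:
    """Decode a base-128 varint at pos; return (value, new_pos)."""
    result, shift = 0, 0
    while True:
        byte = buf[pos]
        pos += 1
        result |= (byte & 0x7F) << shift
        if not byte & 0x80:
            return result, pos
        shift += 7


def decode_telemetry(buf: bytes) -> Dict[str, object]:
    """Decode a Telemetry payload; absent fields keep their proto3 defaults."""
    msg: Dict[str, object] = {"msgType": "MSGTYPE_NORMAL", "instrumentTag": "",
                              "timestamp": 0, "value": 0.0}
    pos = 0
    while pos < len(buf):
        key, pos = read_varint(buf, pos)
        field, wire_type = key >> 3, key & 0x07
        if wire_type == 0:                      # varint
            number, pos = read_varint(buf, pos)
            if field == 1:
                msg["msgType"] = MSG_TYPE_NAMES.get(number, number)
        elif wire_type == 1:                    # 64-bit
            chunk, pos = buf[pos:pos + 8], pos + 8
            if field == 4:
                msg["value"] = struct.unpack("<d", chunk)[0]
        elif wire_type == 2:                    # length-delimited
            length, pos = read_varint(buf, pos)
            chunk, pos = buf[pos:pos + length], pos + length
            if field == 2:
                msg["instrumentTag"] = chunk.decode("utf-8")
            elif field == 3:                    # nested Timestamp: field 1 = seconds
                ts_pos = 0
                while ts_pos < len(chunk):
                    ts_key, ts_pos = read_varint(chunk, ts_pos)
                    ts_val, ts_pos = read_varint(chunk, ts_pos)  # seconds and nanos are varints
                    if ts_key >> 3 == 1:
                        msg["timestamp"] = ts_val
        elif wire_type == 5:                    # 32-bit, unused by this schema
            pos += 4
        else:
            raise ValueError(f"unsupported wire type {wire_type}")
    return msg


def apply_alert_rule(raw_payload: bytes) -> Optional[str]:
    """Emulate SELECT VALUE decode(...) ... WHERE decode(...).msgType = 'MSGTYPE_ALERT'."""
    encoded = base64.b64encode(raw_payload)            # encode(*, 'base64')
    decoded = decode_telemetry(base64.b64decode(encoded))
    if decoded["msgType"] != "MSGTYPE_ALERT":          # WHERE clause
        return None                                    # message is not republished
    return json.dumps(decoded)                         # SELECT VALUE -> JSON payload
```

Given the 36-byte example from earlier in this post, apply_alert_rule returns a JSON string whose msgType is MSGTYPE_ALERT. If the same bytes are passed with field 1 removed, msgType falls back to MSGTYPE_NORMAL and the function returns None, mirroring a message the rule drops.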

Step 7: Verify transformed messages are being republished

If you click on the single action present in this Amazon Web Services IoT Rule, you will note that it republishes messages to the test/telemetry_alerts topic.

The destination topic test/telemetry_alerts is part of the definition of the Amazon Web Services IoT Rule action, available in the Amazon Web Services CloudFormation template of the sample application.

To subscribe to the topic and see if JSON formatted messages are republished, follow these steps:

- Access the Amazon Web Services IoT Core service console: https://console.aws.amazon.com/iot

- Under Test, select MQTT test client

- Under Topic filter, fill in test/telemetry_alerts

- Expand the Additional configuration section and, under MQTT payload display, make sure the Auto-format JSON payloads option is selected

- Click Subscribe and watch as JSON-converted messages with msgType MSGTYPE_ALERT arrive

If you inspect the code of the simulated device, you will notice that approximately 20% of the simulated messages are of MSGTYPE_ALERT type and that messages are sent every 5 seconds. You may have to wait to see an alert message arrive.
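How long you should expect to wait follows from those two numbers. Assume each message independently has a 20% chance of being an alert (an assumption about how the simulator draws them). The number of messages until the first alert is then geometric with mean 1 / 0.2 = 5, so you wait about 25 seconds on average. The chance of seeing no alert for a full minute (12 messages) is 0.8^12, roughly 7%. The sketch below computes both.

```python
import math


def expected_wait_seconds(p_alert: float, interval_s: float) -> float:
    """Mean time to the first alert: a geometric count of messages (mean 1/p) times the interval."""
    return interval_s / p_alert


def prob_no_alert(p_alert: float, interval_s: float, window_s: float) -> float:
    """Probability that every message sent within window_s is a normal one."""
    messages = math.floor(window_s / interval_s)
    return (1.0 - p_alert) ** messages


print(expected_wait_seconds(0.2, 5.0))            # about 25 seconds
print(round(prob_no_alert(0.2, 5.0, 60.0), 4))    # 0.0687
```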

Clean Up

To clean up after running this sample, run the commands below:
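As an alternative to those commands, the following boto3 sketch performs an equivalent cleanup. It first deletes every object in the bucket, because CloudFormation cannot delete an S3 bucket that still contains objects. It then deletes the stack and waits for the deletion to finish. The function name is hypothetical. The stack name is a placeholder: use whatever name you chose when deploying the template in Step 5. Stop the simulated device first so it is not still publishing.

```python
from typing import List


def cleanup(s3_client, cfn_client, bucket: str, stack_name: str) -> List[str]:
    """Empty the descriptor bucket, then delete the CloudFormation stack."""
    steps = []
    # Remove every object; CloudFormation will not delete a non-empty bucket.
    paginator = s3_client.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket):
        for obj in page.get("Contents", []):
            s3_client.delete_object(Bucket=bucket, Key=obj["Key"])
            steps.append(f"deleted s3://{bucket}/{obj['Key']}")
    cfn_client.delete_stack(StackName=stack_name)
    # Block until the stack's resources (bucket, IAM role, IoT rule) are gone.
    cfn_client.get_waiter("stack_delete_complete").wait(StackName=stack_name)
    steps.append(f"deleted stack {stack_name}")
    return steps


if __name__ == "__main__":
    import sys

    import boto3

    bucket_name, stack = sys.argv[1], sys.argv[2]  # <YOUR_BUCKET_NAME> and your stack name
    for line in cleanup(boto3.client("s3"), boto3.client("cloudformation"), bucket_name, stack):
        print(line)
```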

Conclusion

As shown, working with Protobuf on Amazon Web Services IoT Core is as simple as writing a SQL statement. Protobuf messages provide advantages over JSON both in terms of cost savings (reduced bandwidth usage, greater device autonomy) and ease of development in any of the protoc-supported programming languages.

For additional details on decoding Protobuf formatted messages using Amazon Web Services IoT Core Rules Engine, consult the

The example code can be found in the GitHub repository: https://github.com/aws-samples/aws-iotcore-protobuf-sample

The decode function is particularly useful when forwarding data to Amazon Kinesis Data Firehose. Firehose accepts the decoded JSON directly, so you do not need to write an Amazon Web Services Lambda function to perform the decoding.

For additional details on available service integrations for Amazon Web Services IoT Rule actions, consult the

About the authors

José Gardiazabal

José Gardiazabal is a Prototyping Architect with the Prototyping And Cloud Engineering team at Amazon Web Services where he helps customers realize their full potential by showing the art of the possible on Amazon Web Services. He holds a BEng. degree in Electronics and a Doctoral degree in Computer Science. He has previously worked in the development of medical hardware and software.

Donato Azevedo

Donato Azevedo is a Prototyping Architect with the Prototyping And Cloud Engineering team at Amazon Web Services where he helps customers realize their full potential by showing the art of the possible on Amazon Web Services. He holds a BEng. degree in Control Engineering and has previously worked with Industrial Automation for Oil & Gas and Metals & Mining companies.
