Free Tier

Gain low-cost, high-performance experience with cloud-based products and services

Always free

These free tier offers do not automatically expire.

12 months free

These free tier offers are available for 12 months to new Amazon Web Services customers.

Free Trial

These free tier offers are available within a limited period of time after you activate the services.

Explore our products

Satisfy different technology preferences and business requirements

Explore our solutions

Help enterprises implement efficient operations and business growth.

Automotive

Redefine the value chain of the automotive industry and create new core competencies for automotive enterprises with vehicle-cloud integration. Learn more »

Redefine the value chain of the automotive industry and create new core competencies for automotive enterprises with vehicle-cloud integration. Learn more »

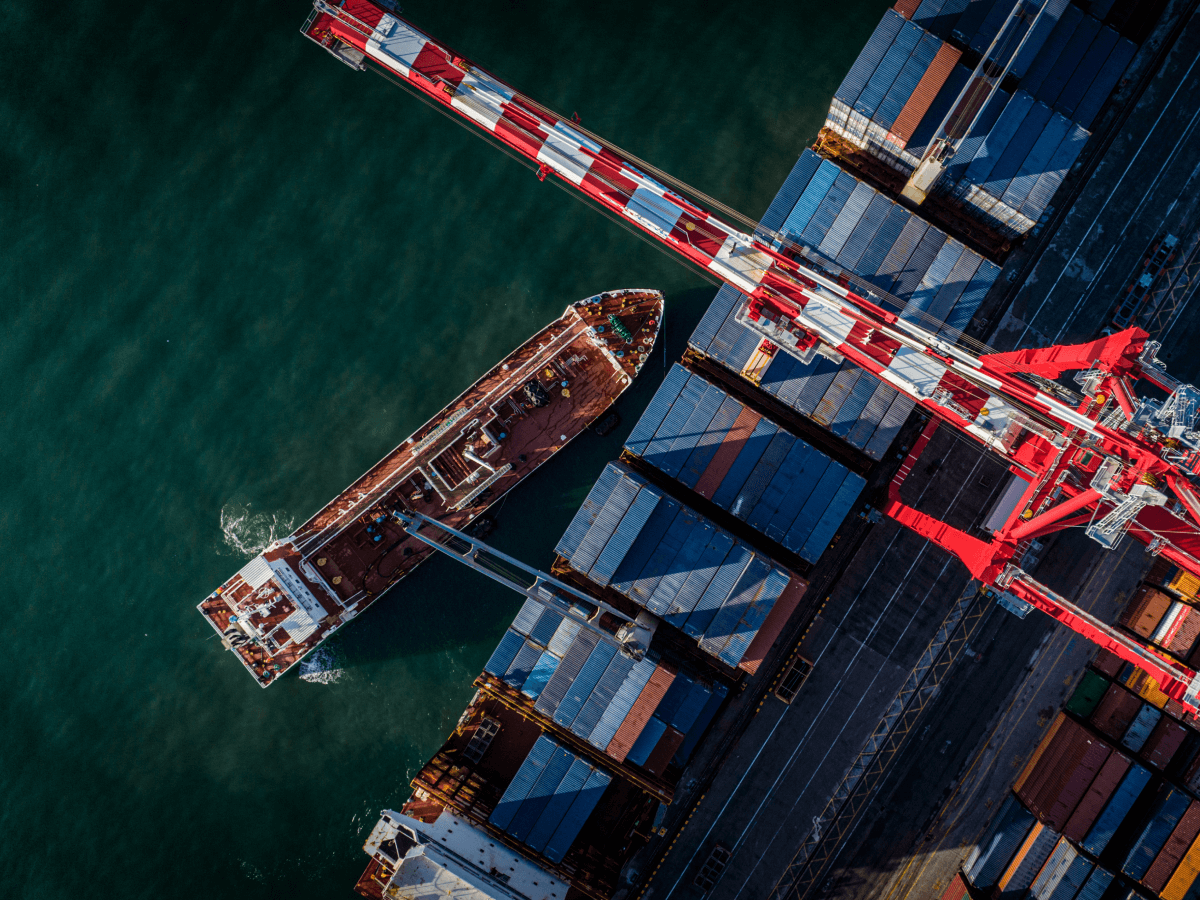

Manufacturing

In the era of Industry 4.0, intelligent solutions enable manufacturing enterprises to unleash new quality productive forces. Learn more »

In the era of Industry 4.0, intelligent solutions enable manufacturing enterprises to unleash new quality productive forces. Learn more »

Retail and FMCG

Revolutionize traditional retail and expedite digital transformation of enterprises. Learn more »

Revolutionize traditional retail and expedite digital transformation of enterprises. Learn more »

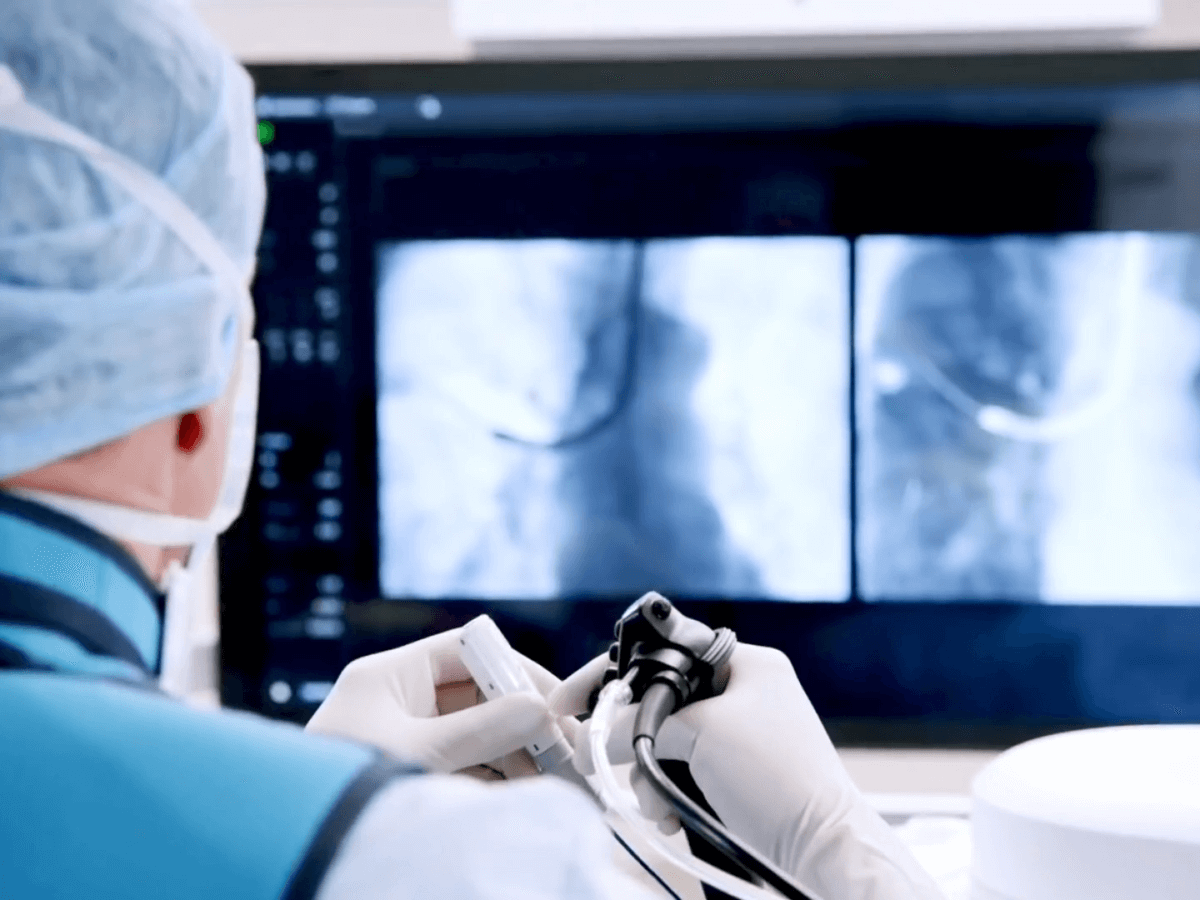

Healthcare and life sciences

Accelerate the lab-to-practice digital innovation process with high reliability, paving the way for a future of smart healthcare. Learn more »

Accelerate the lab-to-practice digital innovation process with high reliability, paving the way for a future of smart healthcare. Learn more »

Games

Provide fully managed solutions to maximize the player lifetime value and accelerate the globalization of games. Learn more »

Provide fully managed solutions to maximize the player lifetime value and accelerate the globalization of games. Learn more »

Media and entertainment

Revolutionize content creation methods by offering six types of media and entertainment application solutions. Learn more »

Revolutionize content creation methods by offering six types of media and entertainment application solutions. Learn more »

Powering customer innovation

Provide replicable and referenceable use cases for customers

Training and certification

Amazon Web Services offers systematic courses and practical demonstrations to help developers learn how to use cloud services and continuously enhance their knowledge.

3rd-party apps to drive growth

Global pioneer of cloud computing

Amazon Web Services has a reputation for its innovation, services, and broad usage.