Set up alerts and orchestrate data quality rules with Amazon Web Services Glue Data Quality

Alerts and notifications play a crucial role in maintaining data quality because they facilitate prompt and efficient responses to any data quality issues that may arise within a dataset. By establishing and configuring alerts and notifications, you can actively monitor data quality and receive timely alerts when data quality issues are identified. This proactive approach helps mitigate the risk of making decisions based on inaccurate information. Furthermore, it allows for necessary actions to be taken, such as rectifying errors in the data source, refining data transformation processes, and updating data quality rules.

We are excited to announce that

This post is Part 4 of a five-post series to explain how to set up alerts and orchestrate data quality rules with Amazon Web Services Glue Data Quality:

- Part 1: Getting started with Amazon Web Services Glue Data Quality from the Amazon Web Services Glue Data Catalog

- Part 2: Getting started with Amazon Web Services Glue Data Quality for ETL Pipelines

- Part 3: Set up data quality rules across multiple datasets using Amazon Web Services Glue Data Quality

- Part 4: Set up alerts and orchestrate data quality rules with Amazon Web Services Glue Data Quality

- Part 5: Visualize data quality score and metrics generated by Amazon Web Services Glue Data Quality

Solution overview

In this post, we provide a comprehensive guide on enabling alerts and notifications using

To expedite the implementation of the solution, we have prepared an

The solution aims to automate data quality evaluation for

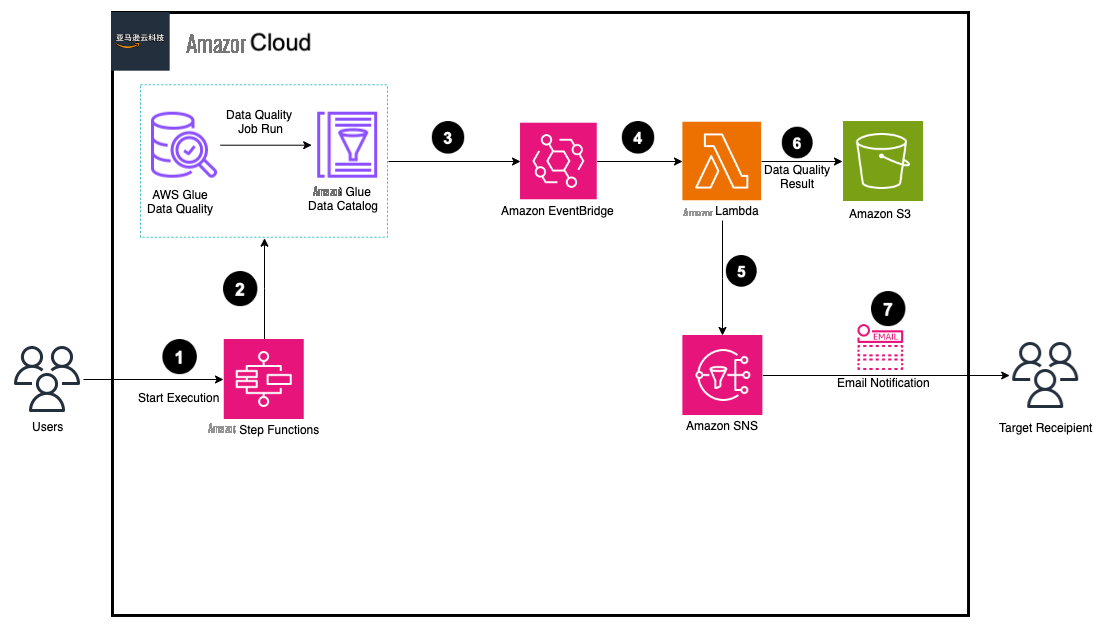

The following architecture diagram provides an overview of the complete pipeline.

The data pipeline consists of the following key steps:

- The first step involves Amazon Web Services Glue Data Quality evaluations that are automated using Step Functions. The workflow is designed to start the evaluations based on the rulesets defined on the dataset (or table). The workflow accepts input parameters provided by the user.

- An EventBridge rule receives an event notification from the Amazon Web Services Glue Data Quality evaluations, including the results. The rule matches the event payload against its predefined event pattern and then triggers a Lambda function for notification.

- The Lambda function sends an SNS notification containing data quality statistics to the designated email address. Additionally, the function writes the customized result to the specified Amazon Simple Storage Service (Amazon S3) bucket, so that it persists and remains accessible for further analysis or processing.

The following sections discuss the setup for these steps in more detail.

Deploy resources with Amazon Web Services CloudFormation

We create several resources with Amazon Web Services CloudFormation, including a Lambda function, EventBridge rule, Step Functions state machine, and

-

To launch the CloudFormation stack, choose

Launch Stack

:

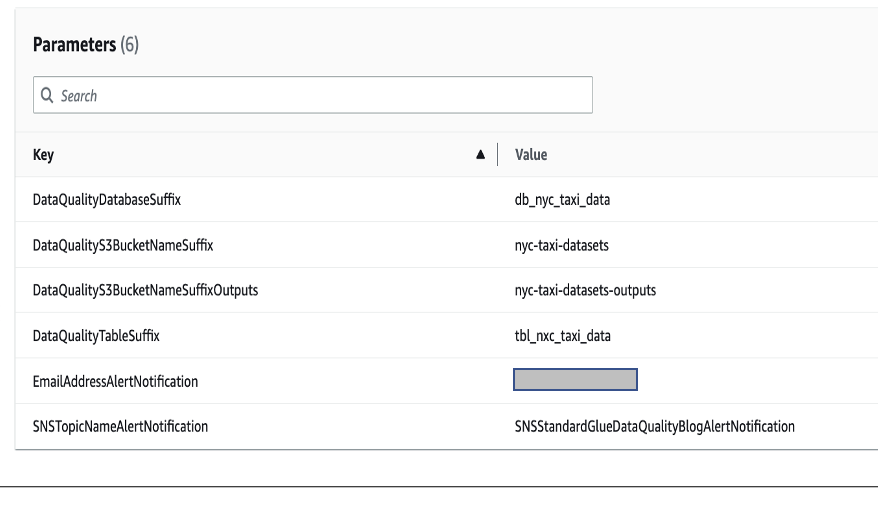

- Provide your email address for EmailAddressAlertNotification , which will be registered as the target recipient for data quality notifications.

-

Leave the other parameters at their default values and create the stack.

The stack takes about 4 minutes to complete.

-

Record the outputs listed on the

Outputs

tab on the Amazon Web Services CloudFormation console.

-

Navigate to the S3 bucket created by the stack (

DataQualityS3BucketNameStaging) and upload the fileyellow_tripdata_2022-01.parquet file. - Check your email for a message with the subject “Amazon Web Services Notification – Subscription Confirmation” and confirm your subscription.
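If you prefer to read the stack outputs programmatically instead of copying them from the Outputs tab, a minimal sketch with boto3 could look like the following. The output keys come from this post; the helper names and the stack name passed to `fetch_stack_outputs` are our own assumptions for illustration.

```python
from typing import Any, Dict


def outputs_to_dict(describe_stacks_response: Dict[str, Any]) -> Dict[str, str]:
    """Flatten the Outputs list of the first stack into {OutputKey: OutputValue}."""
    stacks = describe_stacks_response.get("Stacks", [])
    if not stacks:
        raise ValueError("response contains no stacks")
    return {
        output["OutputKey"]: output["OutputValue"]
        for output in stacks[0].get("Outputs", [])
    }


def fetch_stack_outputs(stack_name: str) -> Dict[str, str]:
    """Call CloudFormation and return the stack outputs as a dictionary.

    stack_name is whatever name you gave the stack at launch (hypothetical here).
    """
    import boto3  # imported lazily so outputs_to_dict works without AWS access

    cfn = boto3.client("cloudformation")
    return outputs_to_dict(cfn.describe_stacks(StackName=stack_name))
```

Calling `fetch_stack_outputs("<your stack name>")` should then give you the values for `SNSTopicNameAlertNotification`, `DataQualityS3BucketNameOutputs`, and `DataQualityS3BucketNameStaging` in one dictionary.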

Now that the CloudFormation stack is complete, let’s update the Lambda function code before running the Amazon Web Services Glue Data Quality pipeline using Step Functions.

Update the Lambda function

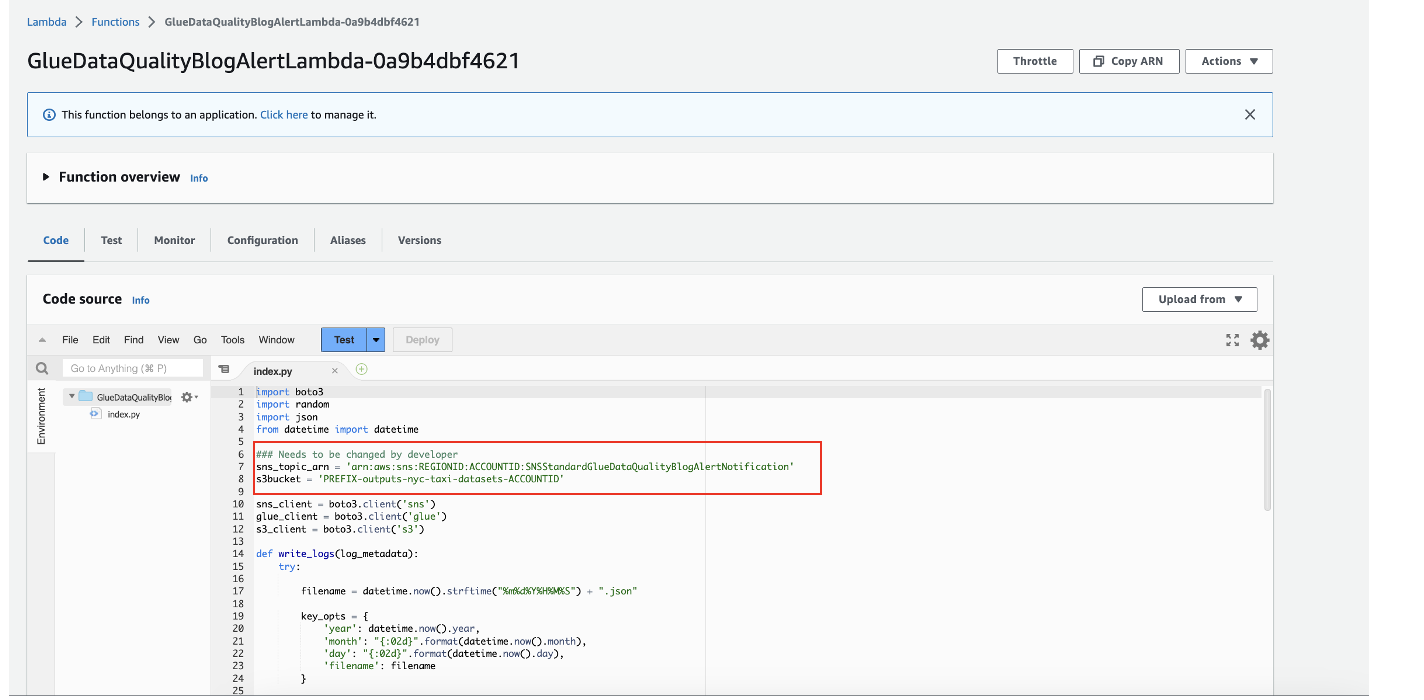

This section explains the steps to update the Lambda function. We modify the ARN of Amazon SNS and the S3 output bucket name based on the resources created by Amazon Web Services CloudFormation.

Complete the following steps:

- On the Lambda console, choose Functions in the navigation pane.

- Choose the function GlueDataQualityBlogAlertLambda-xxxx (created by the CloudFormation template in the previous step).

- Modify the values for sns_topic_arn and s3bucket with the corresponding values from the CloudFormation stack outputs for SNSTopicNameAlertNotification and DataQualityS3BucketNameOutputs, respectively.

- On the File menu, choose Save.

- Choose Deploy.
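To make it clearer where the two values end up, here is a simplified sketch of a notification handler. It is not the code deployed by the CloudFormation template: only the variable names `sns_topic_arn` and `s3bucket` come from this post, while the `deliver` helper, the email subject, and the S3 key layout are assumptions for illustration.

```python
import json
from typing import Any, Dict

# Placeholders: replace with the stack outputs SNSTopicNameAlertNotification
# and DataQualityS3BucketNameOutputs.
sns_topic_arn = "<SNSTopicNameAlertNotification output>"
s3bucket = "<DataQualityS3BucketNameOutputs output>"


def deliver(event: Dict[str, Any], sns_client: Any, s3_client: Any) -> str:
    """Publish the event detail to SNS, persist it to S3, and return the S3 key."""
    detail = event.get("detail", {})
    body = json.dumps(detail, indent=2, default=str)
    # The key layout below is an assumption made for this sketch.
    key = f"dq-results/{event.get('id', 'unknown')}.json"
    sns_client.publish(
        TopicArn=sns_topic_arn,
        Subject="Glue Data Quality result",
        Message=body,
    )
    s3_client.put_object(Bucket=s3bucket, Key=key, Body=body.encode("utf-8"))
    return key


def lambda_handler(event, context):
    import boto3  # bundled with the Lambda Python runtime

    key = deliver(event, boto3.client("sns"), boto3.client("s3"))
    return {"statusCode": 200, "s3_key": key}
```

Passing the clients into `deliver` keeps the logic easy to exercise locally with stand-in objects before you deploy.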

Now that we’ve updated the Lambda function, let’s check the EventBridge rule created by the CloudFormation template.

Review and analyze the EventBridge rule

This section explains the significance of the EventBridge rule and how rules use event patterns to select events and send them to specific targets. We review the rule created by the CloudFormation template, which has its event pattern set to Data Quality Evaluations Results Available and its target configured as a Lambda function.

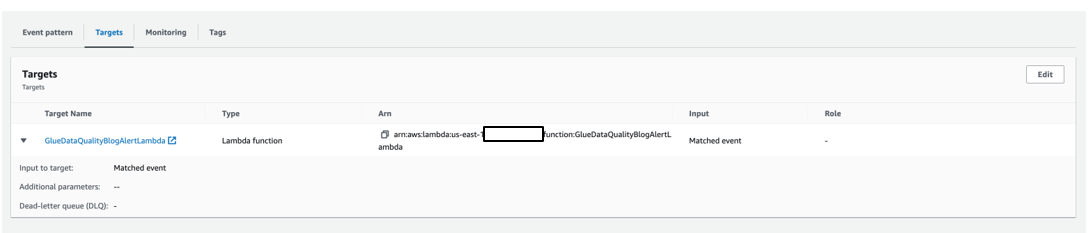

- On the EventBridge console, choose Rules in the navigation pane.

- Choose the rule GlueDataQualityBlogEventBridge-xxxx.

On the Event pattern tab, we can review the source event pattern. Event patterns are based on the structure and content of the events generated by various Amazon Web Services services or custom applications.

- We set the source as aws-glue-dataquality with the event pattern detail type Data Quality Evaluations Results Available.

On the Targets tab, you can review the specific actions or services that will be triggered when an event matches a specified pattern.

- Here, we configure EventBridge to invoke a specific Lambda function when an event matches the defined pattern.

This allows you to run serverless functions in response to events.
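To illustrate how an event pattern selects events, the following sketch implements the simplest case: exact matching on top-level fields, where an event matches only if every field in the pattern lists the event's value. The strings are taken from this post's wording; AWS service event sources are normally written with a dot (`aws.<service>`), so copy the exact values from the rule's Event pattern tab rather than from here. Real EventBridge patterns also support nested fields and operators such as prefix matching, which this sketch leaves out.

```python
from typing import Any, Dict, List

# Values as worded in this post; verify them against the deployed rule.
EVENT_PATTERN: Dict[str, List[str]] = {
    "source": ["aws-glue-dataquality"],
    "detail-type": ["Data Quality Evaluations Results Available"],
}


def matches(pattern: Dict[str, List[str]], event: Dict[str, Any]) -> bool:
    """Return True when every pattern field lists the event's value for that field."""
    return all(event.get(field) in allowed for field, allowed in pattern.items())
```

An event from another source, or one that lacks the detail type, does not match, so the Lambda target is never invoked for it.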

Now that you understand the EventBridge rule, let’s review the Amazon Web Services Glue Data Quality pipeline created by Step Functions.

Set up and deploy the Step Functions state machine

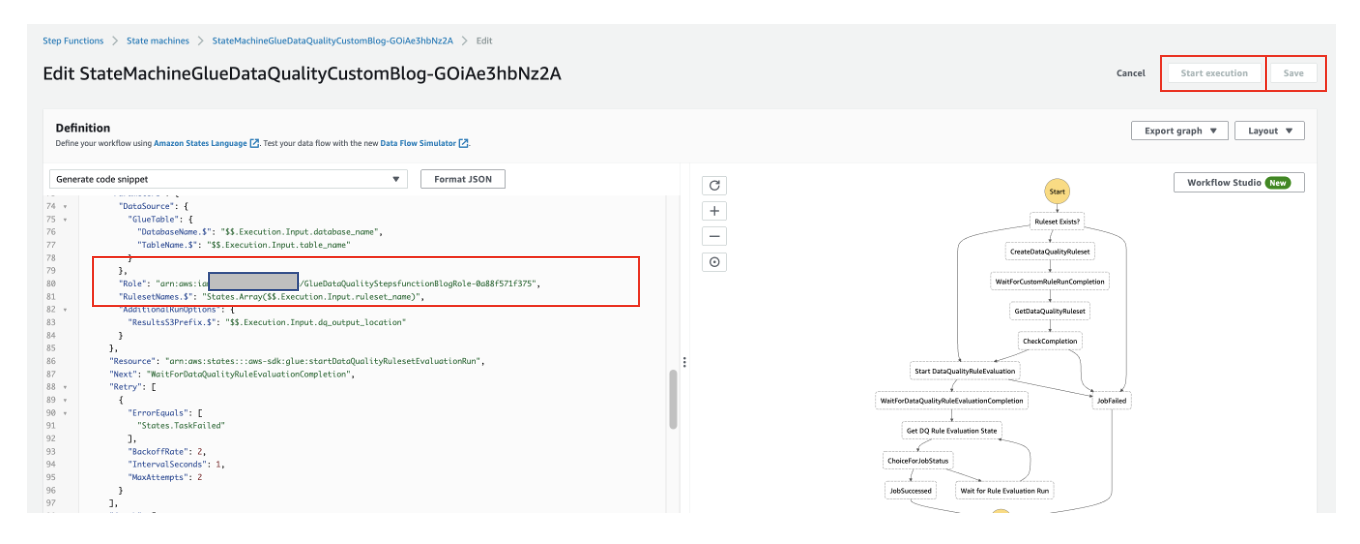

Amazon Web Services CloudFormation created the StateMachineGlueDataQualityCustomBlog-xxxx state machine to orchestrate the evaluation of existing Amazon Web Services Glue Data Quality rules, creation of custom rules if needed, and subsequent evaluation of the ruleset. Complete the following steps to configure and run the state machine:

- On the Step Functions console, choose State machines in the navigation pane.

- Open the state machine StateMachineGlueDataQualityCustomBlog-xxxx.

- Choose Edit.

- Modify row 80 with the IAM role ARN starting with GlueDataQualityBlogStepsFunctionRole-xxxx and choose Save.

Step Functions needs certain permissions (least privilege) to run the state machine and evaluate the Amazon Web Services Glue Data Quality ruleset.

- Choose Start execution.

- Provide the following input:

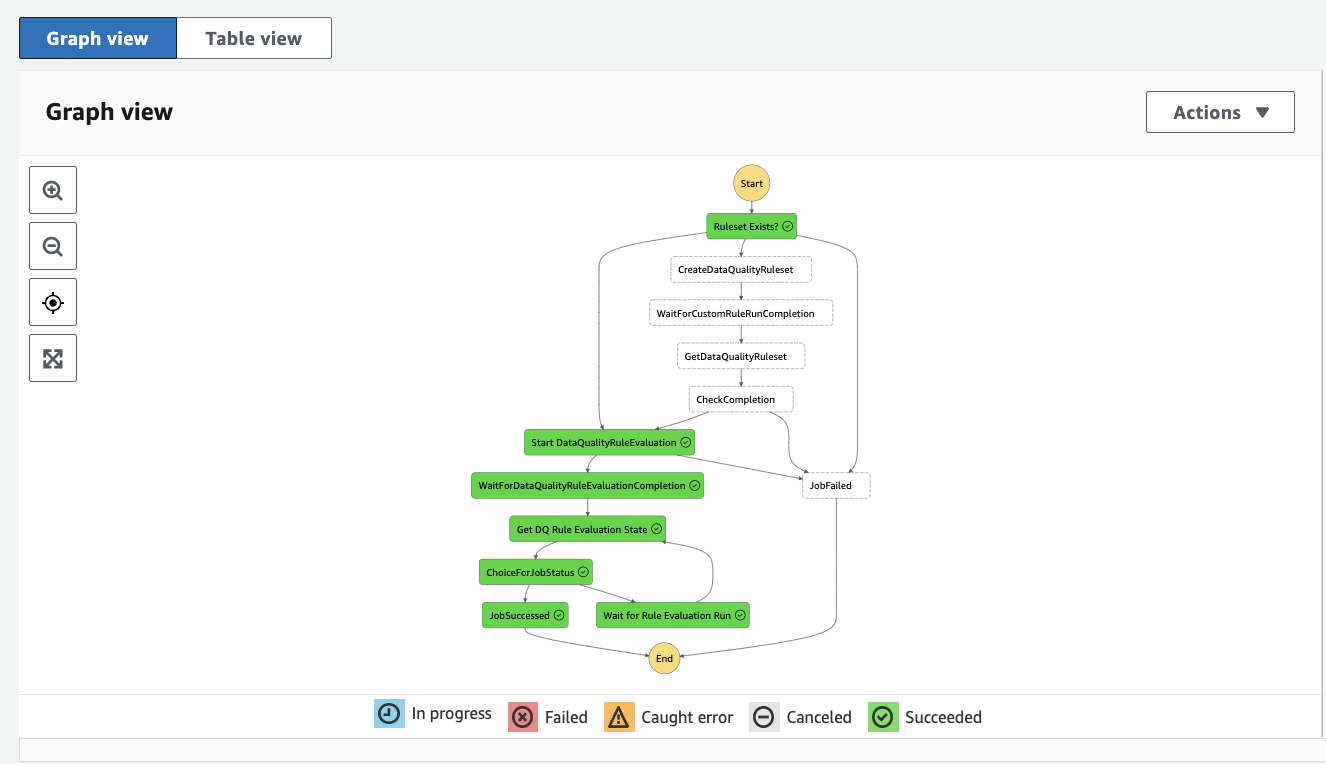

This step assumes the existence of the ruleset and runs the workflow as depicted in the following screenshot. It runs the data quality ruleset evaluation and writes results to the S3 bucket.

If the workflow doesn’t find the ruleset name among the existing data quality rulesets, it creates a custom ruleset for you and then performs the data quality ruleset evaluation. Step Functions creates the custom ruleset. Below is a code snippet from the state machine code.
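For readers who prefer code to a workflow graph, the check-then-create branch can be approximated in Python with boto3. This is a sketch of the logic, not the state machine definition itself: the function name, its parameters, and the fallback rule string are assumptions, and we don't know exactly how the deployed workflow performs the lookup.

```python
from typing import Any, Dict


def ensure_ruleset_and_evaluate(
    glue: Any,
    ruleset_name: str,
    database: str,
    table: str,
    role_arn: str,
    default_rules: str,
) -> Dict[str, Any]:
    """Create the ruleset if it is missing, then start an evaluation run."""
    try:
        glue.get_data_quality_ruleset(Name=ruleset_name)
        created = False
    except glue.exceptions.EntityNotFoundException:
        # Ruleset not found: register a custom one against the target table.
        glue.create_data_quality_ruleset(
            Name=ruleset_name,
            Ruleset=default_rules,  # DQDL text, for example 'Rules = [ RowCount > 0 ]'
            TargetTable={"DatabaseName": database, "TableName": table},
        )
        created = True

    run = glue.start_data_quality_ruleset_evaluation_run(
        DataSource={"GlueTable": {"DatabaseName": database, "TableName": table}},
        Role=role_arn,
        RulesetNames=[ruleset_name],
    )
    return {"created": created, "run_id": run["RunId"]}
```

You would pass `boto3.client("glue")` as the first argument; the role must allow Amazon Web Services Glue to read the table and run the evaluation.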

State machine results and run options

The Step Functions state machine has run Amazon Web Services the Glue Data Quality evaluation. Now EventBridge matches the pattern

Data Quality Evaluations Results Available

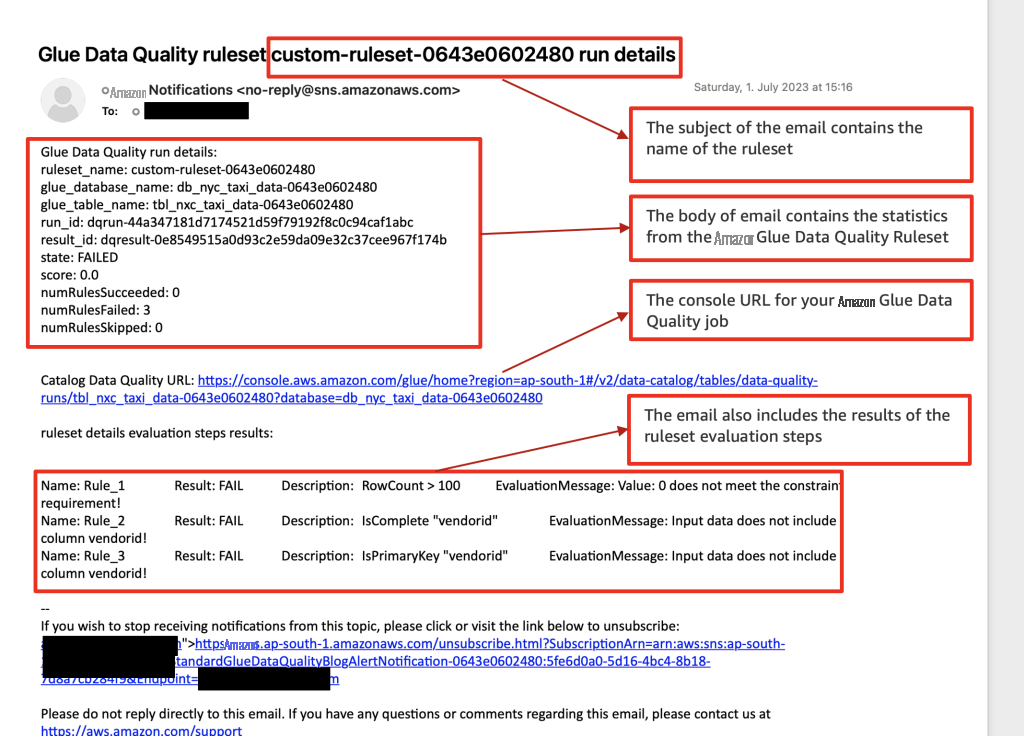

and triggers the Lambda function. The Lambda function writes customized Amazon Web Services Glue Data Quality metrics results to the S3 bucket and sends an email notification via Amazon SNS.

The following sample email provides operational metrics for the Amazon Web Services Glue Data Quality ruleset evaluation. It provides details about the ruleset name, the number of rules passed or failed, and the score. This helps you visualize the results of each rule along with the evaluation message if a rule fails.
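As a rough idea of how such an email body could be assembled, the sketch below formats a plain-text summary. The field names (`rulesetNames`, `score`, `rulesSucceeded`, `rulesFailed`) and the per-rule keys (`Name`, `Result`, `EvaluationMessage`) are assumptions about the shape of the result data; inspect a real event and evaluation result before relying on them. The function name is ours.

```python
from typing import Any, Dict, Iterable


def format_summary(detail: Dict[str, Any], rule_results: Iterable[Dict[str, Any]] = ()) -> str:
    """Build a plain-text summary: ruleset, pass/fail counts, score, per-rule lines."""
    names = ", ".join(detail.get("rulesetNames", [])) or "(unknown)"
    score = detail.get("score")
    score_text = f"{score:.0%}" if isinstance(score, (int, float)) else "n/a"
    lines = [
        f"Ruleset: {names}",
        f"Rules passed: {detail.get('rulesSucceeded', 'n/a')}",
        f"Rules failed: {detail.get('rulesFailed', 'n/a')}",
        f"Score: {score_text}",
    ]
    for rule in rule_results:
        line = f"- {rule.get('Name', '?')}: {rule.get('Result', '?')}"
        # Show the evaluation message only for rules that did not pass.
        if rule.get("Result") != "PASS" and rule.get("EvaluationMessage"):
            line += f" ({rule['EvaluationMessage']})"
        lines.append(line)
    return "\n".join(lines)
```

The returned string can be used directly as the message of the SNS notification.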

You have the flexibility to choose between two run modes for the Step Functions workflow:

- The first option is on-demand mode, where you manually trigger the Step Functions workflow whenever you want to initiate the Amazon Web Services Glue Data Quality evaluation.

- Alternatively, you can schedule the entire Step Functions workflow using EventBridge. With EventBridge, you can define a schedule or specific triggers to automatically initiate the workflow at predetermined intervals or in response to specific events. This automated approach reduces the need for manual intervention and streamlines the data quality evaluation process. For more details, refer to Schedule a Serverless Workflow.
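The two run modes can be sketched as request builders for boto3. The helpers below only assemble the keyword arguments for `start_execution` (Step Functions) and for `put_rule` and `put_targets` (EventBridge); the function names, the target ID, and the rule name are assumptions, and the workflow input is passed through unchanged as whatever dictionary your state machine expects.

```python
import json
from typing import Any, Dict


def on_demand_request(state_machine_arn: str, workflow_input: Dict[str, Any]) -> Dict[str, str]:
    """Keyword arguments for the Step Functions start_execution call."""
    return {"stateMachineArn": state_machine_arn, "input": json.dumps(workflow_input)}


def schedule_requests(
    rule_name: str,
    schedule_expression: str,
    state_machine_arn: str,
    events_role_arn: str,
    workflow_input: Dict[str, Any],
) -> Dict[str, Dict[str, Any]]:
    """Keyword arguments for the EventBridge put_rule and put_targets calls."""
    put_rule = {
        "Name": rule_name,
        "ScheduleExpression": schedule_expression,  # e.g. "rate(1 day)"
        "State": "ENABLED",
    }
    put_targets = {
        "Rule": rule_name,
        "Targets": [
            {
                "Id": "start-dq-workflow",  # arbitrary ID chosen for this sketch
                "Arn": state_machine_arn,
                "RoleArn": events_role_arn,  # role EventBridge assumes to start the run
                "Input": json.dumps(workflow_input),
            }
        ],
    }
    return {"put_rule": put_rule, "put_targets": put_targets}
```

For on-demand mode, you would call `boto3.client("stepfunctions").start_execution(**on_demand_request(arn, payload))`. For scheduled mode, pass the two dictionaries to `put_rule` and `put_targets` on `boto3.client("events")`.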

Clean up

To avoid incurring future charges and to clean up unused roles and policies, delete the resources you created:

- On the Amazon Web Services CloudFormation console, choose Stacks in the navigation pane.

- Select your stack and delete it.

If you’re continuing to

Conclusion

In this post, we discussed three key steps that organizations can take to optimize data quality and reliability on Amazon Web Services:

- Create a CloudFormation template to ensure consistency and reproducibility in deploying Amazon Web Services resources.

- Integrate Amazon Web Services Glue Data Quality ruleset evaluation and Lambda to automatically evaluate data quality and receive event-driven alerts and email notifications via Amazon SNS. This significantly enhances the accuracy and reliability of your data.

- Use Step Functions to orchestrate Amazon Web Services Glue Data Quality ruleset actions. You can create and evaluate custom and recommended rulesets, optimizing data quality and accuracy.

These steps form a comprehensive approach to data quality and reliability on Amazon Web Services, helping organizations maintain high standards and achieve their goals.

To dive into the Amazon Web Services Glue Data Quality APIs, refer to

If you require any assistance in constructing this pipeline within the

About the authors

Avik Bhattacharjee is a Senior Partner Solution Architect at Amazon Web Services. He works with customers to build IT strategy, making digital transformation through the cloud more accessible, focusing on big data and analytics and AI/ML.

Amit Kumar Panda is a Data Architect at Amazon Web Services Professional Services who is passionate about helping customers build scalable data analytics solutions to enable making critical business decisions.

Neel Patel is a software engineer working within GlueML. He has contributed to the Amazon Web Services Glue Data Quality feature and hopes it will expand the repertoire for all Amazon Web Services CloudFormation users along with displaying the power and usability of Amazon Web Services Glue as a whole.

Edward Cho is a Software Development Engineer at Amazon Web Services Glue. He has contributed to the Amazon Web Services Glue Data Quality feature as well as the underlying open-source project Deequ.
