
Improving Reliability and Performance for Teleoperations for Autonomous Driving Vehicles on Amazon Web Services

The rapid advancement of autonomous driving technology is transforming the automotive industry, leading to improved safety, efficiency, and convenience. As self-driving vehicles evolve, automakers must address several challenges to ensure their widespread adoption. Among these challenges, reliable, secure, and low-latency communication between vehicles and teleoperation centers is critical for real-time responsiveness and decision-making in complex scenarios.

Teleoperation systems, as the ones used to control the

In this blog post, we explore how automakers and teleoperation providers can enhance the reliability and performance of teleoperations for autonomous driving vehicles by leveraging innovative technologies and services on Amazon Web Services, paving the way for a more connected and intelligent transportation future.

Technical and regulatory challenges faced by autonomous driving vehicles

Autonomous vehicles face technical challenges, especially in unexpected situations on the road. Current self-driving car technology struggles with a variety of issues, including road closures, accidents, or weather, often requiring human intervention. A solution to these challenges is human teleoperation, where a trained remote operator takes control when the autonomous system can’t handle a situation. For example, the image below shows a road affected by heavy snow, where trucks provided by the traffic authority are clearing it to provide safe passage for vehicles. In this situation, a human driver may not have the support of the usual road lines and signs, and needs to follow instructions from on-site traffic agents, communicate with other drivers, and pay attention to an ever-changing set of variables to get through the scenario safely, which is challenging for current autonomous driving technologies.

Figure 1: Road affected by heavy snow

The

- Lack of physical sensing: Difficulty estimating acceleration, speed, road inclination, or pedal feedback

- Human cognition and perception: Requires constant situation and spatial awareness, cognitive load management, depth perception, and mental model development

- Video communication and quality: Latency and variability, low frame rate, poor video resolution, absent image stitching or uncalibrated cameras

- Remote interaction with humans: Communicating with passengers, other drivers, pedestrians, and agency representatives

- Impaired visibility: Limited Field of View (FOV) and geometric FOV, lack of peripheral vision, fixed point of view, changing light conditions

- Lack of sounds: Absence of ambient sounds, sounds from other entities, and internal sounds

Thus, physical sensing, cognitive overload, and video communication and quality are top issues in teleoperations, with the latter being the focus of this blog post. Incorporating human teleoperation into autonomous driving systems requires robust communication and data transfer systems to support near real-time communication between the vehicle and the remote operator, with latencies an order of magnitude lower than the

The rapid development of autonomous driving technology has led to new regulatory frameworks in countries such as the

Those are areas where technologies like WebRTC, in combination with Amazon Web Services services, are beneficial. WebRTC (Web Real-Time Communication) provides low-latency, real-time video and audio communication capabilities to applications, while Amazon Web Services offers teleoperations system operators a scalable and secure cloud-based infrastructure they can use for transmitting and processing application data. In the next section, we will briefly review teleoperations’ history and current status in different contexts.

WebRTC: Real-time Communication for Teleoperations

Web-based remote control has evolved, with new technologies and protocols being developed by vendors and the open-source community to enable more sophisticated and reliable remote-control applications. One of the essential technologies in this area is

WebRTC is an open-source technology that enables real-time communication over the web, including audio and video chat, file sharing, and data transfer. One of the key features of WebRTC is its support for peer-to-peer communication, which lets two devices exchange media and data directly instead of routing it through a centralized server.

WebRTC has significantly impacted telemedicine, online education, and remote work industries by facilitating secure and efficient real-time communication, and its importance in our connected world will only grow.

Key features of WebRTC

WebRTC offers several features that make it well suited to the real-time communication that remote teleoperation of autonomous vehicles depends on, as we will see in the next sections.

Peer-to-peer connectivity

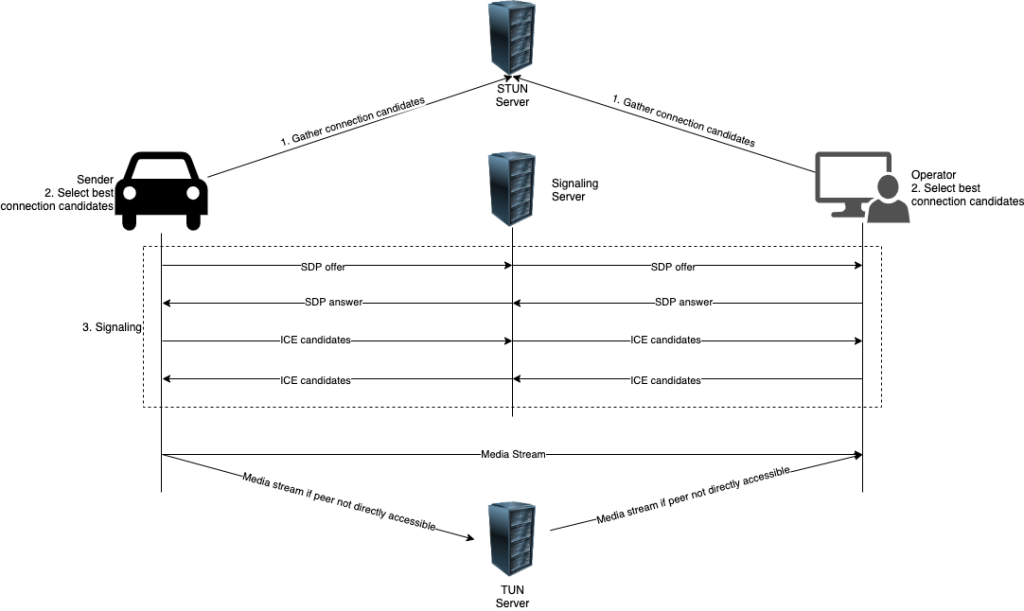

As mentioned before, one of WebRTC’s key features is direct peer-to-peer connectivity, even when the peers are behind Network Address Translators (NATs) or firewalls. We can see the connection process in the figure below.

Figure 2: WebRTC connection steps

WebRTC uses a collection of protocols and algorithms to establish the best network connection path between peers. It starts with peers collecting their IP addresses as seen by applications in different networks, selecting the best connection candidates, and negotiating the transport protocols, network addresses, and ports with each other. If a direct connection is not possible, both peers use a relay to bypass the connectivity issues.
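To make the candidate selection step more concrete, here is a minimal Python sketch of how connection candidates can be ranked. It uses the priority formula and the recommended type preferences from the ICE specification (RFC 8445); the `Candidate` class, the function names, and the example addresses are hypothetical and only serve the illustration. Real WebRTC stacks rank candidate pairs and confirm them with connectivity checks, which this sketch leaves out.

```python
from dataclasses import dataclass

# Recommended type preferences from RFC 8445: direct (host) candidates are
# preferred, relayed candidates are the last resort.
TYPE_PREFERENCE = {"host": 126, "prflx": 110, "srflx": 100, "relay": 0}


@dataclass(frozen=True)
class Candidate:
    """Hypothetical, simplified view of one ICE candidate."""
    address: str
    port: int
    kind: str                      # "host", "prflx", "srflx" or "relay"
    local_preference: int = 65535  # 0..65535, highest for a single interface
    component_id: int = 1          # 1 is conventionally the RTP component


def candidate_priority(candidate: Candidate) -> int:
    """priority = 2^24 * type pref + 2^8 * local pref + (256 - component ID)."""
    return (
        (TYPE_PREFERENCE[candidate.kind] << 24)
        + (candidate.local_preference << 8)
        + (256 - candidate.component_id)
    )


def rank_candidates(candidates: list[Candidate]) -> list[Candidate]:
    """Return candidates ordered from most to least preferred."""
    return sorted(candidates, key=candidate_priority, reverse=True)
```

With one host, one server-reflexive, and one relay candidate, `rank_candidates` puts the host candidate first and the relay last, which matches the behavior described above: the relay is only used when no direct path works.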

The design decision to establish peer-to-peer communication and not a client-server architecture has multiple advantages:

- It offers lower latency than client-server architectures

- It makes the connection more secure, as it removes any intermediate components

- It doesn’t demand a central server, so it doesn’t require central infrastructure and its related operations, making it more cost effective

- It can scale more easily to meet evolving business needs

Those points explain much of WebRTC’s success in so many real-time communication use cases.

Support for multiple video and audio codecs

Video codecs, which compress and decompress video data for efficient transmission, affect real-time communication quality, latency, and bandwidth requirements. The WebRTC specification doesn’t mandate specific codecs, with

Each codec has strengths and trade-offs, making them suited for different real-time communication scenarios. A

As these examples show, the codec choice is critical but not easy, and experimentation is key. It is also important to consider differences in licensing, which can play a role in codec selection, as some codecs are royalty free (VP8, VP9 and AV1) and some are not (H.264 and H.265).

Data channels

WebRTC’s data channel enables bidirectional communication of arbitrary data between peers. WebRTC designers implemented it to work alongside audio and video channels, exchanging control signals, text messages, or files without a separate connection or server. The data channel operates over the same peer-to-peer connection as audio and video channels, utilizing the same NAT traversal techniques and security measures. Yet, it functions independently, allowing performance customization based on use cases. In teleoperations for autonomous vehicles, the data channel is vital in transmitting control signals between the remote operator and the self-driving vehicle, including commands to start, stop, change speed or direction, or trigger emergency actions. Those features facilitate low-latency communication with the vehicle, ensuring real-time responsiveness and enhancing system safety and performance.
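As an illustration of the kind of control traffic a data channel could carry, the following Python sketch packs a driving command into a compact binary message and filters out stale or out-of-order commands on the vehicle side. The message layout, the field names, the `CommandFilter` class, and the 200 ms age limit are all assumptions made for this example, not part of WebRTC or of any Amazon Web Services SDK. It also assumes that the operator and vehicle clocks are synchronized.

```python
import struct
from dataclasses import dataclass

# Hypothetical wire layout, network byte order:
# sequence (uint32), send time in ms (uint64), steering angle in degrees
# (float32), target speed in m/s (float32), emergency stop flag (uint8).
_WIRE_FORMAT = "!IQffB"


@dataclass(frozen=True)
class ControlCommand:
    sequence: int
    sent_at_ms: int
    steering_deg: float
    speed_mps: float
    emergency_stop: bool


def encode(command: ControlCommand) -> bytes:
    """Serialize a command into the bytes sent over the data channel."""
    return struct.pack(
        _WIRE_FORMAT,
        command.sequence,
        command.sent_at_ms,
        command.steering_deg,
        command.speed_mps,
        int(command.emergency_stop),
    )


def decode(payload: bytes) -> ControlCommand:
    """Rebuild a command from the bytes received on the data channel."""
    sequence, sent_at_ms, steering, speed, stop = struct.unpack(_WIRE_FORMAT, payload)
    return ControlCommand(sequence, sent_at_ms, steering, speed, bool(stop))


class CommandFilter:
    """Accept only commands that are newer than the last one and still fresh."""

    def __init__(self, max_age_ms: int = 200):  # illustrative limit
        self.max_age_ms = max_age_ms
        self.last_sequence = -1

    def accept(self, command: ControlCommand, now_ms: int) -> bool:
        if command.sequence <= self.last_sequence:
            return False  # duplicate or out-of-order command
        if now_ms - command.sent_at_ms > self.max_age_ms:
            return False  # too old to act on safely
        self.last_sequence = command.sequence
        return True
```

A filter like this matters most when the data channel is configured as unordered or with limited retransmissions, a common choice for control signals where a late command is worse than a lost one.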

Adaptive streaming

WebRTC implements adaptive streaming by adjusting media stream quality in real time based on changing network conditions, ensuring a consistent user experience. It employs congestion control algorithms like
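The idea of adapting media quality to network conditions can be sketched as a simple loss-based rule: back off when packet loss is high, probe upward when it is low, and hold otherwise. The Python function below is a simplified illustration only. Its thresholds, step sizes, and bitrate limits are assumptions chosen for the example and are not taken from any specific WebRTC implementation.

```python
def next_bitrate_kbps(
    current_kbps: float,
    loss_fraction: float,
    min_kbps: int = 300,   # illustrative floor for a usable video stream
    max_kbps: int = 8000,  # illustrative ceiling for the encoder
) -> int:
    """Return the next target video bitrate given the observed packet loss."""
    if loss_fraction > 0.10:
        # Heavy loss: reduce the rate in proportion to the loss.
        target = current_kbps * (1 - 0.5 * loss_fraction)
    elif loss_fraction < 0.02:
        # Almost no loss: probe for more bandwidth with a small increase.
        target = current_kbps * 1.05
    else:
        # Moderate loss: keep the current rate.
        target = current_kbps
    # Keep the result inside the configured encoder limits.
    return round(max(min_kbps, min(max_kbps, target)))
```

In a real system this decision would typically also take delay measurements into account, and the resulting bitrate would be fed to the video encoder, which then adjusts resolution, frame rate, or quantization.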

Low latency

WebRTC utilizes peer-to-peer (P2P) capabilities, adaptive streaming, and efficient transport protocols, such as User Datagram Protocol (UDP), to provide low-latency communication between devices. Establishing a P2P connection removes the need for intermediary servers, reducing latency. Adaptive streaming allows WebRTC to adjust the media quality based on network conditions, ensuring smooth streaming with minimal delays.

UDP plays a crucial role in achieving low latency in WebRTC. Unlike Transmission Control Protocol (TCP), UDP is connectionless and has lower overhead, leading to faster data packet transmission. Using UDP as the underlying transport protocol, WebRTC can deliver audio and video streams with minimal latency, enabling real-time communication.

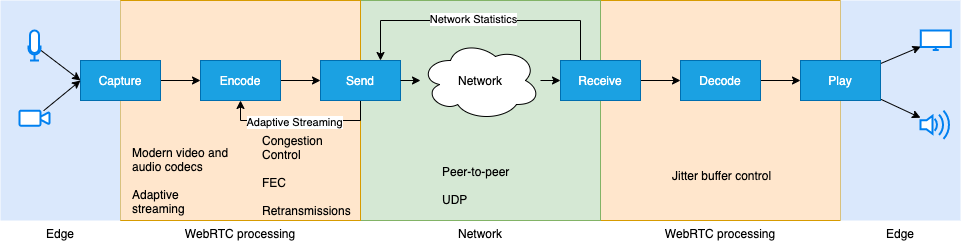

All the previous features give us the following picture of the entire chain, from capturing the video in the vehicle to displaying it on the operator’s screen, a delay also called the glass-to-glass video latency:

Figure 3: WebRTC key features to solve glass-to-glass latency

In combination with P2P connectivity, adaptive streaming, and UDP-based transport, WebRTC also manages jitter buffers and implements error recovery mechanisms to maintain low-latency communication even in challenging network conditions. A jitter buffer queues video packets as they arrive over the internet to provide a smoother experience to the end user. As the network path between peers is ever changing, video packets can be delayed or arrive out of order, and the jitter buffer helps to mitigate those situations. Because a jitter buffer that is too deep adds delay, there is a fine line between improving the end-user experience and keeping latencies inside the thresholds for real-time remote operations, which makes jitter buffer tuning another key element in WebRTC-based systems applied to remote operations.
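The trade-off between smoothness and delay can be seen in a minimal jitter buffer sketch. The Python class below holds up to `depth` packets and releases them in sequence order. It is a deliberately simplified, count-based model: real jitter buffers are time-based and adaptive, and this sketch ignores sequence number wraparound and duplicate packets. The class and parameter names are hypothetical.

```python
import heapq


class JitterBuffer:
    """Reorder packets by sequence number, holding at most `depth` of them."""

    def __init__(self, depth: int = 3):
        self.depth = depth        # deeper = smoother playback, more delay
        self._heap = []           # min-heap of (sequence, payload)
        self._last_released = -1  # highest sequence already handed out

    def push(self, sequence: int, payload: bytes) -> list:
        """Add an arriving packet and return packets that are ready to play."""
        if sequence <= self._last_released:
            return []  # arrived too late, its slot has already been played
        heapq.heappush(self._heap, (sequence, payload))
        released = []
        # Release the oldest packets once the buffer exceeds its depth.
        while len(self._heap) > self.depth:
            item = heapq.heappop(self._heap)
            self._last_released = item[0]
            released.append(item)
        return released

    def flush(self) -> list:
        """Drain all remaining packets in sequence order."""
        released = [heapq.heappop(self._heap) for _ in range(len(self._heap))]
        if released:
            self._last_released = released[-1][0]
        return released
```

With `depth=2`, packets arriving in the order 1, 3, 2, 5, 4 come out as 1, 2, 3, and so on, at the cost of each packet waiting until two newer ones have arrived. A larger depth tolerates more reordering but adds latency, which is the tuning problem described above.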

In summary, to successfully implement a WebRTC solution for teleoperations in autonomous driving, it is crucial to focus on three essential components:

- Client code: teleoperation providers must develop teleoperation systems using efficient and robust client-side code to manage video, audio, data streams, and all the network-related optimizations. Amazon Web Services supports customers with Software Development Kits (SDKs), please check our Amazon Kinesis Video Streams WebRTC Developer Guide.

- Support servers: Establish a highly available infrastructure to facilitate peer communication, manage user authentication, and handle network traversal.

- Reliable, low latency network path between peers: implement a network path that guarantees minimal latency and high-quality data transmission between peers.

In the next section, let’s see how automakers and teleoperation providers can create a teleoperation solution using Amazon Web Services services that helps address the challenges of autonomous driving and can help enhance the overall reliability and performance of autonomous vehicles.

Building a Reliable and Low-Latency Network for Teleoperations using Amazon Web Services Services and Partner Solutions

By using a combination of Amazon Web Services services and Amazon Web Services Partner solutions, teleoperation system providers can establish a more reliable and low-latency network between self-driving vehicles and remote operators. This section will present key solutions as

Amazon Kinesis Video Streams with WebRTC

Amazon

Amazon Web Services Direct Connect

Amazon Web Services Partner Solutions

Amazon Web Services Partners with leading mobile network operators (MNOs) like

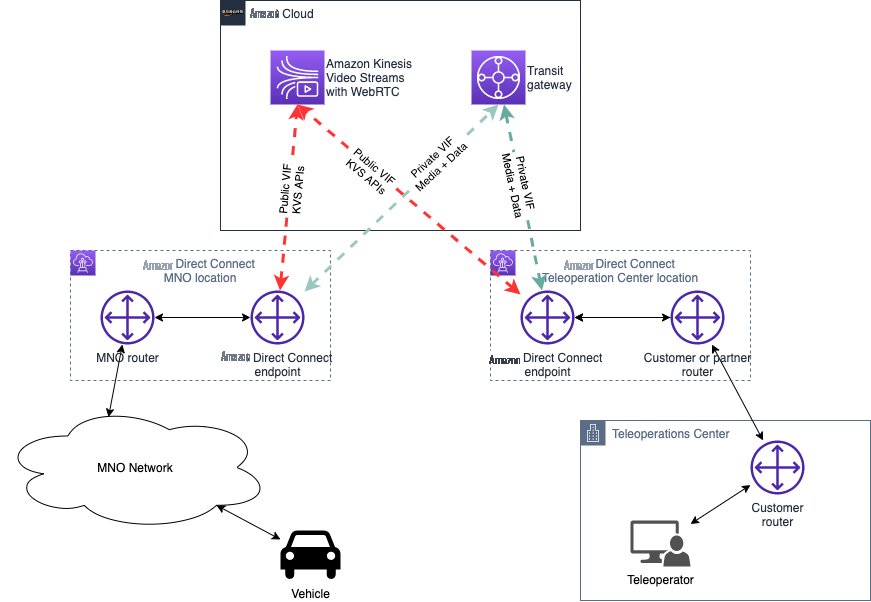

Combining MNO private networks and a direct connection to the Amazon Web Services network using Amazon Web Services Direct Connect, we can have an end-to-end low latency network as shown in the picture below:

Figure 4: Overview end-to-end network connectivity vehicle-cloud-teleoperations center

By utilizing Amazon Web Services services such as Amazon Kinesis Video Streams with WebRTC and Amazon Web Services Direct Connect, along with Amazon Web Services Partner solutions, you can build a more reliable and low-latency network infrastructure for teleoperations. This combination of services and solutions helps customers build more seamless, real-time communication between autonomous vehicles and remote operators, which can help enhance the autonomous driving solutions offered by automotive customers.

Conclusion

In conclusion, teleoperations are vital in developing and integrating autonomous driving technology. They serve as a critical link between human operators and self-driving vehicles, which helps automakers provide more reliable and seamless operations of their autonomous vehicles. As the autonomous vehicle industry grows, it becomes increasingly important to address the challenges associated with teleoperations, particularly regarding communication, performance, and security.

In teleoperations, leveraging WebRTC and Amazon Web Services services can offer many benefits to customers and the solutions they provide to their end customers, including real-time video streaming, reliable communication, low-latency data transfer, and customizable network configurations. Automotive companies can create a more robust and high-performance teleoperation infrastructure that helps enhance the efficiency and overall performance of their vehicles by using, in part, services such as Amazon Kinesis Video Streams with WebRTC and Amazon Web Services Direct Connect, together with Amazon Web Services Partner solutions.

Contact us via the
