
LightOn Lyra-FR model is now available on Amazon SageMaker

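We are excited to announce that the LightOn Lyra-FR foundation model is now available to customers using Amazon SageMaker. LightOn is a leader in building foundation models specialized in European languages. Lyra-FR is a state-of-the-art French language model that can be used to build conversational AI, copywriting tools, text classifiers, semantic search, and more. You can easily try out this model and then use it with

In this post, we demonstrate how to use the Lyra-FR model in SageMaker.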

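Foundation models

Foundation models typically have billions of parameters and can be adapted to a wide range of use cases. Today's best-known foundation models are used to summarize articles, create digital art, and generate code from simple text instructions. These models are expensive to train, so customers prefer to use existing pretrained foundation models and fine-tune them as needed rather than train their own. SageMaker provides a curated list of models that you can choose from on the SageMaker console. You can test these models directly in the web interface. When you want to use a foundation model at scale, you can do so without leaving SageMaker by using the prebuilt notebooks from the model provider. Because the models are hosted and deployed on AWS, you can rest assured that your data, whether used for evaluating the model or for using it at scale, is never shared with third parties.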

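Lyra-FR is the largest French-language model on the market today. It is a 10-billion-parameter model trained and made available by LightOn. Trained on a large, curated corpus of French data, Lyra-FR can write human-like text and solve complex tasks such as classification, question answering, and summarization, all while keeping inference reasonably fast: an average request completes in 1-2 seconds. You simply describe the task you want to perform in natural language, and Lyra-FR generates a response on par with that of a native French speaker. Lyra-FR offers business-ready intelligence primitives, such as steerable generation and text classification, in just a few lines of code. For more challenging tasks, performance can be improved with few-shot learning, by providing a few input-output examples in the prompt.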
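To make the few-shot idea concrete, here is a minimal sketch of how a prompt with a few input-output examples could be assembled. The helper name `build_few_shot_prompt`, the `Texte` / `Réponse` field labels, and the example sentences are illustrative assumptions; they are not taken from LightOn's documentation, and the sample notebook may format prompts differently.

```python
from typing import Sequence, Tuple


def build_few_shot_prompt(
    instruction: str,
    examples: Sequence[Tuple[str, str]],
    query: str,
    input_label: str = "Texte",      # assumed field label
    output_label: str = "Réponse",   # assumed field label
) -> str:
    """Assemble an instruction, worked examples, and the new input into one prompt."""
    blocks = [instruction.strip()]
    for source, target in examples:
        blocks.append(
            f"{input_label} : {source.strip()}\n{output_label} : {target.strip()}"
        )
    # Leave the final answer slot empty so the model completes it.
    blocks.append(f"{input_label} : {query.strip()}\n{output_label} :")
    return "\n\n".join(blocks)


prompt = build_few_shot_prompt(
    instruction="Résume chaque texte en une phrase.",
    examples=[
        (
            "Le musée ouvre une nouvelle aile consacrée à l'aquarelle.",
            "Le musée s'agrandit.",
        ),
    ],
    query="La ville inaugure un atelier d'aquarelle pour les enfants.",
)
print(prompt)
```

Ending the prompt on an empty answer slot is a common convention for completion-style models: the examples show the pattern, and the model is left to fill in the last output.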

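Using Lyra-FR on SageMaker

We walk you through using the Lyra-FR model in 3 simple steps:

- Discover - Find the Lyra-FR model on the AWS Management Console for SageMaker.

- Test - Test the model using the web interface.

- Deploy - Use the notebook to deploy the model and test its advanced capabilities.

Discover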

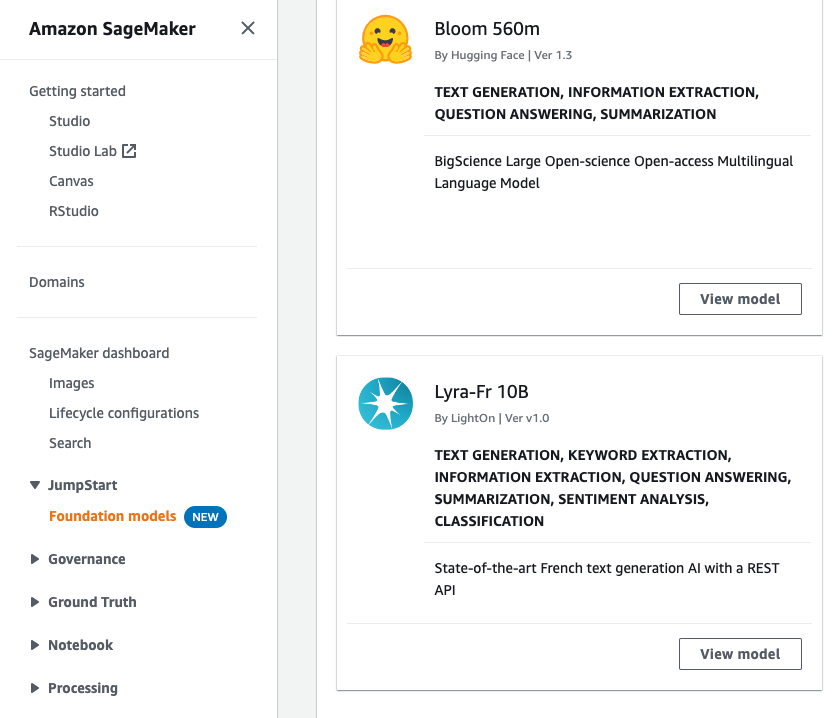

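To make foundation models like Lyra-FR easy to discover, we have consolidated all foundation models in one place. To find the Lyra-FR model, complete the following steps:

- Sign in to the AWS Management Console for SageMaker.

- In the left navigation pane, you should see a section called JumpStart, with Foundation models under it. If you don't have access yet, request access to this feature.

- Once your account is allow-listed, you will see a list of models on the right. Here you can find the Lyra-FR 10B model.

- Choosing "View model" displays the full model card with additional options.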

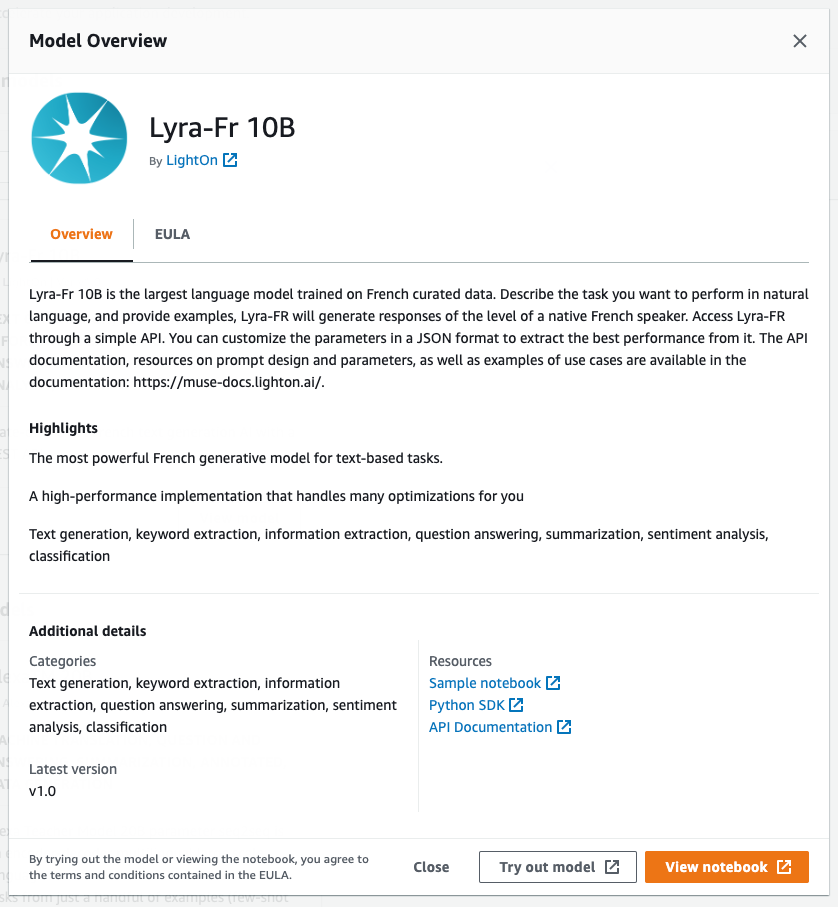

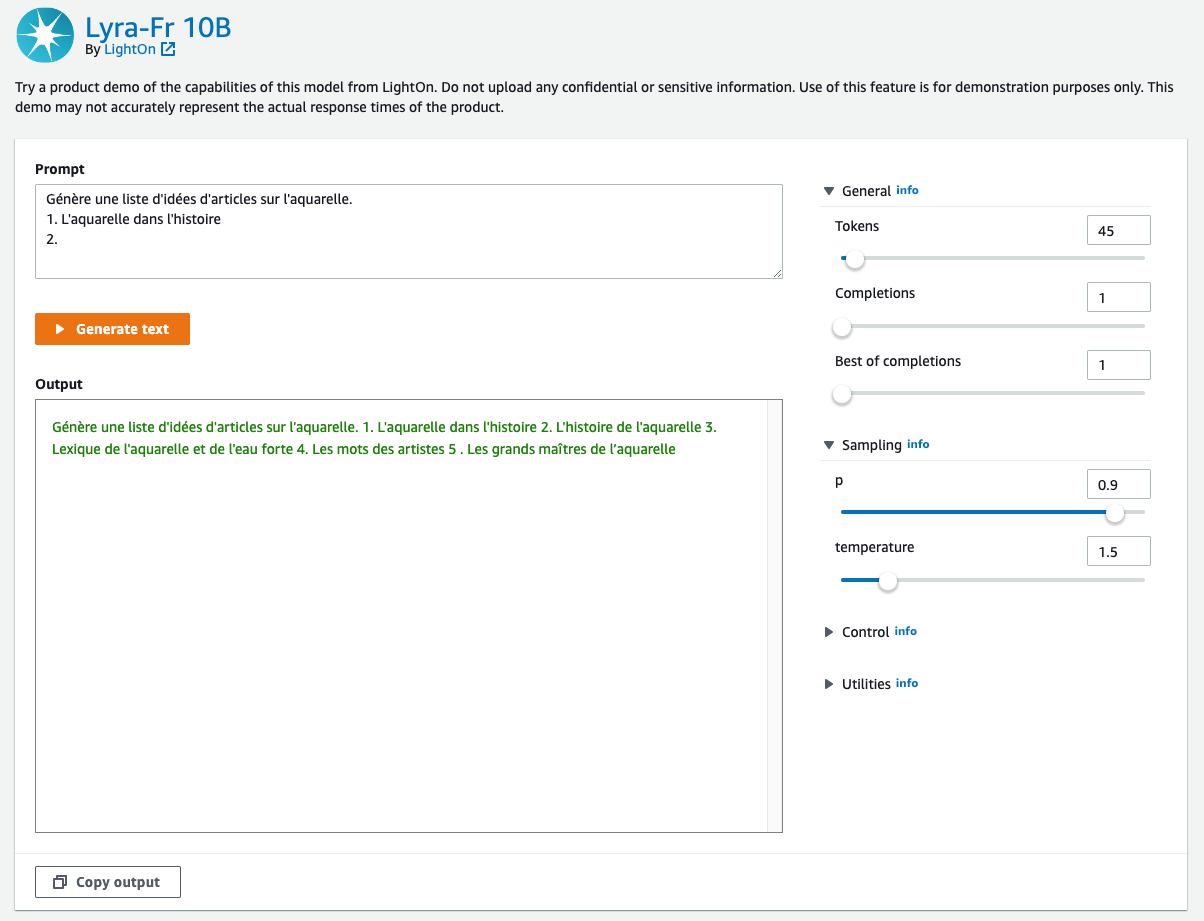

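Test

A common use case is to run ad hoc tests to make sure the model meets your needs. You can test the Lyra-FR model directly from the SageMaker console. In this example, we use a simple text prompt asking the model to generate a list of ideas for French-language articles on the topic of watercolor, or "l'aquarelle".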

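- From the model card shown in the previous section, choose "Try out model". This opens a new tab with the test interface.

- In this interface, provide the text input that you want to pass to the model. You can also adjust any parameters you like using the sliders on the right. When you are satisfied, choose "Generate text".

Note that foundation models and their outputs come from the model provider, and AWS is not responsible for the content or accuracy therein.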

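Deploy

Text generation models work best when you give them examples of the output you want. This is called few-shot learning. We use the Lyra-FR sample notebook to demonstrate this capability. The sample notebook covers how to deploy the Lyra-FR model on SageMaker, how to summarize and generate text, and few-shot learning.

It also includes examples of making inference requests either directly with JSON or with the Lyra Python SDK. The Lyra Python SDK takes care of formatting the input, calling the endpoint, and unpacking the output. There is one class for each endpoint: Create, Analyse, Select, Embed, Compare, and Tokenize. Note that this example uses an ml.p4d.24xlarge instance. If the default limit for your AWS account is 0, you need to request a limit increase for this GPU instance.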
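As a rough illustration of the "directly with JSON" path, the sketch below sends a JSON payload to a deployed endpoint through the boto3 `sagemaker-runtime` client and decodes the JSON reply. The `invoke_endpoint` call is the standard SageMaker runtime API; the endpoint name `lyra-fr-endpoint` and the `prompt` payload field are hypothetical placeholders, so check the sample notebook for the request schema the model actually expects.

```python
import json
from typing import Any, Dict


def invoke_json(runtime_client: Any, endpoint_name: str, payload: Dict[str, Any]) -> Any:
    """Send a JSON payload to a SageMaker endpoint and decode the JSON reply."""
    response = runtime_client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Accept="application/json",
        Body=json.dumps(payload).encode("utf-8"),
    )
    # The response body is a stream; read it fully before decoding.
    return json.loads(response["Body"].read().decode("utf-8"))


def example_call() -> Any:
    """Not run automatically: requires AWS credentials and a live endpoint."""
    import boto3  # imported here so invoke_json itself has no hard dependency

    runtime = boto3.client("sagemaker-runtime")
    # Hypothetical endpoint name and payload field; the real schema may differ.
    payload = {"prompt": "Idées d'articles sur l'aquarelle :"}
    return invoke_json(runtime, "lyra-fr-endpoint", payload)
```

Passing the client in as an argument keeps the helper easy to exercise with a stub before any GPU instance is running.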

SageMaker provides a managed notebook experience through SageMaker Studio. For details on how to set up SageMaker Studio, see

Let's look at how to run the notebook:

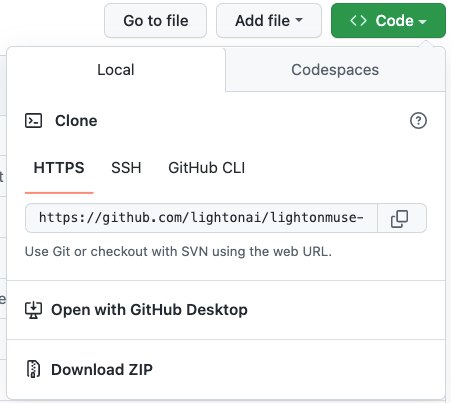

- 从本博客文章的 “发现” 部分转到模型卡,然后选择 “ 查看笔记本 ” 。你应该会看到在 GitHub 中打开了一个带有 Lyra-FR 笔记本的新选项卡。

-

在 GitHub 中,选择

lightonmuse-sagemaker-sdk

;这将带你进入存储库。选择

代码

按钮并复制 HTTPS 网址。

-

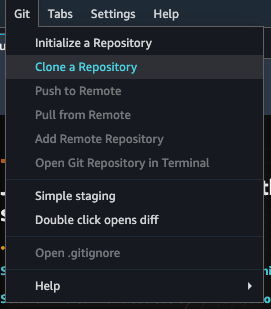

打开 SageMaker Studio。选择 “

克隆存储库

”, 然后粘贴从上面复制的 URL。

- 使用左侧的文件浏览器导航到 Lyra-FR 笔记本。

- 这款笔记本可以端到端运行,无需额外输入,还可以清理其创建的资源。我们可以看看 “使用 Create 进行情感分析” 的示例。此示例使用 Lyra Python SDK,通过给模型讲授一些示例,说明哪些文本应分为正面(正面)、负面(负面)或混合(减弱),从而演示少量学习。

-

你可以看到,使用 Lyra Python SDK,你所要做的就是提供 SageMaker 端点的名称和输入。SDK 为您处理所有解析、格式化和设置。

-

运行此提示符会返回最后一条语句是正面的。
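When a generative model is used as a classifier like this, the raw completion usually still needs to be mapped back to one of the expected labels. The sketch below shows one simple way to do that. The French label set (`Positif`, `Négatif`, `Mitigé`) and the helper `extract_label` are assumptions for illustration; they are not part of the Lyra Python SDK, which may already return a cleaned-up result.

```python
from typing import Optional, Sequence

# Assumed label set for the sentiment example; adjust to match your prompt.
LABELS = ("Positif", "Négatif", "Mitigé")


def extract_label(completion: str, labels: Sequence[str] = LABELS) -> Optional[str]:
    """Return the label that appears earliest in the completion, or None."""
    lowered = completion.casefold()
    best = None
    best_pos = len(lowered) + 1
    for label in labels:
        pos = lowered.find(label.casefold())
        if pos != -1 and pos < best_pos:
            best, best_pos = label, pos
    return best


print(extract_label(" positif\nAvis : le service était lent"))
```

Taking the earliest match rather than any match guards against the model continuing past the answer and starting a new example of its own.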

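Clean up

After you finish testing the endpoint, make sure you delete the SageMaker inference endpoint and delete the model to avoid incurring charges.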
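If you prefer to clean up programmatically rather than through the console, a minimal sketch with the boto3 `sagemaker` client could look like the following. The three delete calls are standard SageMaker APIs; the resource names are placeholders you would replace with the names created by your own deployment, and the sample notebook may already perform an equivalent cleanup.

```python
from typing import Any


def delete_inference_resources(
    sm_client: Any,
    endpoint_name: str,
    endpoint_config_name: str,
    model_name: str,
) -> None:
    """Delete the endpoint first, then its configuration, then the model."""
    sm_client.delete_endpoint(EndpointName=endpoint_name)
    sm_client.delete_endpoint_config(EndpointConfigName=endpoint_config_name)
    sm_client.delete_model(ModelName=model_name)


# Usage (requires AWS credentials; the names below are placeholders):
#   import boto3
#   delete_inference_resources(
#       boto3.client("sagemaker"),
#       endpoint_name="lyra-fr-endpoint",
#       endpoint_config_name="lyra-fr-endpoint-config",
#       model_name="lyra-fr-model",
#   )
```

The endpoint is what accrues instance charges, so it is deleted first; the endpoint configuration and model are removed afterwards to leave nothing behind.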

Conclusion

In this post, we showed you how to discover, test, and deploy the Lyra-FR model using Amazon SageMaker. Request access today in SageMaker


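*The specific Amazon Web Services generative AI services mentioned above are available only in Amazon Web Services Regions outside China. Amazon Web Services China recommends these services solely to help you grow your overseas business and/or learn about cutting-edge industry technology.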