Introduction

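In November 2021, AWS launched Karpenter, an open-source, high-performance Kubernetes cluster autoscaler licensed under the Apache License 2.0.

Karpenter helps improve application availability and cluster efficiency by rapidly launching right-sized compute resources in response to changing application load. Since the launch, we have seen a growing number of customers migrate from the Kubernetes Cluster Autoscaler to Karpenter. However, for customers running heterogeneous Amazon Elastic Kubernetes Service (Amazon EKS) clusters with Windows workloads, the lack of Windows node support in Karpenter has been a blocker until now.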

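The OSS community did an excellent job of starting the development of Windows workload support in Karpenter. The AWS team took it a step further: we reviewed the proposed design, added enhancements to improve the customer experience, and integrated it with our internal continuous integration (CI) process.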

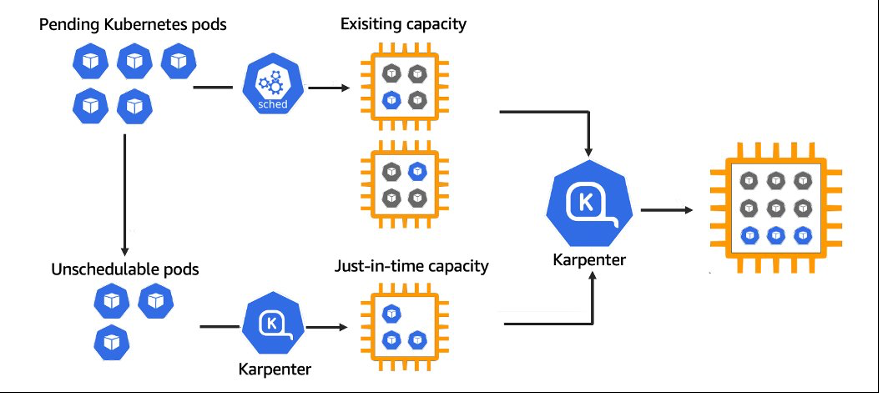

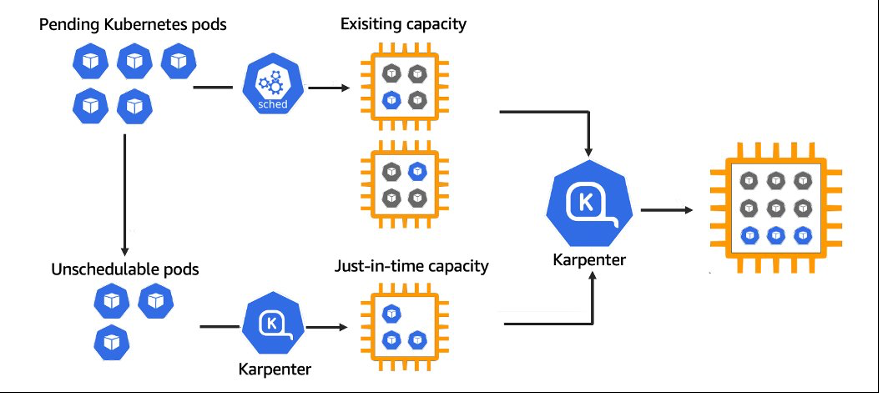

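When Karpenter is installed in your cluster, it observes the aggregate resource requests of unscheduled pods. It decides to launch new nodes when additional capacity is needed and to deprovision nodes when that capacity is no longer needed. By doing so, Karpenter reduces scheduling latency and infrastructure costs for the cluster.

Figure 1: Karpenter high-level scheduling

In this post, we focus on scaling Windows Server 2019 and Windows Server 2022 nodes out and in using Karpenter for Amazon EKS. To learn more about the Karpenter architecture and components, visit the Karpenter website.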

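Prerequisites

- Make sure you run the eksctl commands with an AWS Identity and Access Management (AWS IAM) profile that has permission to create and manage Amazon EKS. This AWS IAM security principal is used for the AWS Command Line Interface (AWS CLI) configuration in the "Getting started" section below.
- Make sure you are using eksctl v0.124.0 or later to work with Karpenter.
- Install the AWS CLI, kubectl, and eksctl on your development machine by following the Getting started section of the Amazon EKS documentation.
- Alternatively, you can use AWS Cloud9 or AWS CloudShell for deployment and maintenance tasks.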
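The eksctl version requirement above can be checked before you start. The following is a minimal sketch, not an official check: the `version_ge` helper is a name made up for illustration, it relies on `sort -V` from GNU coreutils, and it assumes that `eksctl version` prints a bare version string.

```shell
# True when version $1 is greater than or equal to version $2.
# Relies on `sort -V` (version sort), available in GNU coreutils.
version_ge() {
  [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

required="0.124.0"

# Only check when eksctl is installed; strip a leading "v" if present.
if command -v eksctl >/dev/null 2>&1; then
  installed="$(eksctl version | sed 's/^v//')"
  if version_ge "${installed}" "${required}"; then
    echo "eksctl ${installed} is new enough"
  else
    echo "eksctl ${installed} is older than ${required}; please upgrade" >&2
  fi
fi
```

The comparison sorts the two versions and tests whether the required one comes first, which avoids comparing version strings as plain text.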

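Solution overview

- Create operating system variables for use throughout the post.
- Deploy the Karpenter service requirements.
- Create an Amazon EKS cluster with the necessary iamIdentityMappings for Karpenter.
- Enable Amazon EKS Windows support.
- Install Karpenter with Helm.
- Create the Karpenter Provisioners and node templates.
- Test Karpenter for Windows: scale out.
- Test Karpenter for Windows: scale in.
- Clean up the test resources.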

Walkthrough

1. Create operating system variables for use throughout the post

export KARPENTER_VERSION=v0-c990a2d9fb10c1bfeffd5c6af64bf8575536d67e

export AWS_PARTITION="aws"

export CLUSTER_NAME="windows-karpenter-demo"

export AWS_DEFAULT_REGION="us-west-2"

export AWS_ACCOUNT_ID="$(aws sts get-caller-identity --query Account --output text)"

export TEMPOUT=$(mktemp)
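Later steps interpolate these variables into ARNs and stack names, so an empty value (for example, `AWS_ACCOUNT_ID` when the AWS CLI call fails) can produce confusing errors. A small guard along these lines may help. It is a sketch only, and `require_vars` is a hypothetical helper name rather than part of any tool used in this post.

```shell
# Hypothetical helper: reports every named variable that is unset or empty.
require_vars() {
  missing=0
  for name in "$@"; do
    # Indirect lookup of the variable whose name is stored in $name.
    eval "value=\${${name}:-}"
    if [ -z "${value}" ]; then
      echo "missing variable: ${name}" >&2
      missing=1
    fi
  done
  [ "${missing}" -eq 0 ]
}

# Check the variables exported in this step.
require_vars KARPENTER_VERSION AWS_PARTITION CLUSTER_NAME \
  AWS_DEFAULT_REGION AWS_ACCOUNT_ID TEMPOUT \
  || echo "export the variables above before continuing" >&2
```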

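2. Create the Karpenter service requirements

Karpenter integrates directly with the Amazon Elastic Compute Cloud (Amazon EC2) API endpoints and can take specific actions in response to events such as Spot interruptions or instance state changes.

The following command uses AWS CloudFormation to automatically deploy the necessary AWS services and components, such as the Amazon EventBridge rules for the event messages that are sent through an Amazon SQS queue.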

curl -fsSL https://karpenter.sh/v0.29/getting-started/getting-started-with-karpenter/cloudformation.yaml > $TEMPOUT \
&& aws cloudformation deploy \
  --stack-name "Karpenter-${CLUSTER_NAME}" \
  --template-file "${TEMPOUT}" \
  --capabilities CAPABILITY_NAMED_IAM \
  --parameter-overrides "ClusterName=${CLUSTER_NAME}"

After the AWS CloudFormation template runs successfully, you will see the following output:

Waiting for changeset to be created..

Waiting for stack create/update to complete

Successfully created/updated stack - Karpenter-windows-karpenter-demo

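3. Create an Amazon EKS cluster with the necessary iamIdentityMappings for Karpenter

Next, we use eksctl to deploy a temporary Amazon EKS cluster to test the Karpenter integration with Windows. The configuration creates the AWS IAM role for the Karpenter service account and adds the IAM identity mapping for the Karpenter node role to the Kubernetes ConfigMap.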

eksctl create cluster -f - <<EOF
---
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig
metadata:
  name: ${CLUSTER_NAME}
  region: ${AWS_DEFAULT_REGION}
  version: "1.27"
  tags:
    karpenter.sh/discovery: ${CLUSTER_NAME}
iam:
  withOIDC: true
  serviceAccounts:
  - metadata:
      name: karpenter
      namespace: karpenter
    roleName: ${CLUSTER_NAME}-karpenter
    attachPolicyARNs:
    - arn:${AWS_PARTITION}:iam::${AWS_ACCOUNT_ID}:policy/KarpenterControllerPolicy-${CLUSTER_NAME}
    roleOnly: true
iamIdentityMappings:
- arn: "arn:${AWS_PARTITION}:iam::${AWS_ACCOUNT_ID}:role/KarpenterNodeRole-${CLUSTER_NAME}"
  username: system:node:{{EC2PrivateDNSName}}
  groups:
  - system:bootstrappers
  - system:nodes
managedNodeGroups:
- instanceType: m5.large
  amiFamily: AmazonLinux2
  name: ${CLUSTER_NAME}-linux-ng
  desiredCapacity: 2
  minSize: 1
  maxSize: 10
EOF

export CLUSTER_ENDPOINT="$(aws eks describe-cluster --name ${CLUSTER_NAME} --query "cluster.endpoint" --output text)"

export KARPENTER_IAM_ROLE_ARN="arn:${AWS_PARTITION}:iam::${AWS_ACCOUNT_ID}:role/${CLUSTER_NAME}-karpenter"

echo $CLUSTER_ENDPOINT $KARPENTER_IAM_ROLE_ARN

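eksctl uses AWS CloudFormation to create all the resources needed to build the Amazon EKS cluster. After the cluster is created successfully, you will see output similar to the following. If cluster creation fails, the reason is reported in the AWS CLI output (or in the AWS CloudFormation console).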

2023-06-14 06:20:19 [✔] all EKS cluster resources for "windows-karpenter-demo" have been created

2023-06-14 06:20:19 [ℹ] nodegroup "windows-karpenter-demo-linux-ng" has 2 node(s)

2023-06-14 06:20:19 [ℹ] node "ip-192-168-12-160.ec2.internal" is ready

2023-06-14 06:20:19 [ℹ] node "ip-192-168-53-156.ec2.internal" is ready

2023-06-14 06:20:19 [ℹ] waiting for at least 1 node(s) to become ready in "windows-karpenter-demo-linux-ng"

2023-06-14 06:20:19 [ℹ] nodegroup "windows-karpenter-demo-linux-ng" has 2 node(s)

2023-06-14 06:20:19 [ℹ] node "ip-192-168-12-160.ec2.internal" is ready

2023-06-14 06:20:19 [ℹ] node "ip-192-168-53-156.ec2.internal" is ready

2023-06-14 06:20:20 [ℹ] kubectl command should work with "/Users/bpfeiff/.kube/config", try 'kubectl get nodes'

2023-06-14 06:20:20 [✔] EKS cluster "windows-karpenter-demo" in "us-east-1" region is ready

4. Enable Amazon EKS Windows support

To deploy Windows nodes to our cluster, we need to enable Amazon EKS Windows support.

kubectl apply -f - <<EOF
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: amazon-vpc-cni
  namespace: kube-system
data:
  enable-windows-ipam: "true"
EOF
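To confirm that the setting took effect, a read-back along these lines may help. This is a minimal sketch: the `windows_ipam_enabled` helper name is made up for illustration, and it assumes kubectl is already pointed at the cluster created in step 3.

```shell
# Hypothetical helper: succeeds only when the amazon-vpc-cni ConfigMap
# reports enable-windows-ipam as "true".
windows_ipam_enabled() {
  value="$(kubectl get configmap amazon-vpc-cni -n kube-system \
    -o jsonpath='{.data.enable-windows-ipam}' 2>/dev/null)"
  [ "${value}" = "true" ]
}

# Only query the cluster when kubectl is installed.
if command -v kubectl >/dev/null 2>&1; then
  if windows_ipam_enabled; then
    echo "Windows IPAM is enabled"
  else
    echo "Windows IPAM is not enabled (or the cluster is unreachable)" >&2
  fi
fi
```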

5. Install Karpenter with Helm

Next, we use Helm to install Karpenter.

# Log out of the helm registry to perform an unauthenticated pull against the public ECR
helm registry logout public.ecr.aws

helm upgrade --install karpenter oci://public.ecr.aws/karpenter/karpenter --version ${KARPENTER_VERSION} --namespace karpenter --create-namespace \
  --set serviceAccount.annotations."eks\.amazonaws\.com/role-arn"=${KARPENTER_IAM_ROLE_ARN} \
  --set settings.aws.clusterName=${CLUSTER_NAME} \
  --set settings.aws.defaultInstanceProfile=KarpenterNodeInstanceProfile-${CLUSTER_NAME} \
  --set settings.aws.interruptionQueueName=${CLUSTER_NAME} \
  --set controller.resources.requests.cpu=1 \
  --set controller.resources.requests.memory=1Gi \
  --set controller.resources.limits.cpu=1 \
  --set controller.resources.limits.memory=1Gi \
  --wait

After a successful installation, you will see the following output.

Release "karpenter" does not exist. Installing it now.

Pulled: public.ecr.aws/karpenter/karpenter:v0-c990a2d9fb10c1bfeffd5c6af64bf8575536d67e

Digest: sha256:33e2597488e3359653515bb7bd43a4ed6c1e811cb95c261175f8808a9ea4fc97

NAME: karpenter

LAST DEPLOYED: Wed Jun 14 08:16:36 2023

NAMESPACE: karpenter

STATUS: deployed

REVISION: 1

TEST SUITE: None

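6. Create the Provisioners

Now we create two Karpenter Provisioners in the same Amazon EKS cluster, one to support Windows Server 2019 and one to support Windows Server 2022. A Karpenter Provisioner sets constraints on the nodes that Karpenter can create and on the pods that can run on those nodes.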

cat <<EOF | kubectl apply -f -
apiVersion: karpenter.sh/v1alpha5
kind: Provisioner
metadata:
  name: windows2019
spec:
  requirements:
    - key: karpenter.sh/capacity-type
      operator: In
      values: ["on-demand"]
    - key: kubernetes.io/os
      operator: In
      values: ["windows"]
  limits:
    resources:
      cpu: 1000
  providerRef:
    name: windows2019
  ttlSecondsAfterEmpty: 30
---
apiVersion: karpenter.k8s.aws/v1alpha1
kind: AWSNodeTemplate
metadata:
  name: windows2019
spec:
  subnetSelector:
    karpenter.sh/discovery: ${CLUSTER_NAME}
  securityGroupSelector:
    karpenter.sh/discovery: ${CLUSTER_NAME}
  amiFamily: Windows2019
  metadataOptions:
    httpEndpoint: enabled
    httpProtocolIPv6: disabled
    httpPutResponseHopLimit: 2
    httpTokens: required
---
apiVersion: karpenter.sh/v1alpha5
kind: Provisioner
metadata:
  name: windows2022
spec:
  requirements:
    - key: karpenter.sh/capacity-type
      operator: In
      values: ["on-demand"]
    - key: kubernetes.io/os
      operator: In
      values: ["windows"]
  limits:
    resources:
      cpu: 1000
  providerRef:
    name: windows2022
  ttlSecondsAfterEmpty: 30
---
apiVersion: karpenter.k8s.aws/v1alpha1
kind: AWSNodeTemplate
metadata:
  name: windows2022
spec:
  subnetSelector:
    karpenter.sh/discovery: ${CLUSTER_NAME}
  securityGroupSelector:
    karpenter.sh/discovery: ${CLUSTER_NAME}
  amiFamily: Windows2022
  metadataOptions:
    httpEndpoint: enabled
    httpProtocolIPv6: disabled
    httpPutResponseHopLimit: 2
    httpTokens: required
EOF
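Before scaling any workloads, it can be useful to confirm that all four objects from this step exist. The following is a sketch under stated assumptions: `missing_karpenter_objects` is a hypothetical helper name, and it assumes kubectl is configured for the cluster and that the Karpenter CRDs were installed by the Helm chart in step 5.

```shell
# Hypothetical helper: prints kind/name for each expected object that
# kubectl cannot find; prints nothing when all four exist.
missing_karpenter_objects() {
  for kind in provisioner awsnodetemplate; do
    for name in windows2019 windows2022; do
      kubectl get "${kind}" "${name}" >/dev/null 2>&1 \
        || echo "${kind}/${name}"
    done
  done
}

# Only query the cluster when kubectl is installed.
if command -v kubectl >/dev/null 2>&1; then
  missing_karpenter_objects
fi
```

An empty result means both Provisioners and both AWSNodeTemplates were found.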

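7. Scale out the deployment

Our Amazon EKS cluster now has all the components required to run Windows nodes and Karpenter. We scale out a sample application to watch Karpenter automatically add nodes to the Amazon EKS cluster based on demand.

7.1 Run the following code to create the Windows Server 2022 sample application.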

cat <<EOF | kubectl apply -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  name: windows-server-iis-simple-2022
spec:
  selector:
    matchLabels:
      app: windows-server-iis-simple-2022
      tier: backend
      track: stable
  replicas: 0
  template:
    metadata:
      labels:
        app: windows-server-iis-simple-2022
        tier: backend
        track: stable
    spec:
      containers:
      - name: windows-server-iis-simple-2022
        image: mcr.microsoft.com/windows/servercore/iis:windowsservercore-ltsc2022
        imagePullPolicy: IfNotPresent
        command:
        - powershell.exe
        - -command
        - while(1){sleep 2; ping -t localhost;}
      nodeSelector:
        kubernetes.io/os: windows
        node.kubernetes.io/windows-build: 10.0.20348
EOF

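The Windows Server version used by each pod must match the version of the node. If you want to use multiple Windows Server versions in the same cluster, you should set additional node labels and nodeSelector fields. To simplify this, Kubernetes automatically adds a label named node.kubernetes.io/windows-build to Windows nodes. This label reflects the Windows major, minor, and build numbers that must match for compatibility. The following values are used for each Windows Server version: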

|   | Product Name        | Version    |
|---|---------------------|------------|
| 1 | Windows Server 2019 | 10.0.17763 |
| 2 | Windows Server 2022 | 10.0.20348 |

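Based on the build version specified in the pod nodeSelector, Karpenter launches a new Windows node with the matching operating system. For example, if you specify the build version as 10.0.17763, Karpenter launches the Windows node using the Windows 2019 Provisioner.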
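The relationship between build numbers and Provisioners can be written down as a small lookup. This is an illustration of the mapping described here, not Karpenter's own selection logic. The `provisioner_for_build` helper is a hypothetical name, and the Provisioner names are the ones created in step 6.

```shell
# Illustrative lookup only (not Karpenter's own logic): maps a
# node.kubernetes.io/windows-build value to the Provisioner from step 6.
provisioner_for_build() {
  case "$1" in
    10.0.17763) echo "windows2019" ;;
    10.0.20348) echo "windows2022" ;;
    *) echo "unknown build: $1" >&2; return 1 ;;
  esac
}

provisioner_for_build 10.0.17763   # prints windows2019
provisioner_for_build 10.0.20348   # prints windows2022

# With cluster access, the label can be shown as an extra column.
if command -v kubectl >/dev/null 2>&1; then
  kubectl get nodes -L node.kubernetes.io/windows-build \
    || echo "could not list nodes" >&2
fi
```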

For more information, see the guide for running Windows containers in Kubernetes.

7.2 Run the following command to scale out your Windows Server 2022 sample application.

kubectl scale deployment windows-server-iis-simple-2022 --replicas 10

7.3 You can use the Karpenter logs to track the scale-out progress.

kubectl logs -f -n karpenter -l app.kubernetes.io/name=karpenter -c controller

The following output shows the Windows Server 2022 Karpenter Provisioner scaling from 0 nodes to 1 to support the 10 replicas we requested.

2023-06-14T12:19:01.581Z INFO controller.machine_lifecycle launched machine {"commit": "c990a2d", "machine": "windows2022-4hq46", "provisioner": "windows2022", "provider-id": "aws:///us-east-1f/i-039507775a01898e6", "instance-type": "c6a.xlarge", "zone": "us-east-1f", "capacity-type": "on-demand", "allocatable": {"cpu":"3920m","ephemeral-storage":"44Gi","memory":"6012Mi","pods":"110","vpc.amazonaws.com/PrivateIPv4Address":"14"}}
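When following the logs, it can be handy to pull a single field out of a line like the one above. The sketch below uses a hypothetical `log_field` helper built on sed. It assumes each log entry is on one line and that the field holds a quoted string value. The sample line is a trimmed copy of the entry shown above.

```shell
# Hypothetical helper: reads log lines on stdin and prints the quoted
# string value of the field named in $1, for lines that contain it.
# Intended for simple field names without regex metacharacters.
log_field() {
  sed -n "s|.*\"$1\": *\"\([^\"]*\)\".*|\1|p"
}

# Trimmed copy of the "launched machine" entry from this post.
sample='2023-06-14T12:19:01.581Z INFO controller.machine_lifecycle launched machine {"commit": "c990a2d", "machine": "windows2022-4hq46", "provisioner": "windows2022", "instance-type": "c6a.xlarge", "zone": "us-east-1f", "capacity-type": "on-demand"}'

printf '%s\n' "${sample}" | log_field instance-type   # prints c6a.xlarge
printf '%s\n' "${sample}" | log_field provisioner     # prints windows2022
```

The same helper can be placed at the end of the `kubectl logs` pipeline from step 7.3 to list instance types as machines are launched.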

7.4 Run the following command to track the rollout progress of the pods.

kubectl rollout status deploy/windows-server-iis-simple-2022

You will see the 10 replicas being created on our new Karpenter-provisioned Windows worker node.

Waiting for deployment "windows-server-iis-simple-2022" rollout to finish: 0 of 10 updated replicas are available...

Waiting for deployment "windows-server-iis-simple-2022" rollout to finish: 1 of 10 updated replicas are available...

Waiting for deployment "windows-server-iis-simple-2022" rollout to finish: 2 of 10 updated replicas are available...

Waiting for deployment "windows-server-iis-simple-2022" rollout to finish: 3 of 10 updated replicas are available...

Waiting for deployment "windows-server-iis-simple-2022" rollout to finish: 4 of 10 updated replicas are available...

Waiting for deployment "windows-server-iis-simple-2022" rollout to finish: 5 of 10 updated replicas are available...

Waiting for deployment "windows-server-iis-simple-2022" rollout to finish: 6 of 10 updated replicas are available...

Waiting for deployment "windows-server-iis-simple-2022" rollout to finish: 7 of 10 updated replicas are available...

Waiting for deployment "windows-server-iis-simple-2022" rollout to finish: 8 of 10 updated replicas are available...

Waiting for deployment "windows-server-iis-simple-2022" rollout to finish: 9 of 10 updated replicas are available...

deployment "windows-server-iis-simple-2022" successfully rolled out

7.5 Run the following code to create the Windows Server 2019 sample deployment.

cat <<EOF | kubectl apply -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  name: windows-server-iis-simple-2019
spec:
  selector:
    matchLabels:
      app: windows-server-iis-simple-2019
      tier: backend
      track: stable
  replicas: 0
  template:
    metadata:
      labels:
        app: windows-server-iis-simple-2019
        tier: backend
        track: stable
    spec:
      containers:
      - name: windows-server-iis-simple-2019
        image: mcr.microsoft.com/windows/servercore/iis:windowsservercore-ltsc2019
        imagePullPolicy: IfNotPresent
        command:
        - powershell.exe
        - -command
        - while(1){sleep 2; ping -t localhost;}
      nodeSelector:
        kubernetes.io/os: windows
        node.kubernetes.io/windows-build: 10.0.17763
EOF

7.6 Run the following command to scale out your Windows Server 2019 sample application.

kubectl scale deployment windows-server-iis-simple-2019 --replicas 10

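As more containers are requested for scheduling, Karpenter launches a new Windows Server 2019 worker node. The process is the same as for Windows Server 2022, and you can reuse the steps above to track the launch of the Windows Server 2019 worker node.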

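8. Scale in the deployment

Karpenter handles both scale-out and scale-in of Windows nodes based on demand. Now we tear down the sample applications and watch Karpenter terminate our Windows nodes.

8.1 Run the following command to delete your sample application deployments.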

kubectl delete deployment windows-server-iis-simple-2022

kubectl delete deployment windows-server-iis-simple-2019

The Windows instances that Karpenter launched earlier are now terminated. You can use the Karpenter logs to track the scale-in progress.

kubectl logs -f -n karpenter -l app.kubernetes.io/name=karpenter -c controller

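After all pods are terminated, Karpenter removes the empty instances once the 30-second ttlSecondsAfterEmpty set in the Provisioners has elapsed.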

2023-06-20T16:27:12.878Z DEBUG controller.node added TTL to empty node {"commit": "c990a2d", "node": "ip-192-168-99-4.ec2.internal", "provisioner": "windows2022"}

2023-06-20T16:27:15.140Z DEBUG controller.node added TTL to empty node {"commit": "c990a2d", "node": "ip-192-168-88-252.ec2.internal", "provisioner": "windows2019"}

2023-06-20T16:27:42.051Z INFO controller.deprovisioning deprovisioning via emptiness delete, terminating 1 machines ip-192-168-99-4.ec2.internal/c6a.xlarge/on-demand {"commit": "c990a2d"}

2023-06-20T16:27:42.138Z INFO controller.termination cordoned node {"commit": "c990a2d", "node": "ip-192-168-99-4.ec2.internal"}

2023-06-20T16:27:42.478Z INFO controller.termination deleted node {"commit": "c990a2d", "node": "ip-192-168-99-4.ec2.internal"}

2023-06-20T16:27:42.751Z INFO controller.machine_termination deleted machine {"commit": "c990a2d", "machine": "windows2022-4hq46", "node": "ip-192-168-99-4.ec2.internal", "provisioner": "windows2022", "provider-id": "aws:///us-east-1f/i-039507775a01898e6"}

2023-06-20T16:27:54.105Z INFO controller.deprovisioning deprovisioning via emptiness delete, terminating 1 machines ip-192-168-88-252.ec2.internal/c6a.xlarge/on-demand {"commit": "c990a2d"}

2023-06-20T16:27:54.177Z INFO controller.termination cordoned node {"commit": "c990a2d", "node": "ip-192-168-88-252.ec2.internal"}

2023-06-20T16:27:54.480Z INFO controller.termination deleted node {"commit": "c990a2d", "node": "ip-192-168-88-252.ec2.internal"}

2023-06-20T16:27:54.754Z INFO controller.machine_termination deleted machine {"commit": "c990a2d", "machine": "windows2019-khmc5", "node": "ip-192-168-88-252.ec2.internal", "provisioner": "windows2019", "provider-id": "aws:///us-east-1a/i-0978aeb1680f37d7c"}

2023-06-20T16:31:21.596Z DEBUG controller.awsnodetemplate discovered subnets {"commit": "c990a2d", "awsnodetemplate": "windows2019", "subnets": ["subnet-05d7fed709f082b75 (us-east-1a)", "subnet-0109ebad1a6808805 (us-east-1f)", "subnet-0ff0ebe5e1a8630f1 (us-east-1a)", "subnet-0d01b14a3e9c91d1f (us-east-1f)"]}

2023-06-20T16:33:19.192Z DEBUG controller.deprovisioning discovered subnets {"commit": "c990a2d", "subnets": ["subnet-05d7fed709f082b75 (us-east-1a)", "subnet-0109ebad1a6808805 (us-east-1f)", "subnet-0ff0ebe5e1a8630f1 (us-east-1a)", "subnet-0d01b14a3e9c91d1f (us-east-1f)"]} discovered instance types {"commit": "c990a2d", "count": 649}

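Cleaning up

When you are finished, clean up the resources associated with the sample cluster deployment to avoid incurring unnecessary charges.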

eksctl delete cluster --name ${CLUSTER_NAME} --region ${AWS_DEFAULT_REGION}

If this command times out, you can run it again to confirm that the cluster was deleted successfully.
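The AWS CloudFormation stack deployed in step 2 is separate from the stacks that eksctl manages, so it may need to be removed as well. The sketch below is an assumption on our part rather than part of the original walkthrough. The `karpenter_stack_name` helper is a hypothetical name, and the stack name follows the `Karpenter-${CLUSTER_NAME}` pattern used in step 2.

```shell
# Hypothetical helper: builds the stack name used in step 2.
karpenter_stack_name() {
  printf 'Karpenter-%s\n' "$1"
}

# Only attempt deletion when the AWS CLI is installed and CLUSTER_NAME is set.
if command -v aws >/dev/null 2>&1 && [ -n "${CLUSTER_NAME:-}" ]; then
  stack="$(karpenter_stack_name "${CLUSTER_NAME}")"
  aws cloudformation delete-stack --stack-name "${stack}" \
    && aws cloudformation wait stack-delete-complete --stack-name "${stack}" \
    || echo "deletion of ${stack} did not complete" >&2
fi
```

Run this only after the cluster itself has been deleted, because the stack holds the IAM resources that the Karpenter nodes and controller rely on.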

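Conclusion

In this post, we showed how you can use Karpenter to seamlessly scale Windows worker nodes out and in on Amazon EKS. Customers no longer need to maintain two autoscaling solutions on heterogeneous Amazon EKS clusters with both Windows and Linux nodes.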

A big shout-out to topikachu, who took the initiative to kick off this development.