
Improve application performance on Amazon RDS for MySQL and MariaDB instances and MySQL Multi-AZ DB clusters with Optimized Writes

What is Optimized Writes?

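By default, popular Linux file systems use a 4 KB block size, because storage devices typically guarantee atomicity only for writes aligned to 4 KB blocks. However, MySQL and MariaDB (with the InnoDB storage engine) store table data in 16 KB pages by default. This means every page the database writes turns into multiple 4 KB block writes on the underlying file system. If an operating system crash or power loss occurs in the middle of such a write, the page can be left incompletely written, producing a torn page.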
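To make the arithmetic concrete: a 16 KB page spans 16 / 4 = 4 file system blocks, and a crash can persist any prefix of them. The following Python sketch simulates that. It is a simplified model, not InnoDB code: it uses `zlib.crc32` in a 4-byte trailer as a stand-in for InnoDB's page checksum, and the helper names are hypothetical.

```python
import zlib

PAGE_SIZE = 16 * 1024      # InnoDB default page size
FS_BLOCK_SIZE = 4 * 1024   # typical Linux file system block size


def blocks_per_page(page_size=PAGE_SIZE, block_size=FS_BLOCK_SIZE):
    """Number of file system block writes needed to persist one page."""
    return page_size // block_size


def make_page(fill):
    """Build a page whose last 4 bytes are a CRC32 of the body (checksum stand-in)."""
    body = bytes([fill]) * (PAGE_SIZE - 4)
    return body + zlib.crc32(body).to_bytes(4, "big")


def page_is_valid(page):
    """True if the stored trailer matches the checksum of the page body."""
    return zlib.crc32(page[:-4]).to_bytes(4, "big") == page[-4:]


def torn_write(old, new, blocks_completed):
    """Simulate a crash after only `blocks_completed` 4 KB blocks of `new` reached disk."""
    cut = blocks_completed * FS_BLOCK_SIZE
    return new[:cut] + old[cut:]
```

With `old = make_page(0xAA)` and `new = make_page(0xBB)`, `torn_write(old, new, 2)` returns a page that fails `page_is_valid`, which is the torn-page case the doublewrite buffer is meant to repair.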

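If an operating system crash or storage subsystem failure occurs while a page is being written, InnoDB can find a good copy of the page in the doublewrite buffer during crash recovery. Writes to the doublewrite buffer are performed in large sequential chunks with a single fsync() system call. Even so, they add overhead to the engine and limit the overall throughput of the database system.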
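The recovery idea above can be sketched as a toy model. Assumptions: pages are byte strings with a CRC32 trailer standing in for InnoDB's checksum, the doublewrite area is a plain dict rather than InnoDB's fixed-size doublewrite files, and a crash is simulated by persisting only half of the page. The class and method names are hypothetical.

```python
import zlib
from dataclasses import dataclass, field


def seal(body):
    """Append a CRC32 trailer (stand-in for InnoDB's page checksum)."""
    return body + zlib.crc32(body).to_bytes(4, "big")


def is_valid(page):
    return zlib.crc32(page[:-4]).to_bytes(4, "big") == page[-4:]


@dataclass
class Storage:
    data_file: dict = field(default_factory=dict)    # page_no -> page bytes
    doublewrite: dict = field(default_factory=dict)  # page_no -> page bytes

    def flush(self, page_no, page, crash_mid_data_write=False):
        # Step 1: copy the page to the doublewrite area first
        # (in InnoDB, a batched sequential write followed by fsync()).
        self.doublewrite[page_no] = page
        # Step 2: write the page in place; a crash here can tear it.
        if crash_mid_data_write:
            old = self.data_file.get(page_no, bytes(len(page)))
            half = len(page) // 2
            self.data_file[page_no] = page[:half] + old[half:]
        else:
            self.data_file[page_no] = page

    def recover(self):
        """Restore torn data file pages from valid doublewrite copies."""
        repaired = []
        for page_no, copy in self.doublewrite.items():
            current = self.data_file.get(page_no)
            if (current is None or not is_valid(current)) and is_valid(copy):
                self.data_file[page_no] = copy
                repaired.append(page_no)
        return repaired
```

The ordering is the key design point: because the doublewrite copy is durable before the in-place write starts, at least one intact copy of every page exists at any crash point. That ordering is also what makes the extra write and fsync() unavoidable.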

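With Optimized Writes, the storage layer writes 16 KB blocks (pages) atomically. This provides torn-write protection, so InnoDB no longer needs the doublewrite buffer for torn page protection. With the doublewrite buffer disabled, the database engine reduces its write overhead, which can improve transaction throughput and latency. The underlying change is implemented using a capability of AWS Nitro instances (the hardware layer).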

How to use Optimized Writes

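Amazon RDS for MySQL 8.0.30 and higher and Amazon RDS for MariaDB 10.6.10 and higher support Optimized Writes. The feature is enabled by default on instances and Multi-AZ DB clusters that run a supported engine version and instance class. For the list of supported instance classes, refer to the Amazon RDS documentation.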

If needed, you can disable RDS Optimized Writes on a DB instance by setting the rds.optimized_writes parameter to OFF.
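If you manage parameter groups programmatically, the change above could look like the following Python sketch using the AWS SDK (boto3) `modify_db_parameter_group` call. Assumptions: the instance already uses a custom DB parameter group (default parameter groups can't be modified), the group name is your own, and the local check only accepts the two values mentioned in this post (AUTO and OFF). We use the `pending-reboot` apply method as a conservative choice; check the RDS documentation for whether this parameter requires a reboot.

```python
def optimized_writes_parameter(value="OFF"):
    """Build one Parameters entry for the RDS ModifyDBParameterGroup API."""
    # Only the two values discussed in this post are accepted here.
    if value not in ("AUTO", "OFF"):
        raise ValueError("expected 'AUTO' or 'OFF'")
    return {
        "ParameterName": "rds.optimized_writes",
        "ParameterValue": value,
        # Conservative choice: the change takes effect after the next reboot.
        "ApplyMethod": "pending-reboot",
    }


def disable_optimized_writes(parameter_group_name):
    """Set rds.optimized_writes=OFF in a custom DB parameter group (needs AWS credentials)."""
    import boto3  # imported lazily so the helper above works without the AWS SDK

    rds = boto3.client("rds")
    return rds.modify_db_parameter_group(
        DBParameterGroupName=parameter_group_name,
        Parameters=[optimized_writes_parameter("OFF")],
    )
```

For example, you could call `disable_optimized_writes("my-mysql80-custom-params")` (a hypothetical group name) and then reboot the instance that uses that group.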

For the current limitations of this feature, refer to the Amazon RDS documentation.

Efficient IOPS utilization

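Without Optimized Writes, the engine must perform additional page writes to the doublewrite buffer. These put extra I/O load on the instance and, depending on the instance class, storage, and database configuration, limit its total throughput. With Optimized Writes enabled, atomicity is guaranteed at the hardware layer, so the engine runs without the doublewrite buffer and avoids those extra writes. The engine can then use the available IOPS more efficiently to achieve higher throughput and better overall write performance.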

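Writes to the doublewrite buffer don't double the I/O overhead or the number of I/O operations, because they are performed in large sequential chunks with a single fsync() operation. However, the engine doesn't flush changes to the corresponding data files until the pages have been written to the doublewrite buffer. This slows down data flushing on write-intensive instances with highly concurrent workloads, where the overhead from the doublewrite buffer grows linearly with concurrency. Disabling the doublewrite buffer eliminates these extra write operations and can significantly improve database throughput.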
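The claim that the doublewrite buffer adds bytes but not proportionally more I/O operations can be illustrated with a simplified cost model. This sketch is an illustration under assumptions, not InnoDB's actual flushing algorithm: pages are staged to the doublewrite file in batches of `batch_pages` (64 is an arbitrary value), each batch costs one sequential write and one fsync(), and data file fsyncs are ignored.

```python
import math

PAGE_SIZE = 16 * 1024


def flush_cost(dirty_pages, doublewrite, batch_pages=64):
    """Simplified model of the write I/O needed to flush `dirty_pages` pages."""
    data_writes = dirty_pages              # one in-place write per page
    data_bytes = dirty_pages * PAGE_SIZE
    if not doublewrite:
        return {"write_ops": data_writes, "bytes": data_bytes, "dblwr_fsyncs": 0}
    batches = math.ceil(dirty_pages / batch_pages)
    return {
        # Plus one large sequential doublewrite write per batch.
        "write_ops": data_writes + batches,
        # Every page is written twice: doublewrite file, then data file.
        "bytes": data_bytes * 2,
        # Plus one fsync() per batch before its in-place writes may start.
        "dblwr_fsyncs": batches,
    }
```

For 1,000 dirty pages the model gives 1,016 write operations and 16 extra fsync() calls with the doublewrite buffer, versus 1,000 writes without it, while the bytes written double. The per-batch fsync() is also a wait point, because in-place writes for a batch can't start until it completes.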

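When this feature is enabled on a Multi-AZ instance (so the doublewrite buffer is disabled), the extra overhead of replicating doublewrite buffer writes to the secondary instance is also eliminated. As a result, workloads on Multi-AZ instances with Optimized Writes enabled get better write performance than on instances with the feature disabled.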

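Later in this post, we look at benchmarks that show the overall throughput difference, and the corresponding IOPS utilization, between instances with Optimized Writes enabled and disabled.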

Identify workloads that can benefit from Optimized Writes

Before choosing RDS Optimized Writes for a workload, check a few aspects that help you estimate the overall improvement you can expect at the database layer.

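We can monitor the number of pages written to the doublewrite buffer and the number of doublewrite operations performed. These show how much extra load the doublewrite buffer puts on the instance: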

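- innodb_dblwr_writes: the number of doublewrite operations performed

- innodb_dblwr_pages_written: the number of pages written for doublewrite operations

- innodb_data_fsyncs: the total number of fsync() operations performed so far

- innodb_data_pending_fsyncs: the current number of pending fsync() operations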
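One way to turn these counters into rates is to take two snapshots of `SHOW GLOBAL STATUS LIKE 'Innodb_d%'` (a pattern that matches all four variables) some seconds apart and compare them. This sketch assumes the snapshots are already fetched into dictionaries keyed by variable name, with values possibly returned as strings; the function name is hypothetical. Innodb_data_pending_fsyncs is a point-in-time value, so it is reported as-is rather than as a delta.

```python
def doublewrite_stats(before, after, seconds):
    """Summarize doublewrite activity between two SHOW GLOBAL STATUS snapshots."""

    def delta(name):
        # Status values may come back as strings; counters only increase.
        return int(after[name]) - int(before[name])

    writes = delta("Innodb_dblwr_writes")
    pages = delta("Innodb_dblwr_pages_written")
    return {
        "dblwr_writes_per_sec": writes / seconds,
        "dblwr_pages_per_sec": pages / seconds,
        # Average batch size: pages written per doublewrite operation.
        "pages_per_dblwr_write": pages / writes if writes else 0.0,
        "fsyncs_per_sec": delta("Innodb_data_fsyncs") / seconds,
        "pending_fsyncs_now": int(after["Innodb_data_pending_fsyncs"]),
    }
```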

我们可以通过使用

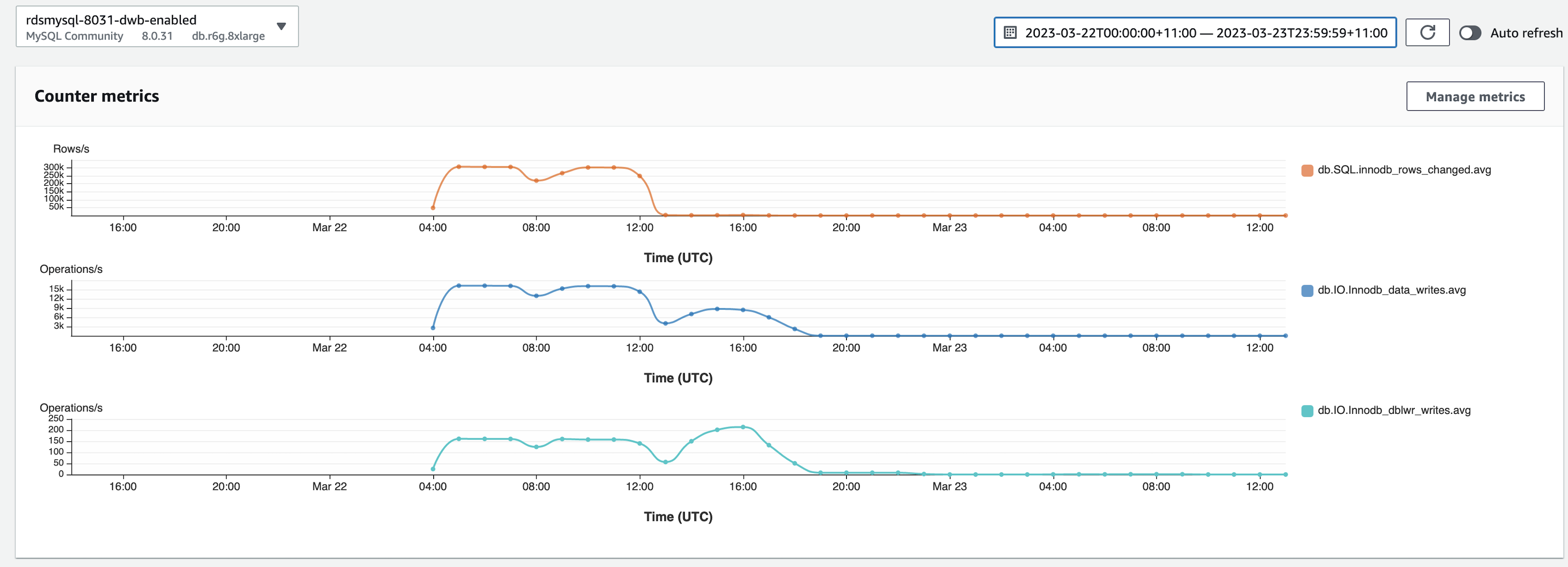

例如,下图显示了在禁用优化写入的实例(启用双写缓冲区的情况下运行的实例)上运行示例工作负载期间的指标。

innodb_rows_changes 、innodb_d

指标来衡量双写缓冲区的使用情况。

at

a_writes 和 innodb_dblwr_writes

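On Amazon RDS for MySQL (8.0), the doublewrite buffer is located outside the system tablespace, and its data is stored in multiple files in the data directory. After performance_schema is enabled and configured on the instance, we can query it to monitor I/O latency on the doublewrite files. This shows the number of writes to each file and the latency of those writes.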
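A query along these lines reads per-file write counts and latency from `performance_schema.file_summary_by_instance`. It assumes MySQL 8.0.20 or later, where doublewrite files carry a `.dblwr` suffix (for example `#ib_16384_0.dblwr`), and that file I/O instrumentation is enabled. Timer columns are in picoseconds, which the small Python helper (a hypothetical name) converts to microseconds.

```python
# Doublewrite file I/O from performance_schema (assumes MySQL 8.0.20+ file naming).
DBLWR_FILE_IO_QUERY = """
SELECT FILE_NAME, COUNT_WRITE, SUM_TIMER_WRITE, SUM_NUMBER_OF_BYTES_WRITE
FROM performance_schema.file_summary_by_instance
WHERE FILE_NAME LIKE '%.dblwr'
ORDER BY SUM_TIMER_WRITE DESC
"""

PS_PER_US = 1_000_000  # performance_schema timers are in picoseconds


def summarize_dblwr_files(rows):
    """Average write latency per doublewrite file.

    rows: iterable of (FILE_NAME, COUNT_WRITE, SUM_TIMER_WRITE, SUM_NUMBER_OF_BYTES_WRITE).
    """
    summary = []
    for file_name, count_write, sum_timer_write, bytes_written in rows:
        avg_us = sum_timer_write / count_write / PS_PER_US if count_write else 0.0
        summary.append({
            "file": file_name,
            "writes": count_write,
            "bytes_written": bytes_written,
            "avg_write_latency_us": avg_us,
        })
    return summary
```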

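You can also query performance_schema to check I/O wait times on the doublewrite buffer on an RDS for MySQL or RDS for MariaDB instance. Depending on the workload running on the instance, the doublewrite buffer can be among the wait events with the highest average latency, which indicates contention.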

From the query output, you can monitor the wait time of specific events and compare it with other top wait events on the instance.

In MySQL, look for longer wait times on the wait/io/file/innodb/innodb_dblwr_file or wait/synch/mutex/innodb/dblwr_mutex events.

In MariaDB, you can monitor the wait time of wait/synch/mutex/innodb/buf_dblwr_mutex instead.
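Similarly, here is a sketch for ranking the doublewrite-related events above against all other wait events, using `performance_schema.events_waits_summary_global_by_event_name`. Which of these events exist depends on the engine and version. The query excludes the `idle` event. The helper (a hypothetical name) expects rows of (EVENT_NAME, COUNT_STAR, AVG_TIMER_WAIT), with timers in picoseconds.

```python
WAIT_SUMMARY_QUERY = """
SELECT EVENT_NAME, COUNT_STAR, AVG_TIMER_WAIT
FROM performance_schema.events_waits_summary_global_by_event_name
WHERE COUNT_STAR > 0 AND EVENT_NAME <> 'idle'
ORDER BY AVG_TIMER_WAIT DESC
"""

# Doublewrite-related events mentioned in this post.
DBLWR_EVENTS = {
    "wait/io/file/innodb/innodb_dblwr_file",   # MySQL
    "wait/synch/mutex/innodb/dblwr_mutex",     # MySQL
    "wait/synch/mutex/innodb/buf_dblwr_mutex", # MariaDB
}


def rank_dblwr_events(rows):
    """Return (rank, event_name, avg_wait_us) for doublewrite events.

    rows: iterable of (EVENT_NAME, COUNT_STAR, AVG_TIMER_WAIT in picoseconds).
    Rank 1 is the event with the highest average wait among all rows.
    """
    ranked = sorted(rows, key=lambda row: row[2], reverse=True)
    return [
        (rank, name, avg_ps / 1_000_000)
        for rank, (name, _count, avg_ps) in enumerate(ranked, start=1)
        if name in DBLWR_EVENTS
    ]
```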

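Consider monitoring your instances with these metrics and queries.

In any case, we recommend comparing your application workload's performance on an instance with Optimized Writes enabled to better understand the achievable performance gain. You can measure the gain as the overall throughput the DB instance achieves, or as the number of write queries it processes within a specific period.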
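To compare instances by the number of write queries processed over a period, one rough proxy is the change in the `Com_insert`, `Com_update`, and `Com_delete` status counters between two snapshots. This is our assumption, not a method taken from the benchmark: these counters count statements rather than rows, and they ignore variants such as `Com_insert_select` or `Com_update_multi`. The helper names are hypothetical.

```python
WRITE_COUNTERS = ("Com_insert", "Com_update", "Com_delete")


def write_queries_per_second(before, after, seconds):
    """Write statements per second between two SHOW GLOBAL STATUS snapshots."""
    total = sum(int(after[name]) - int(before[name]) for name in WRITE_COUNTERS)
    return total / seconds


def percent_gain(baseline, candidate):
    """Relative improvement of `candidate` over `baseline`, in percent."""
    return (candidate - baseline) / baseline * 100
```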

Key aspects of Optimized Writes

In this section, we discuss some key aspects to consider when using Optimized Writes:

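- The feature benefits tables that use the InnoDB engine, because MySQL and MariaDB implement the doublewrite buffer for InnoDB. If the DB instance uses another storage engine, such as MEMORY or MyISAM, operations on those tables won't see a performance gain from Optimized Writes.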

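- Measure the write performance improvement from this feature by the overall write throughput the DB instance achieves over a specific period, not by comparing the performance of individual queries. Disabling the doublewrite buffer improves background flushing performance. InnoDB automatically adjusts its background flushing rate to the workload, and this feature helps flushing keep up with workload changes.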

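- This feature is designed to help applications that perform a large number of write operations on the DB instance. Read queries (SELECT) read pages directly from the data files and never read from the doublewrite buffer, which is used only during crash recovery. Therefore, disabling the doublewrite buffer has no effect on the performance of read-only queries.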

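- This feature avoids extra write operations. However, we don't recommend reducing the IOPS provisioned for the instance, because that can hurt overall performance: write operations, especially updates and deletes, can require reading pages from the corresponding data files. The database also still has to serve regular read queries (SELECT) to meet application demand.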

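- This feature helps increase the throughput of write operations on the database. To benefit from it, the application must be able to push more write operations per second. In addition, the workload shouldn't cause lock conflicts that lead to timeouts or deadlocks, because those ultimately reduce the application's overall throughput.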

Use cases for Optimized Writes

The following use cases are good candidates for RDS Optimized Writes:

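- Applications that serve a large number of concurrent write transactions. The feature also helps applications that expect sudden spikes in write traffic.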

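- Applications that need to scale write throughput without changing application code or schema. In many cases, Optimized Writes is a readily available solution before you implement longer-term plans, such as partitioning or scaling out, to improve write scalability. For applications with sharded databases, enabling the feature can also improve the throughput of each shard's DB instance.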

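- Enabling this feature on read replica instances can improve their write performance and help minimize replication lag. If the workload can make good use of parallel replication, consider enabling it as well to further reduce replication lag.

- Applications that use Multi-AZ instance and Multi-AZ DB cluster deployments can take advantage of this feature.

- Applications that frequently run data load operations (LOAD DATA LOCAL INFILE) or bulk inserts into tables can benefit from this feature.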

Performance improvements

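After evaluating the use cases and workload patterns against your application workload, we recommend benchmarking the write performance improvement that Optimized Writes achieves.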

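Consider running a quick benchmark to compare write performance between instances with Optimized Writes enabled and disabled. As discussed earlier in this post, the feature is enabled by default when you use a supported engine version, instance class, and parameters.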

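However, if needed, you can disable the feature by changing the rds.optimized_writes parameter to OFF (the default value is AUTO).

Recall that enabling Optimized Writes means the doublewrite buffer is disabled in the engine, and vice versa.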

For this benchmark, we launched two RDS for MySQL instances with the following configuration:

- Engine version: 8.0.31

- Instance class: db.r6g.8xlarge

- Storage type: io1

- Provisioned IOPS: 50,000

- Multi-AZ: enabled

- Automated backups: enabled

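On one of the instances, we disabled Optimized Writes by setting rds.optimized_writes to OFF in a custom parameter group. For more information, see the Amazon RDS documentation on parameter groups.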

当两个实例都处于活动状态时(一个实例使用默认启用优化写入的默认参数组运行,另一个实例使用明确禁用优化写入功能的自定义参数组),我们使用

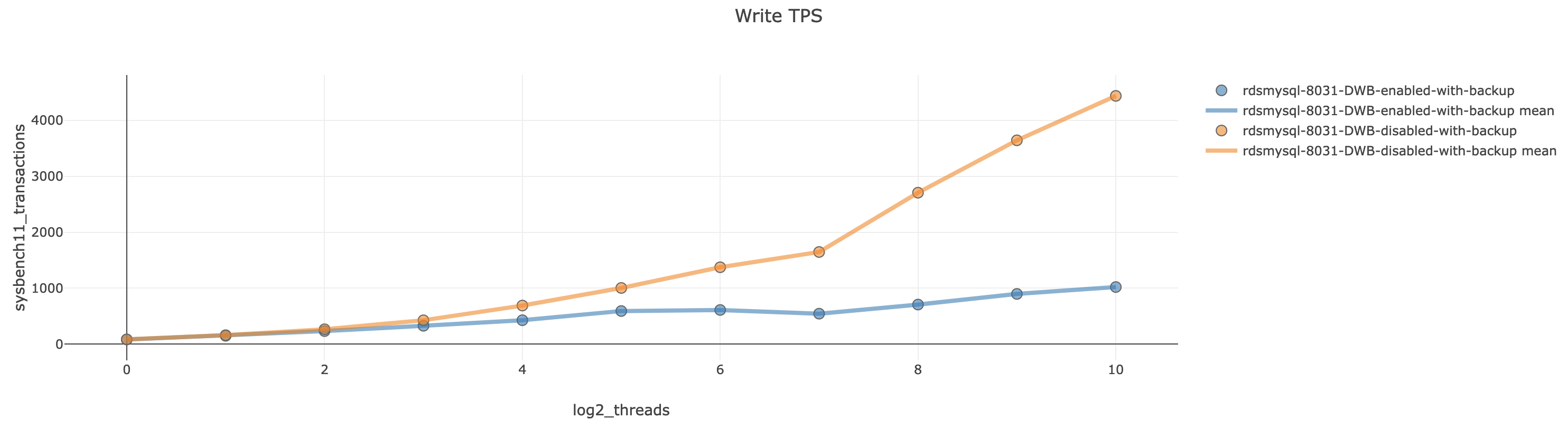

下图说明了 sysbench 在各自的线程数下产生的每秒事务吞吐量。此处,橙线表示在启用优化写入(禁用 DWB)的情况下运行的实例的吞吐量,蓝线表示禁用 “优化写入”(启用 DWB)的实例的吞吐量。

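As the graph shows, the throughput of the instance with Optimized Writes enabled rises considerably as the number of active threads running the workload grows, and it keeps increasing as more threads become active. The throughput of the instance with the feature disabled, on the other hand, stays flat without any noticeable change.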
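The post doesn't list the exact sysbench options used. To run a similar thread-count sweep yourself, the following Python sketch builds sysbench 1.x command lines. The test name (`oltp_write_only`), table count and size, duration, user, endpoint, and thread counts are all assumptions to adapt. The password option is deliberately left out.

```python
import shlex


def sysbench_command(host, threads, test="oltp_write_only", db="sbtest",
                     user="admin", tables=10, table_size=1_000_000, seconds=300):
    """Build a sysbench 1.x 'run' command for one thread count.

    All defaults are illustrative assumptions. Add --mysql-password (or
    another credential mechanism) yourself; it is intentionally omitted.
    """
    return [
        "sysbench", test,
        "--db-driver=mysql",
        f"--mysql-host={host}",
        f"--mysql-user={user}",
        f"--mysql-db={db}",
        f"--tables={tables}",
        f"--table-size={table_size}",
        f"--threads={threads}",
        f"--time={seconds}",
        "--report-interval=10",
        "run",
    ]


if __name__ == "__main__":
    # Hypothetical endpoint and thread counts for the sweep.
    for threads in (8, 16, 32, 64, 128):
        print(shlex.join(sysbench_command("db.example.internal", threads)))
```

After a matching `prepare` step, you could run each command against both instances and plot the transactions per second that sysbench reports to get a throughput-versus-threads comparison.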

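For demonstration purposes, we can run the same workload with binary logging disabled. Binary logs have many useful applications in MySQL, but they can introduce write amplification and contention on write-intensive workloads. In Amazon RDS for MySQL, binary logging is enabled when automated backups are enabled, to provide point-in-time recovery (PITR).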

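On production instances, we always recommend enabling automated backups (and therefore binary logging), which provide features such as automated snapshots, PITR, and read replica creation.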

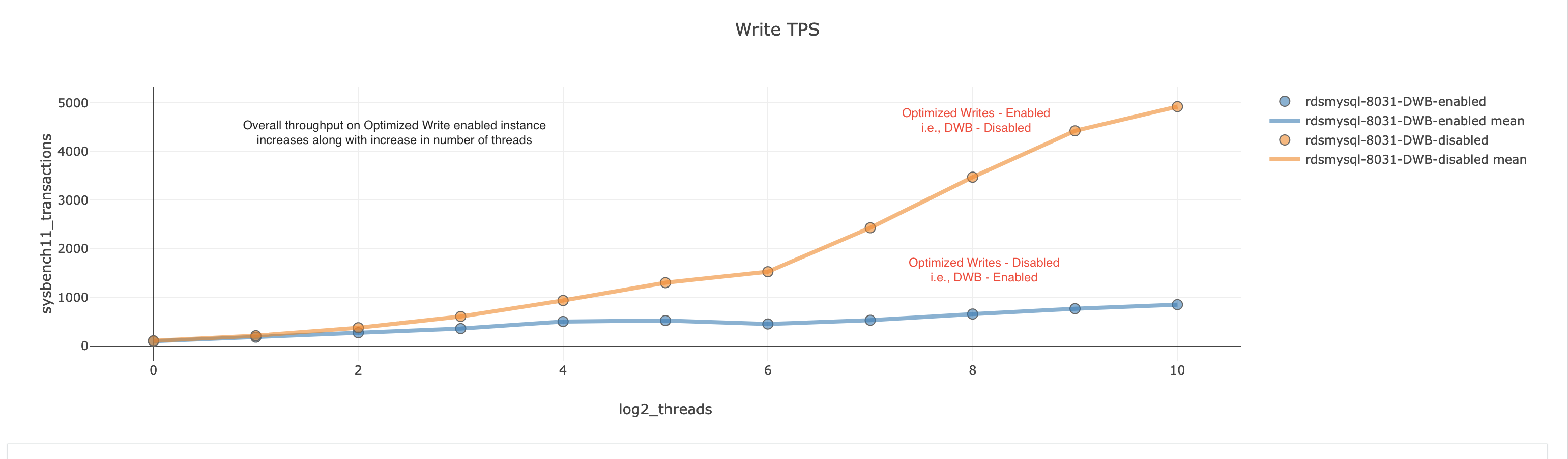

在下图中,我们可以看到,启用了优化写入功能的实例的总体吞吐量随着活动线程数量的增加而增加。

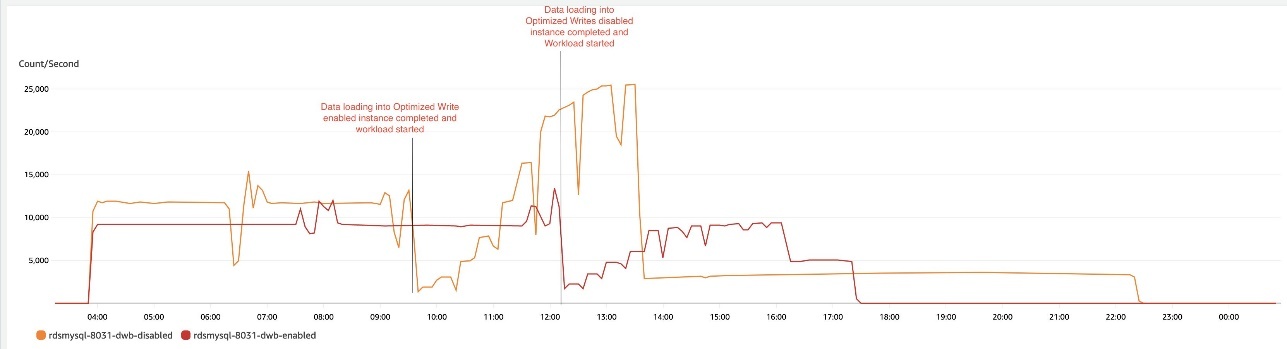

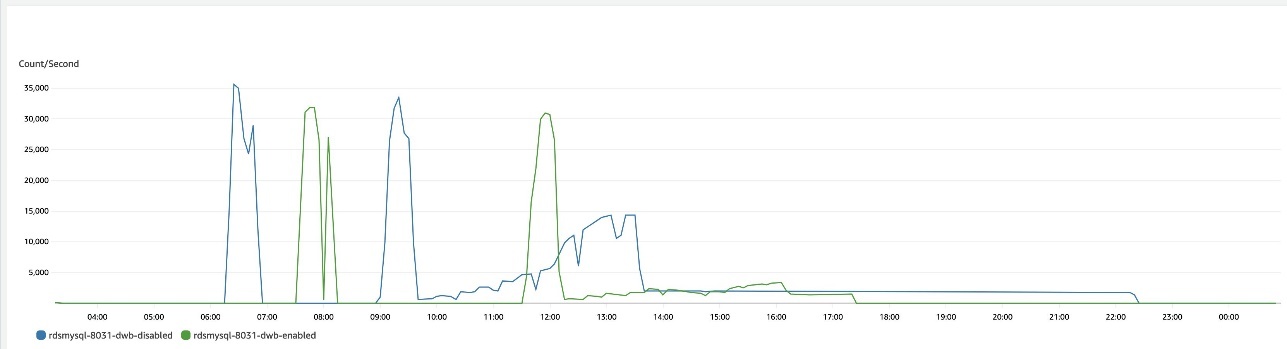

以下两张图说明了启用优化写入(禁用 DWB)和禁用优化写入(启用 DWB)的实例上的写入 IOPS 和读取 IOPS 利用率。

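The first graph shows that the instance with Optimized Writes enabled (DWB disabled) pushes more IOPS and completes the data load faster than the instance with Optimized Writes disabled. Because the DWB is disabled, the instance writes directly to the data files. This removes the extra I/O and fsync() calls and improves the engine's write latency, so the engine can process more writes and use more of its IOPS. The application can therefore use the IOPS provisioned on the RDS instance efficiently to achieve better throughput.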

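In the preceding example, you may also notice that the instance with Optimized Writes enabled used more read IOPS. When a database page must be modified and it isn't available in the cache, the engine has to read it from disk. In this example, the additional reads were needed to sustain the higher DML throughput.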
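Whether extra read IOPS come from cache misses can be checked with the `Innodb_buffer_pool_reads` (logical reads that had to go to disk) and `Innodb_buffer_pool_read_requests` (logical read requests) status counters. Here is a minimal sketch, assuming two `SHOW GLOBAL STATUS` snapshots stored as dictionaries; the function name is hypothetical.

```python
def buffer_pool_miss_ratio(before, after):
    """Fraction of logical page reads that went to disk between two snapshots."""
    disk_reads = int(after["Innodb_buffer_pool_reads"]) - int(before["Innodb_buffer_pool_reads"])
    requests = (int(after["Innodb_buffer_pool_read_requests"])
                - int(before["Innodb_buffer_pool_read_requests"]))
    # Avoid dividing by zero on an idle interval.
    return disk_reads / requests if requests else 0.0
```

A rising miss ratio together with rising DML throughput is consistent with the explanation above. A flat ratio would point elsewhere.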

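Overall, an instance with Optimized Writes enabled can process more transactions, which helps increase the application's write throughput. The size of the gain depends on the type of workload running on the DB instance.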

Best practices for running highly concurrent workloads beyond Optimized Writes

Consider the following best practices:

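- Follow the Amazon RDS operational guidelines and size your RDS instance class and storage appropriately to avoid resource contention. For example, an RDS instance running on db.r6g.large may hit maximum CPU utilization before it can handle the application's full workload, and therefore before it can fully benefit from this feature.

- Use appropriate storage and instance configurations to avoid resource limits caused by changes in the workload.

- Make sure your application workload is distributed across different database objects. If the schema concentrates activity on a limited set of objects, it can cause locking issues, which lead to deadlocks and timeouts and reduce application throughput. Make sure your database is configured to manage transactions efficiently and to handle deadlocks correctly within the InnoDB engine.

- For instances with Optimized Writes enabled, consider using Multi-AZ: the feature eliminates the overhead of writing doublewrite-buffer pages to the secondary (standby) instance, which improves write performance. A Multi-AZ instance with Optimized Writes enabled gains more write performance than a Multi-AZ instance with the feature disabled.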

Conclusion

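In this post, we showed how Optimized Writes can improve the performance of write-intensive workloads, and we discussed common use cases and best practices. Amazon RDS Optimized Writes is available on Amazon RDS for MySQL version 8.0.30 and higher and Amazon RDS for MariaDB version 10.6.10 and higher. We encourage you to try this feature with your MySQL and MariaDB workloads to see whether it improves write performance and helps scale your application's overall throughput.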

For full details about this feature, refer to the Amazon RDS documentation.

About the author

Chelluru Vidyadhar is a Database Engineer on the Amazon RDS team at Amazon Web Services.

