
AWS releases Palace, open-source software for cloud-based electromagnetics simulation of quantum computing hardware

Today, we are introducing P

We are releasing Palace as an open-source project

Why did we build Palace?

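Computational modeling often forces scientists and engineers to compromise among model fidelity, wall-clock time, and computing resources. Recent advances in cloud-based HPC have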

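Palace uses scalable algorithms and implementations from the scientific computing community and supports recent advances in computing infrastructure to deliver state-of-the-art performance. On AWS, this includes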

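Finally, we built Palace because, although there are many high-performance open-source tools for a broad range of applications in computational physics, few open-source solutions exist for massively parallel, finite element-based computational electromagnetics. Palace supports a variety of simulation types: eigenmode analysis, frequency-domain and time-domain driven simulations, and electrostatic and magnetostatic simulations for lumped parameter extraction. Because it is open source, developers who want to add new features for industrially relevant problems can also extend it freely. Much of Palace is made possible by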

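Palace complements the ecosystem of open-source software supporting cloud-based numerical simulation and HPC. It enables custom solutions and cloud infrastructure to be built for simulation services, and it gives you more choice than existing alternatives.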

Electromagnetics simulation examples for quantum hardware design

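In this section, we present two example applications that demonstrate some of the key features of Palace and its performance as a numerical simulation tool. For all applications shown, we configured a cloud-based HPC cluster to compile and run Palace with GCC v11.3.0, OpenMPI v4.1.4, and EFA v1.21.0 on the Amazon Linux 2 operating system. In each case, we used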

Transmon qubit and readout resonator

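The first example addresses a common problem in superconducting quantum device design: simulating a single transmon qubit coupled to a readout resonator, with a terminated coplanar waveguide (CPW) transmission line for input and output. The superconducting metal layers are modeled as infinitely thin, perfectly conducting surfaces on top of a c-plane sapphire substrate.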

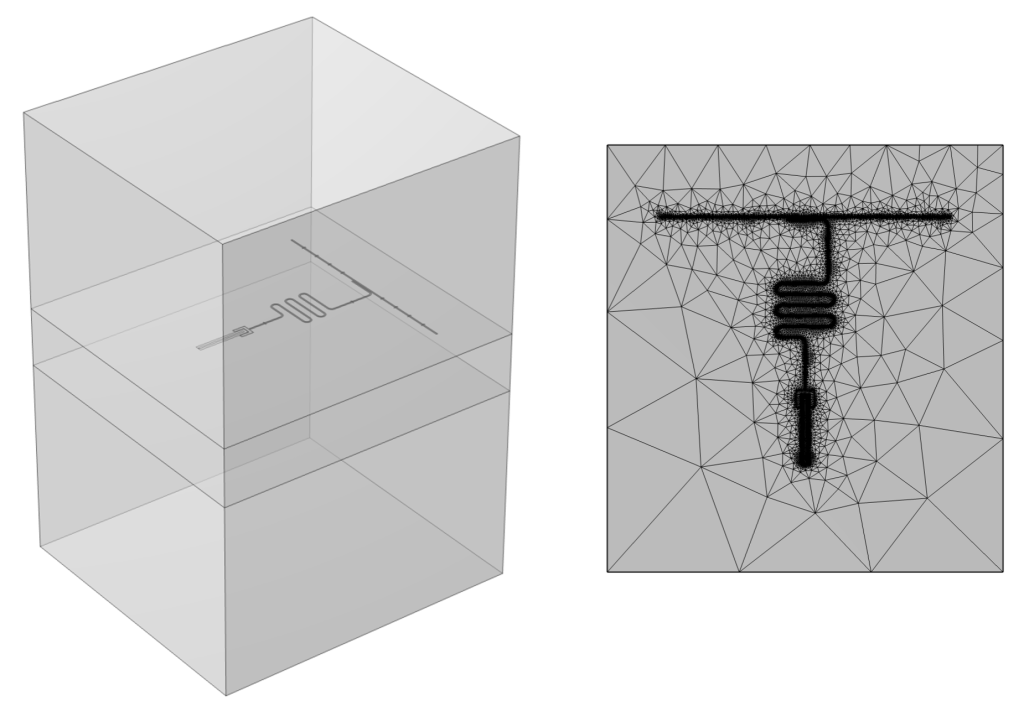

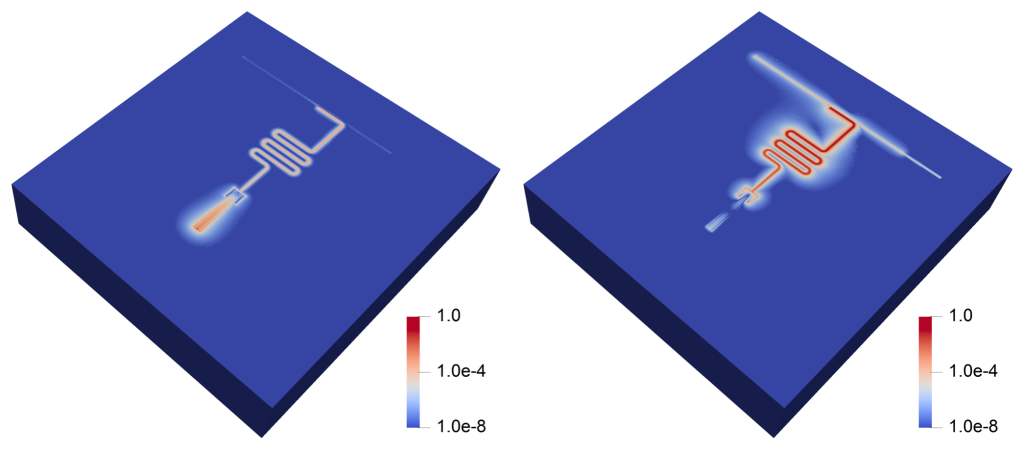

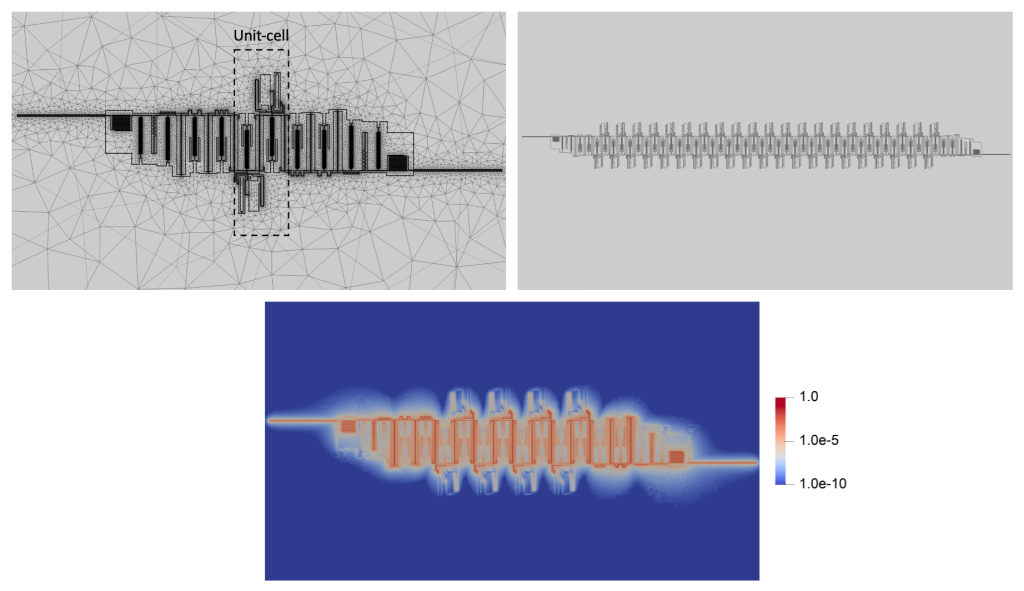

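Eigenmode analysis is used to compute the linearized transmon and readout resonator mode frequencies, their decay rates, and the corresponding electric and magnetic field modes. Two finite element models are considered: a fine model with 246.2 million degrees of freedom, and a coarse model with 15.5 million degrees of freedom whose computed frequencies differ from the fine model's by 1%. For interested readers, the governing Maxwell's equations are discretized on a tetrahedral mesh using third-order H(curl)-conforming Nédélec elements in the fine model and analogous first-order elements in the coarse model. Figure 1 shows the 3D geometry of the transmon model together with a view of the mesh used for the simulation. Figure 2 shows a visualization of the magnetic field energy density for each of the two computed eigenmodes.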
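Element order is one reason a third-order model can be far larger than a first-order one. The sketch below counts the global unknowns of an H(curl)-conforming Nédélec space from the numbers of edges, faces, and cells in a tetrahedral mesh. It assumes first-kind Nédélec elements, where an order-p space carries p unknowns per edge, p(p-1) per face, and p(p-1)(p-2)/2 per cell interior. The helper name `nedelec_dofs` and the mesh counts are hypothetical. The two models in this post may also use different meshes.

```python
def nedelec_dofs(order: int, n_edges: int, n_faces: int, n_cells: int) -> int:
    """Global unknowns of an order-`order` first-kind Nedelec space on a tetrahedral mesh.

    Assumed layout: `order` DOFs per edge, order*(order-1) per face,
    and order*(order-1)*(order-2)/2 per cell interior.
    """
    if order < 1:
        raise ValueError("order must be at least 1")
    per_edge = order
    per_face = order * (order - 1)
    per_cell = order * (order - 1) * (order - 2) // 2
    return per_edge * n_edges + per_face * n_faces + per_cell * n_cells


# A single tetrahedron has 6 edges, 4 faces, and 1 cell.
print(nedelec_dofs(1, 6, 4, 1))  # 6
print(nedelec_dofs(3, 6, 4, 1))  # 45

# Hypothetical large mesh: 1M vertices, 7M edges, 12M faces, 6M tetrahedra
# (chosen so that V - E + F - T = 0).
edges, faces, cells = 7_000_000, 12_000_000, 6_000_000
first = nedelec_dofs(1, edges, faces, cells)  # 7,000,000
third = nedelec_dofs(3, edges, faces, cells)  # 111,000,000
print(f"third/first order unknowns: {third / first:.1f}x")  # 15.9x
```

On this hypothetical mesh, moving from first to third order multiplies the unknown count by about 16 without refining a single element. This shows how strongly element order drives the fidelity versus cost trade-off.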

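Figure 1: 3D simulation model of the transmon qubit and readout resonator geometry (left). The right side shows a view of the surface mesh used for the discretization.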

Figure 2:

Using P

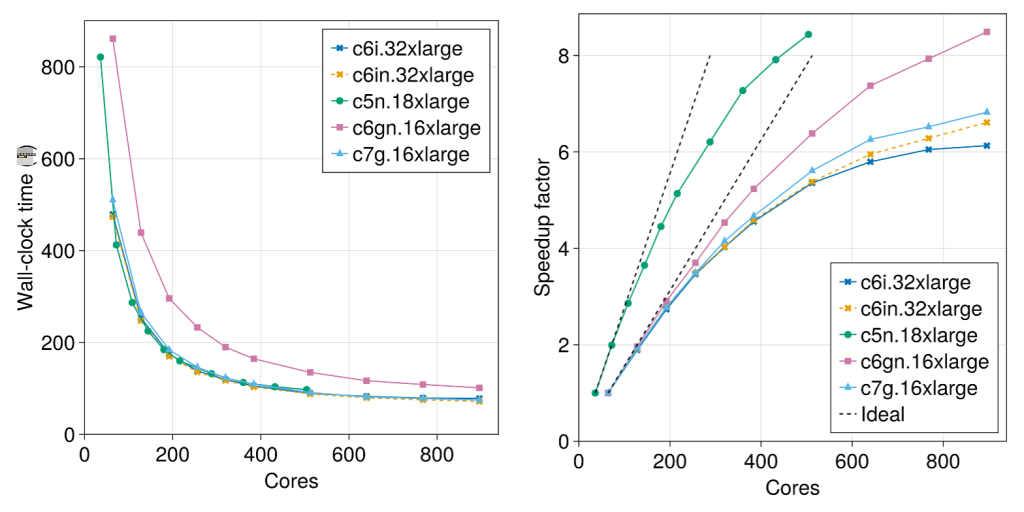

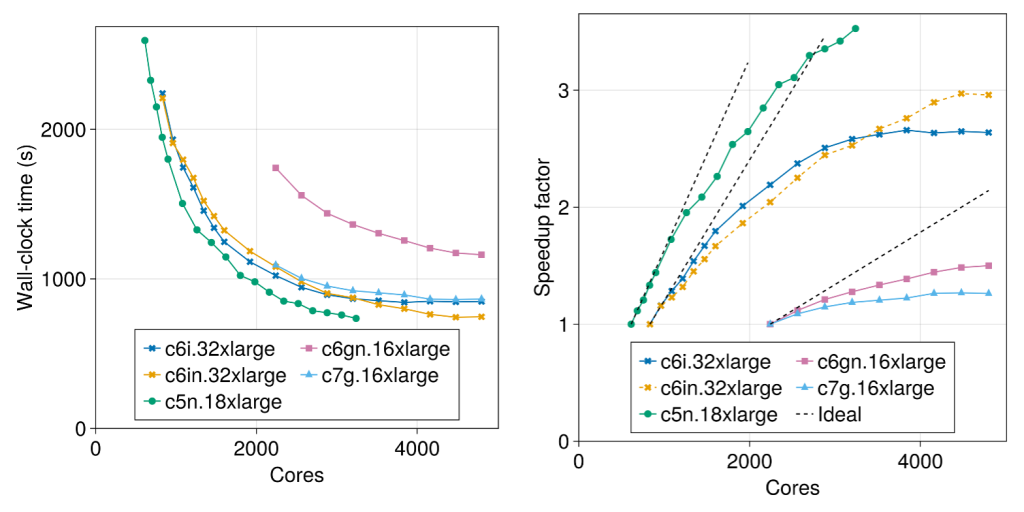

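For each of the two models, we scaled up the number of cores used for the simulation to study how Palace scales on AWS across a range of EC2 instance types. Figure 3 plots the simulation wall-clock time and the computed speedup factor for the coarse model, and Figure 4 plots the same quantities for the high-fidelity fine model. Taking advantage of EC2's scalability, we bring the simulation times for the coarse and fine models down to about 1.5 minutes and 12 minutes, respectively. Note also that the c7g.16xlarge instance type, powered by the latest-generation AWS Graviton3 processor, improves on the previous-generation c6gn.16xlarge and generally matches the performance of the latest Intel-based instance types.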
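Figures 3 and 4 report a speedup factor. The post does not define it, so the sketch below assumes the conventional definition: wall-clock time at the smallest core count divided by wall-clock time at N cores. It also derives parallel efficiency, which is 1.0 for ideal linear scaling. The function name and the timings are hypothetical placeholders, not the measurements behind the figures.

```python
def strong_scaling(cores: list[int], wall_times: list[float]) -> list[tuple[int, float, float]]:
    """Speedup and parallel efficiency relative to the smallest core count.

    speedup(N)    = T(N0) / T(N)
    efficiency(N) = speedup(N) * N0 / N   (1.0 means ideal linear scaling)
    """
    if not cores or len(cores) != len(wall_times):
        raise ValueError("need matching, non-empty lists")
    pairs = sorted(zip(cores, wall_times))
    n0, t0 = pairs[0]  # baseline: the smallest core count
    results = []
    for n, t in pairs:
        speedup = t0 / t
        efficiency = speedup * n0 / n
        results.append((n, speedup, efficiency))
    return results


# Hypothetical timings in seconds; NOT the data plotted in Figures 3 and 4.
cores = [64, 128, 256, 512]
times = [800.0, 410.0, 215.0, 120.0]
for n, s, e in strong_scaling(cores, times):
    print(f"{n:5d} cores  speedup {s:5.2f}  efficiency {e:6.1%}")
```

Reporting efficiency alongside speedup makes it easier to see where extra instances stop paying off.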

Figure 3: Simulation wall-clock time and speedup factor for the coarse transmon qubit example model with 15.5 million degrees of freedom.

Figure 4: Simulation wall-clock time and speedup factor for the fine transmon qubit example model with 246.2 million degrees of freedom.

Superconducting metamaterial waveguide

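The second example of Palace's capabilities and performance involves simulating a superconducting metamaterial waveguide based on a chain of lumped-element microwave resonators. The model was built to predict Zhang et al., Science 379 (2023)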

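We consider models of increasing complexity. They start from a single unit cell with 242.2 million degrees of freedom (see Figure 5 below) and grow to 21 unit cells with 1.4 billion degrees of freedom. The difficulty in simulating this device comes from the wide range of length scales in its geometric features: trace widths are 2 μm, while the total model length is 2 cm. The number of EC2 instances used for each simulation grows with the number of metamaterial unit cells so that the number of degrees of freedom per processor stays constant.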
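Keeping the load per processor fixed is a weak-scaling setup, and sizing the cluster for it takes only simple arithmetic. The sketch below makes two assumptions. First, each c6gn.16xlarge instance has 64 cores, which is consistent with the 200 instances and 12,800 cores quoted for the largest case. Second, the per-core target is back-computed from that case (1.4 billion unknowns on 12,800 cores). The helper name is hypothetical, and the target is not a Palace setting.

```python
import math


def cluster_size(total_dofs: int, dofs_per_core: int, cores_per_instance: int) -> tuple[int, int]:
    """Cores and instances needed to hold the load at `dofs_per_core` (weak scaling)."""
    cores = math.ceil(total_dofs / dofs_per_core)
    instances = math.ceil(cores / cores_per_instance)
    return cores, instances


# Assumption: 64 cores per c6gn.16xlarge (200 instances = 12,800 cores).
CORES_PER_C6GN_16XL = 64
# Per-core target back-computed from the 21-unit-cell case.
target = 1_400_000_000 // 12_800
print(target)  # 109375
print(cluster_size(1_400_000_000, target, CORES_PER_C6GN_16XL))  # (12800, 200)
```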

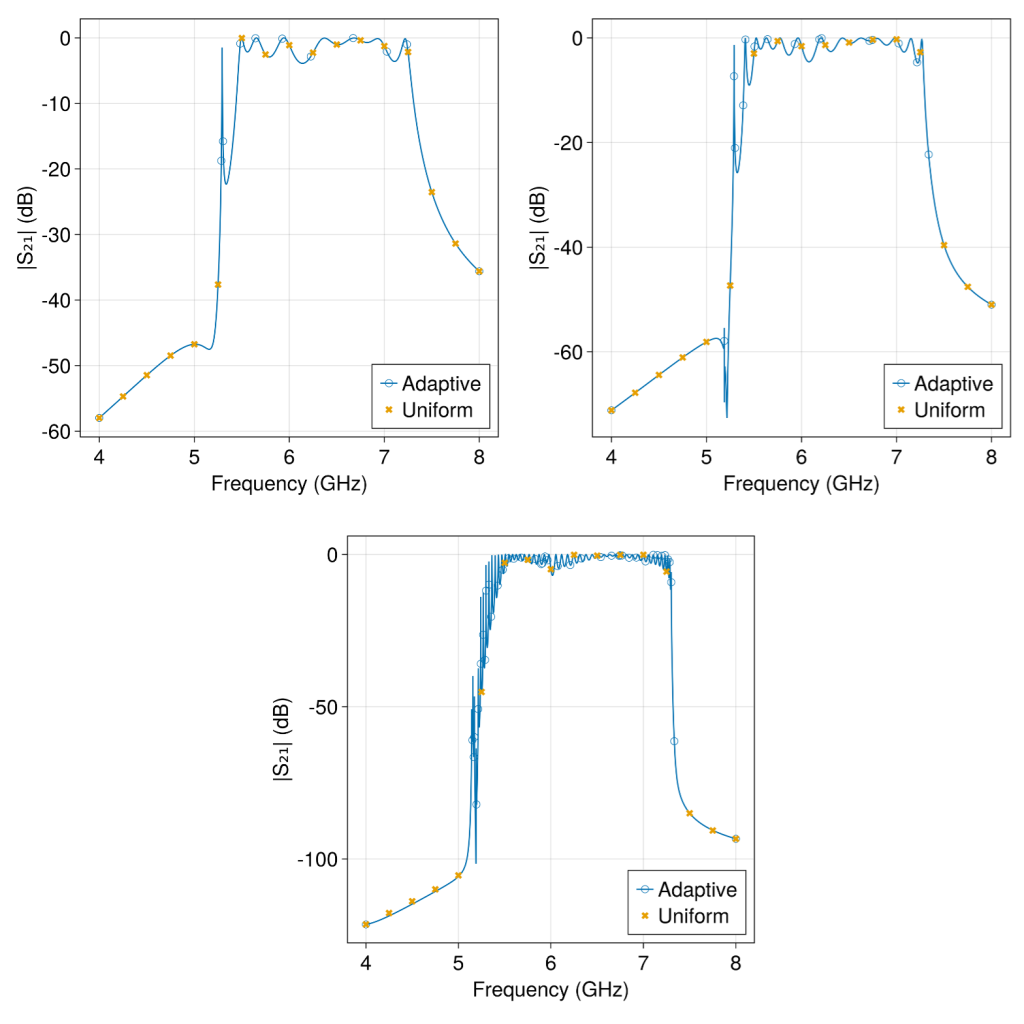

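Figure 5 shows the metamaterial waveguide geometries for the 1-, 4-, and 21-unit-cell simulation cases. Figure 6 plots the computed filter response for each case. The frequency response becomes more complex as the number of unit-cell repetitions increases. The solution computed with the adaptive fast frequency sweep algorithm is checked against solutions at several uniformly sampled frequencies, and the two agree well across the entire band.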
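One simple way to quantify this kind of agreement is to interpolate the densely sampled adaptive-sweep curve onto the uniformly spaced check frequencies and report the worst-case difference in dB. The helper below is a hypothetical post-processing step, not part of Palace. The `fake_s21_db` curve is a synthetic placeholder standing in for real solver output.

```python
import numpy as np


def max_deviation_db(f_dense, s21_dense_db, f_check, s21_check_db) -> float:
    """Worst-case |difference| in dB between a densely sampled sweep (linearly
    interpolated onto the check frequencies) and independently solved check points."""
    on_check = np.interp(f_check, f_dense, s21_dense_db)
    return float(np.max(np.abs(on_check - np.asarray(s21_check_db))))


def fake_s21_db(f):
    """Synthetic placeholder response in dB (NOT Palace output)."""
    return -3.0 * np.cos(f) ** 2


# Dense 4001-point sweep over 4-8 GHz and 17 uniformly spaced check frequencies.
f_dense = np.linspace(4.0, 8.0, 4001)
f_check = np.linspace(4.0, 8.0, 17)
print(max_deviation_db(f_dense, fake_s21_db(f_dense), f_check, fake_s21_db(f_check)))
```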

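Figure 5: The 1-, 4-, and 21-unit-cell repeated models used for the metamaterial waveguide simulations, with designed tapers at both ends. The 4-unit-cell model (bottom) visualizes the electric field energy density of the solution computed by Palace at 6 GHz, normalized by its maximum value over the entire computational domain.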

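Figure 6: Simulated transmission from 4 to 8 GHz for the 1- (top left), 4- (top right), and 21-unit-cell repeated models. Open circles mark the frequencies sampled automatically by Palace's adaptive fast frequency sweep algorithm. Frequency responses computed at several uniformly spaced frequencies are also plotted to demonstrate the accuracy of the adaptive fast frequency sweep.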

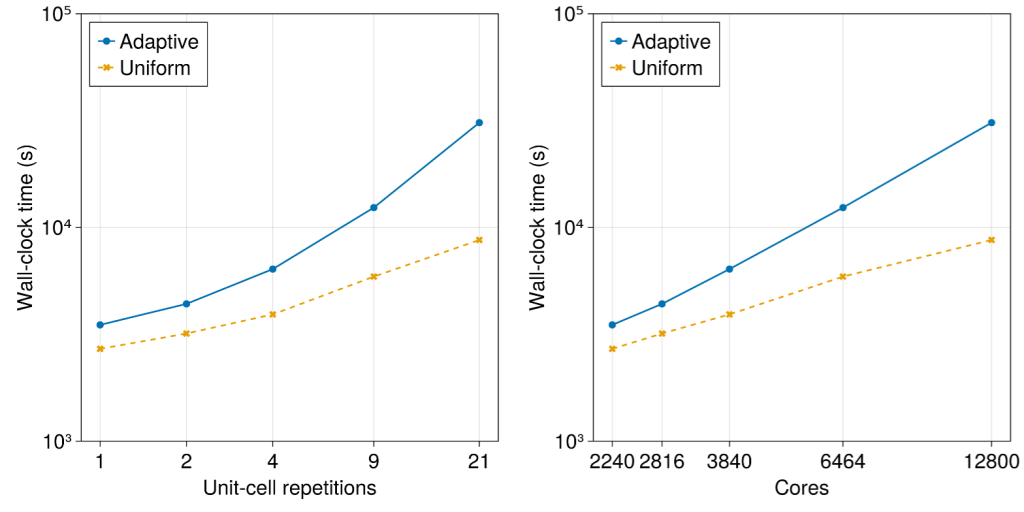

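Figure 7 plots the wall-clock time needed to run each simulation on an increasing number of cores as the models grow more complex. All models are simulated on c6gn.16xlarge instances, and the largest case uses 200 instances, or 12,800 cores. The wall-clock simulation time is longer with the adaptive fast frequency sweep. This is because the uniform sweep provides the frequency response at only 17 sample frequencies, while the adaptive fast sweep delivers much higher resolution with 4001 points. For the 21-unit-cell model, a uniform frequency sweep would take about 27 days to reach the same fine 1 MHz resolution if each frequency point were sampled sequentially.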
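The resolution figures above follow directly from the 4 to 8 GHz band shown in Figure 6, assuming both band edges are included among the sample points. Dividing the 27-day estimate by 4001 points also gives the average solve time per frequency that the estimate implies. That per-point figure is derived here and is not reported in the post. The function names are hypothetical.

```python
BAND_GHZ = (4.0, 8.0)  # band shown in Figure 6


def spacing_mhz(n_points: int, band_ghz=BAND_GHZ) -> float:
    """Uniform spacing in MHz when n_points include both band edges."""
    lo, hi = band_ghz
    return (hi - lo) * 1000.0 / (n_points - 1)


def implied_minutes_per_point(total_days: float, n_points: int) -> float:
    """Average solve time per frequency implied by a sequential-sweep estimate."""
    return total_days * 24 * 60 / n_points


print(spacing_mhz(4001))  # 1.0
print(spacing_mhz(17))    # 250.0
print(f"{implied_minutes_per_point(27, 4001):.1f} min per point")  # 9.7 min per point
```

In other words, the 17-point uniform sweep resolves the band only every 250 MHz, and matching the 1 MHz grid point by point would mean thousands of roughly 10-minute solves.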

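Figure 7: Simulation wall-clock time for the metamaterial waveguide example as the number of unit-cell repetitions, and correspondingly the number of cores used, increases.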

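One final note: the number of degrees of freedom per core stays roughly constant, yet the simulation wall-clock time still grows with model complexity. The reason is that the linear system assembled at each frequency becomes harder to solve, so the linear solver needs more iterations to converge. Likewise, as the number of unit cells in the model grows, the adaptive frequency sweep needs more frequency samples, and therefore more wall-clock time, to maintain the prescribed error tolerance.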
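A toy setting shows how response complexity drives sample count. The sketch below is a generic greedy adaptive-sampling loop on a synthetic curve, not Palace's adaptive fast frequency sweep. It keeps adding the candidate frequency where a piecewise-linear surrogate is worst until the error falls below a tolerance. The Lorentzian-peak response, the tolerance, and the 4001-point candidate grid are assumptions chosen for illustration. The error is also measured against the exact toy function, which a real solver cannot afford to evaluate.

```python
import numpy as np


def toy_response(f, centers, width=0.05):
    """Synthetic response: a sum of unit-height Lorentzian peaks (illustrative only)."""
    f = np.asarray(f, dtype=float)
    out = np.zeros_like(f)
    for c in centers:
        out += 1.0 / (1.0 + ((f - c) / width) ** 2)
    return out


def greedy_sweep(func, f_min, f_max, tol, n_candidates=4001, max_samples=1000):
    """Add the candidate frequency where a piecewise-linear surrogate is worst,
    until the worst-case error is at most `tol`.

    Error is measured against the exact toy function (an oracle); a practical
    adaptive sweep has to rely on an error *estimate* instead.
    """
    candidates = np.linspace(f_min, f_max, n_candidates)
    exact = func(candidates)
    chosen = [0, n_candidates - 1]  # start from the two band edges
    while len(chosen) < max_samples:
        idx = np.sort(np.array(chosen))
        surrogate = np.interp(candidates, candidates[idx], exact[idx])
        err = np.abs(surrogate - exact)
        worst = int(np.argmax(err))
        if err[worst] <= tol:
            break
        chosen.append(worst)
    return candidates[np.sort(np.array(chosen))]


simple_band = greedy_sweep(lambda f: toy_response(f, [6.0]), 4.0, 8.0, tol=0.02)
busy_band = greedy_sweep(lambda f: toy_response(f, [5.0, 5.5, 6.0, 6.5, 7.0]), 4.0, 8.0, tol=0.02)
print(len(simple_band), len(busy_band))
```

At the same tolerance, the five-peak response needs considerably more samples than the single-peak one. This mirrors, in a much simpler form, the trend described above as unit cells are added.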

Conclusion and next steps

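This blog post introduced Palace, a newly released open-source finite element code for computational electromagnetics simulation. Palace is licensed under the Apache 2.0 license and is free for anyone in the broader numerical simulation and HPC community to use. Additional information can be found in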

We also presented results from example applications running Palace on a range of EC2 instance types and core counts. The easiest way to get started with Palace on AWS is to use AWS ParallelClust
