
Automate benchmark tests for Amazon Aurora PostgreSQL: Part 2

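This post is

To recap, optimizing your database is an important activity for both new and existing application workloads. You need to consider cost, operations, performance, security, and reliability, and benchmarking helps you address these concerns. Using

In this post, we provide you with a solution for automating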

Solution overview

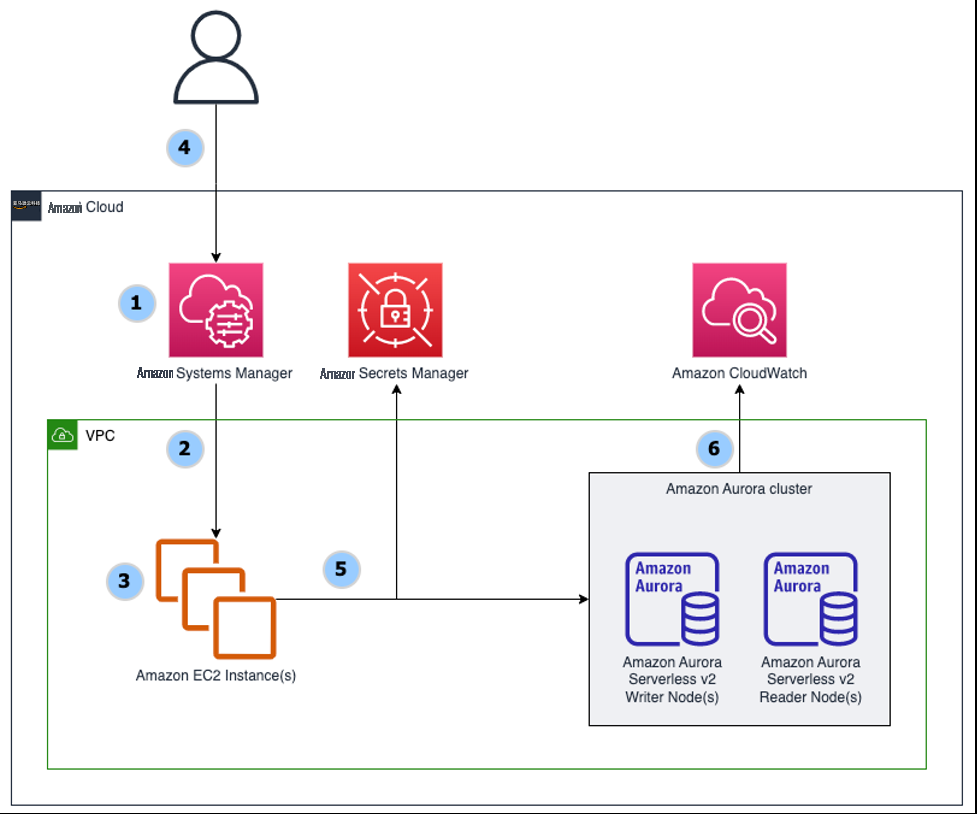

The solution provides benchmarking across

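- The CloudFormation template deploys an AWS Systems Manager document (SSM document).

- An Amazon EC2 launch template is created that defines the Amazon Linux 2 image and configuration settings. Instances are grouped by tag into "read" or "write" categories.

- Amazon EC2 instances are configured using the launch template and an Amazon EC2 Auto Scaling group.

- The user starts the benchmark by selecting the Systems Manager document, using Systems Manager Run Command, and specifying the tags.

- The Amazon EC2 instances receive the command, retrieve the database secret, and run the benchmark against the user's database for the specified duration.

- Amazon CloudWatch and Performance Insights capture the database activity.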

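Prerequisites

For this walkthrough, you should have the following prerequisites:

- An AWS account

- An Amazon Virtual Private Cloud (Amazon VPC) with three private subnets

- An EC2 key pair

- An Aurora PostgreSQL Serverless v2 database cluster, version 13.7 or later

- An AWS Secrets Manager secret

- EC2 and RDS security groups

- AWS Systems Manager configured to perform actions on your instances

- A psql client that can connect to your Aurora cluster

- Familiarity with PostgreSQL, Amazon Aurora, Amazon VPC, Amazon EC2, and IAM

- The database schema defined in Part 1 of this post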

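Create the database schema

To demonstrate how to customize the benchmark for your own schema and data access patterns, we use three tables and three sequences instead of the default pgbench TPC-B benchmark. Follow the steps in Part 1 of this post to create the schema.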

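Deploy the solution

You can deploy the solution by using the CloudFormation template provided in this post. The solution creates the following resources in your account:

- EC2 instance launch template

- IAM instance profile

- EC2 Auto Scaling group

- IAM role

- Systems Manager document (SSM document)

Choose Launch Stack to deploy the CloudFormation template in the us-east-1 Region: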


或者,你可以手动创建堆栈:

- 在 亚马逊云科技 CloudFormation 控制台上,选择 创建堆栈 。

-

输入堆栈的

亚马逊 Simple Storage Servic e (亚马逊 S3)位置: -

选择 “

下一步

” 。

输入堆栈名称。记下堆栈名称,稍后在运行 Systems Manager 命令文档时将需要它。

-

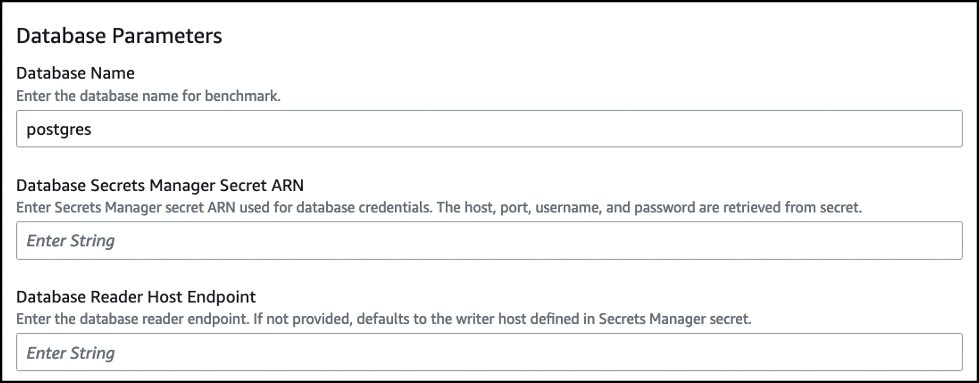

提供数据库连接的参数:

- 数据库名称 — 输入 PostgreSQL 数据库的名称。

-

数据库密钥 ARN

— 输入您用于数据库

的 亚马逊云科技 Secrets Manager 密钥 ARN。主机、端口、用户名和密码是在基准测试运行时从密钥中检索的。 -

数据库读取器主机端点

-输入数据库读取器端点。这是一个可选参数,如果未提供,则默认为 Secrets Manager 密钥中定义的编写器主机。

-

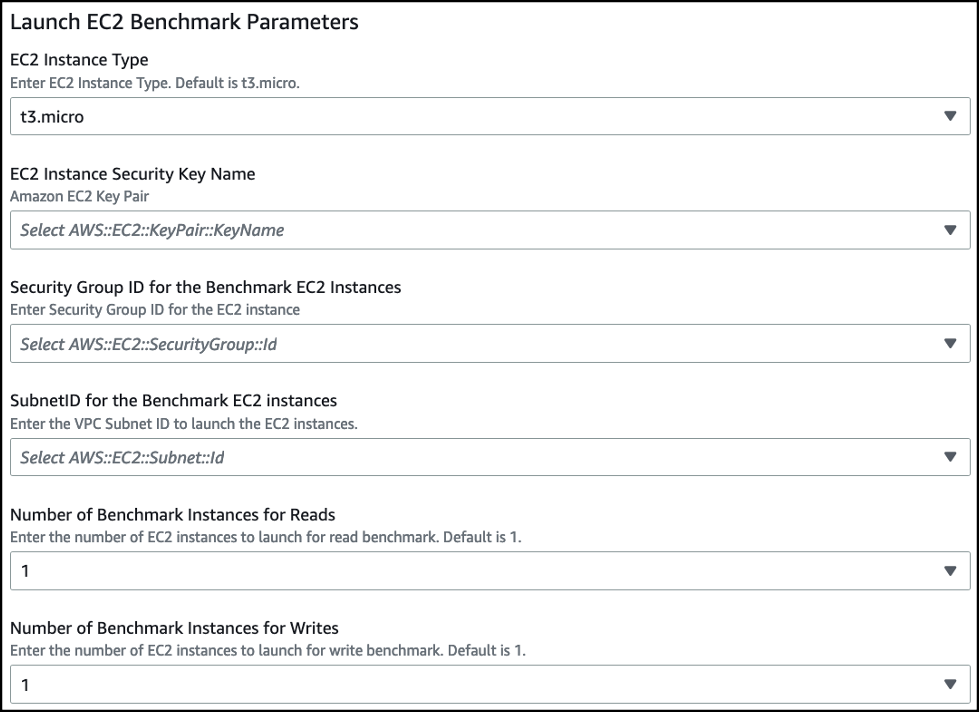

提供 EC2 实例启动的参数:

- EC2 实例类型 — 输入运行基准测试的 EC2 实例类型。默认为 t3.micro。

- EC2 实例安全密钥名称 — 选择您的 EC2 安全密钥名称。

- 基准 EC2 实例的安全组 ID — 选择要分配给 EC2 实例的安全组。安全组必须提供 EC2 实例和您的数据库之间的访问权限。

- 基准 EC2 实例的 subnetID — 选择启动实例的 VPC 中的子网 ID。子网必须有数据库的网络路径。

- 用于读取的基准实例 数 — 在基准测试中生成读取交易的 EC2 实例数量。

-

用于写入的基准实例

数 — 在基准测试中生成写入交易的 EC2 实例数量。

-

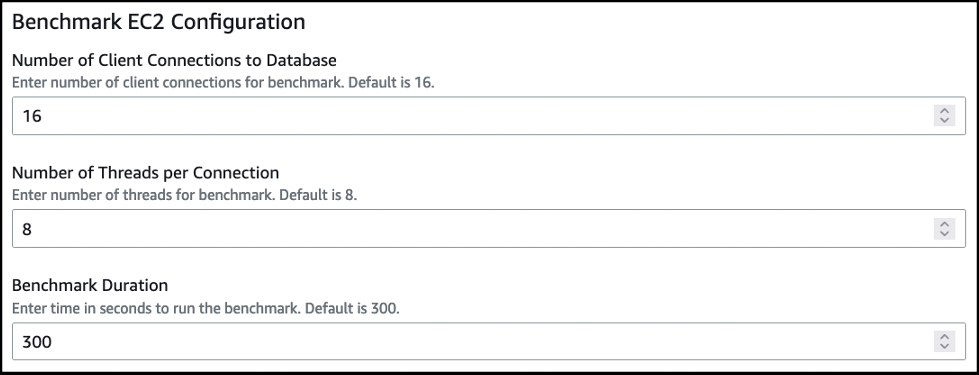

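Provide the pgbench parameters that simulate multiple clients reading from and writing to the PostgreSQL database:

- Number of client connections to the database: The number of client connections that each reader or writer EC2 instance opens to the PostgreSQL database.

- Number of threads per connection: The number of threads per connection that run against the PostgreSQL database.

- Benchmark duration: How long the benchmark runs, in seconds.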

- 指定所有参数后,选择 Nex t 继续。

- 在 “ 配置堆栈选项 ” 页面上,选择 “ 下一步 ” 继续。

-

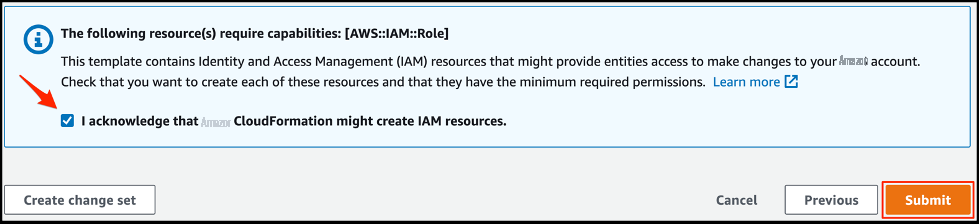

在

审核

页面上,选择

我承认 亚马逊云科技 CloudFormation 可能会创建 IAM 资源

, 然后选择 提交。

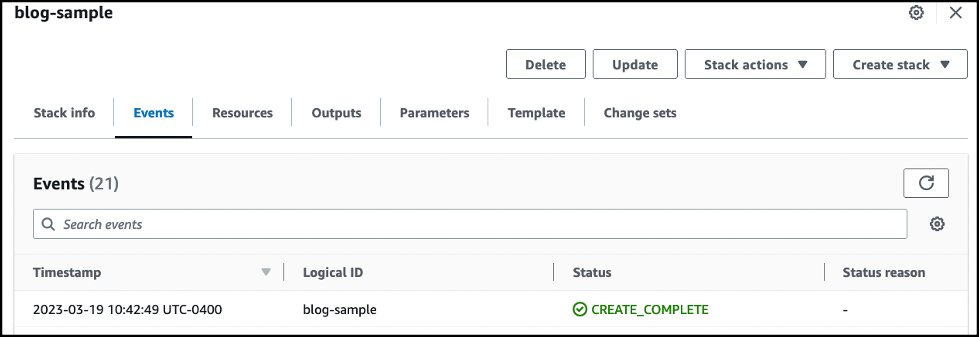

在 亚马逊云科技 CloudFormation

事件

选项卡上,等待大约 5 分钟,直到看到 C

在 亚马逊云科技 CloudFormation

事件

选项卡上,等待大约 5 分钟,直到看到 C

REATE_COMPLETE 状态。正确的事件是逻辑 ID 与 CloudFormation 模板名称相匹配的事件。

-

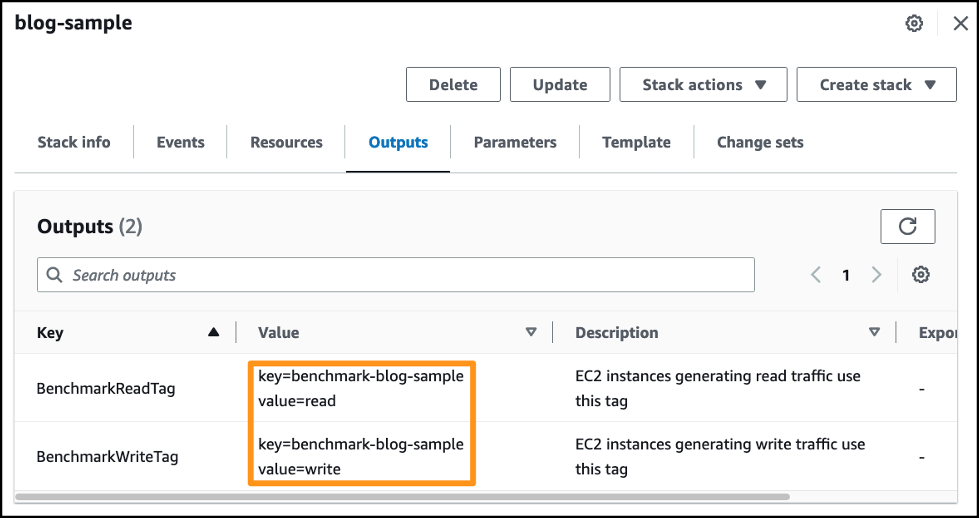

- On the AWS CloudFormation Outputs tab, you can view the tags to use when you run the benchmark.
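If you prefer to script the deployment instead of using the console, the following Python sketch builds an equivalent `create_stack` request for the AWS SDK for Python (Boto3). It then waits for CREATE_COMPLETE and reads the values shown on the Outputs tab. This is a minimal sketch under assumptions. The template parameter keys you pass in are hypothetical, so check the Parameters section of the actual template. `CAPABILITY_IAM` corresponds to the IAM acknowledgment checkbox; a template that gives its IAM resources explicit names needs `CAPABILITY_NAMED_IAM` instead.

```python
# Minimal sketch: deploy the benchmark stack with Boto3 instead of the console.
# Template parameter keys are supplied by the caller; any keys shown in
# examples are hypothetical placeholders.


def build_create_stack_request(stack_name, template_url, params):
    """Build keyword arguments for CloudFormation create_stack."""
    return {
        "StackName": stack_name,
        "TemplateURL": template_url,
        "Parameters": [
            {"ParameterKey": key, "ParameterValue": str(value)}
            for key, value in params.items()
        ],
        # Same effect as "I acknowledge that AWS CloudFormation might create IAM resources".
        "Capabilities": ["CAPABILITY_IAM"],
    }


def stack_outputs(describe_stacks_response):
    """Flatten the Outputs of the first stack into {OutputKey: OutputValue}."""
    stacks = describe_stacks_response.get("Stacks", [])
    if not stacks:
        return {}
    return {o["OutputKey"]: o["OutputValue"] for o in stacks[0].get("Outputs", [])}


def deploy(stack_name, template_url, params, region="us-east-1"):
    """Create the stack, wait for CREATE_COMPLETE, and return its outputs."""
    import boto3  # imported here so the helpers above work without AWS access

    cfn = boto3.client("cloudformation", region_name=region)
    cfn.create_stack(**build_create_stack_request(stack_name, template_url, params))
    cfn.get_waiter("stack_create_complete").wait(StackName=stack_name)
    return stack_outputs(cfn.describe_stacks(StackName=stack_name))
```

The dictionary that `deploy` returns holds the same key/value pairs that the Outputs tab displays.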

Run the benchmark

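After you deploy the resources with the CloudFormation template, you have two EC2 Auto Scaling groups, one for reads and one for writes, and each group has one EC2 instance. The EC2 instances are preconfigured with scripts and the corresponding parameters for running the benchmark with pgbench.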

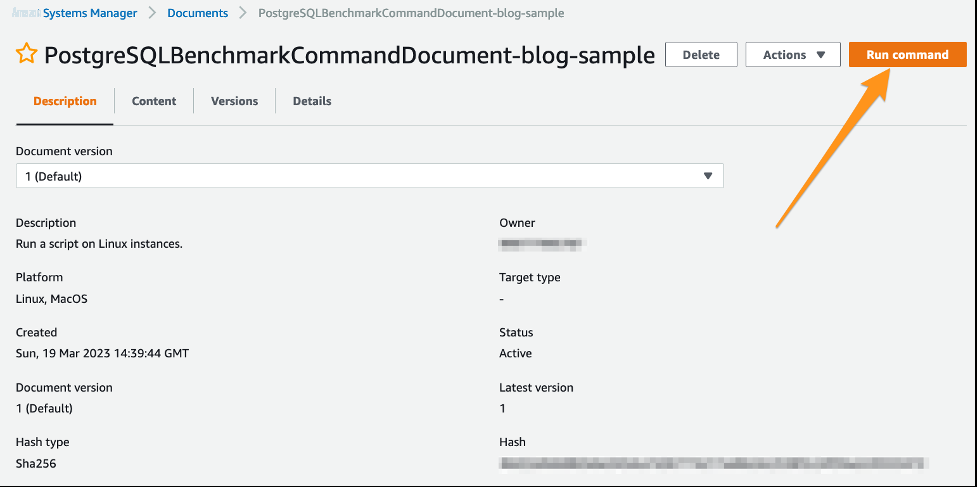
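The following Python sketch shows one way a benchmark script could turn the database secret ARN and the optional reader endpoint into connection settings. It assumes the secret uses the JSON keys that Secrets Manager uses for RDS credentials (`host`, `port`, `username`, `password`). It also assumes the script hands these values to pgbench through the standard libpq environment variables (`PGHOST`, `PGPORT`, `PGUSER`, `PGPASSWORD`, `PGDATABASE`). The function names are hypothetical, and the scripts the template actually installs may work differently.

```python
import json


def connection_env(secret_string, db_name, reader_host=None):
    """Return (writer_env, reader_env) libpq environment variables from a secret."""
    secret = json.loads(secret_string)
    base = {
        "PGPORT": str(secret.get("port", 5432)),
        "PGUSER": secret["username"],
        "PGPASSWORD": secret["password"],
        "PGDATABASE": db_name,
    }
    writer_env = {**base, "PGHOST": secret["host"]}
    # The reader endpoint parameter is optional; fall back to the writer host.
    reader_env = {**base, "PGHOST": reader_host or secret["host"]}
    return writer_env, reader_env


def fetch_secret_string(secret_arn, region="us-east-1"):
    """Hypothetical helper: read the secret string at run time."""
    import boto3  # imported lazily so connection_env works without AWS access

    client = boto3.client("secretsmanager", region_name=region)
    return client.get_secret_value(SecretId=secret_arn)["SecretString"]
```

Merge the resulting dictionary with `os.environ` and pass it as the `env` of a `subprocess.run` call. pgbench then picks up the connection settings, and the password never appears on the command line.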

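To run these commands, use the Systems Manager Run Command capability, which lets you run commands on target EC2 instances by using a command document. A Systems Manager document (SSM document) defines the actions that Systems Manager performs on your managed instances.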

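The CloudFormation template that you used in the earlier step also deploys an SSM document. The document's actions include the pgbench commands and the parameters you provided when you deployed the CloudFormation stack. We use this SSM document to run the benchmark against the target Aurora PostgreSQL database.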
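The following Python sketch illustrates what the pgbench command in the SSM document might look like. It assembles a pgbench command line from the three parameters the template asks for. It assumes the connections parameter maps to pgbench's `-c` (number of clients), the threads parameter maps to `-j` (number of worker threads), and the duration maps to `-T` (seconds). It also assumes each group runs a custom script passed with `-f`. The script names `read.sql` and `write.sql` and the database name `bench` are hypothetical, and the actual document may use different options.

```python
import shlex


def pgbench_args(script_path, clients, threads, duration_s, db_name):
    """Build a pgbench argument list for a custom-script, time-based run."""
    if min(clients, threads, duration_s) < 1:
        raise ValueError("clients, threads, and duration must all be at least 1")
    return [
        "pgbench",
        "-n",                   # skip vacuuming the standard pgbench tables (custom schema)
        "-c", str(clients),     # number of concurrent database sessions
        "-j", str(threads),     # number of pgbench worker threads
        "-T", str(duration_s),  # run for this many seconds
        "-f", script_path,      # custom transaction script instead of built-in TPC-B
        db_name,
    ]


# Example: a read run with 10 clients and 2 threads for 5 minutes.
print(shlex.join(pgbench_args("read.sql", 10, 2, 300, "bench")))
# prints: pgbench -n -c 10 -j 2 -T 300 -f read.sql bench
```

Building an argument list rather than a single string avoids shell quoting problems when you pass it to `subprocess.run`.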

To run the benchmark, complete the following steps:

- On the Systems Manager console, under Shared Resources in the navigation pane, choose Documents.

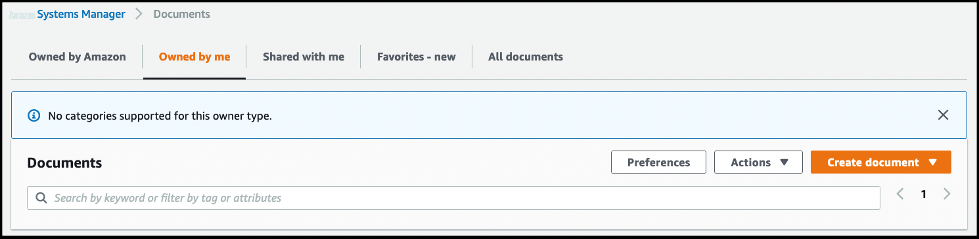

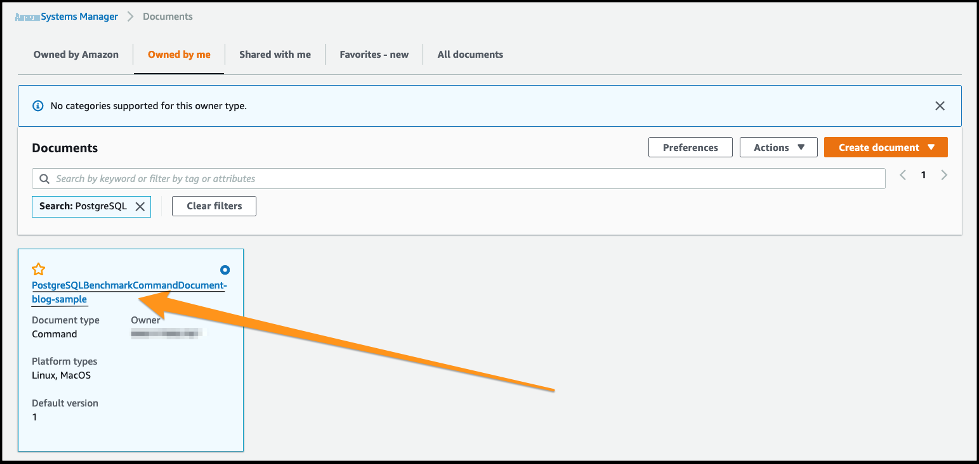

- Choose Owned by me to filter the results by owner.

- The stack name is appended to the end of the command document name. Choose the SSM document hyperlink postgreSQLBenchmarkCommanddocumnedBlog-Sample.

-

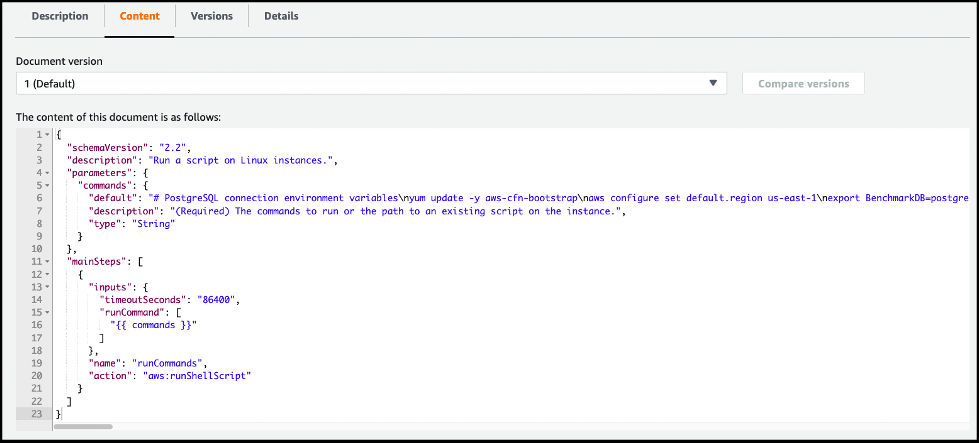

在 “

内容

” 选项卡上查看命令文档的详细信息。

-

选择 “

运行命令

” 。

-

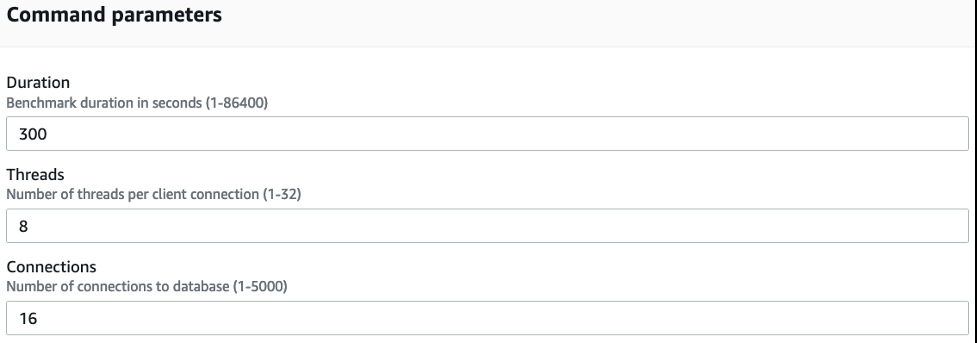

SSM 文档将时长、连接和线程默认设置为您在启动 CloudFormation 模板时指定的值。在运行 SSM 文档之前,您可以更改这些值。

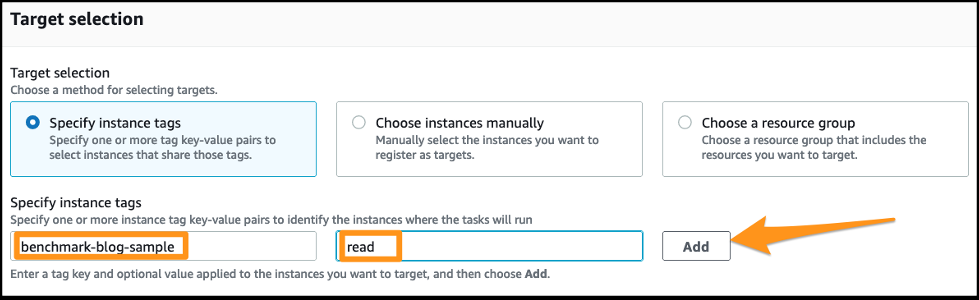

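- For Target selection, select Specify instance tags.

Instance tags select the instances on which the SSM command document runs. The tags identify the read and write groups of EC2 instances. We added the tag benchmark-{stack name} with the following values:

- read: EC2 instances with this tag value run pgbench with SQL SELECT statements.

- write: EC2 instances with this tag value run pgbench with SQL INSERT, DELETE, and UPDATE statements.

- Enter the tag key benchmark-{stack name} and the value read, and then choose Add. In this post, we use benchmark-blog-sample.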

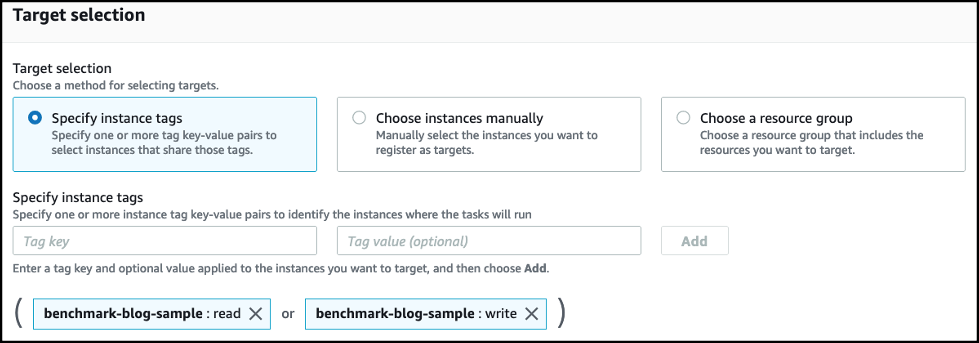

-

添加第二个实例标签密钥作为基准测试博客样本,写入值。

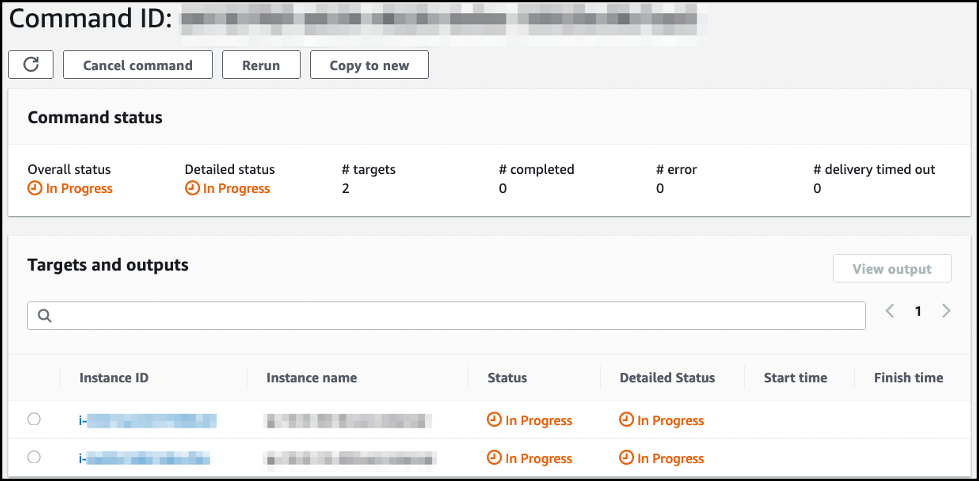

- 添加实例标签后,向下滚动到页面末尾并选择 Run 。

-

现在,您有一组发送读取流量的 EC2 实例和另一组发送写入流量的实例。

-

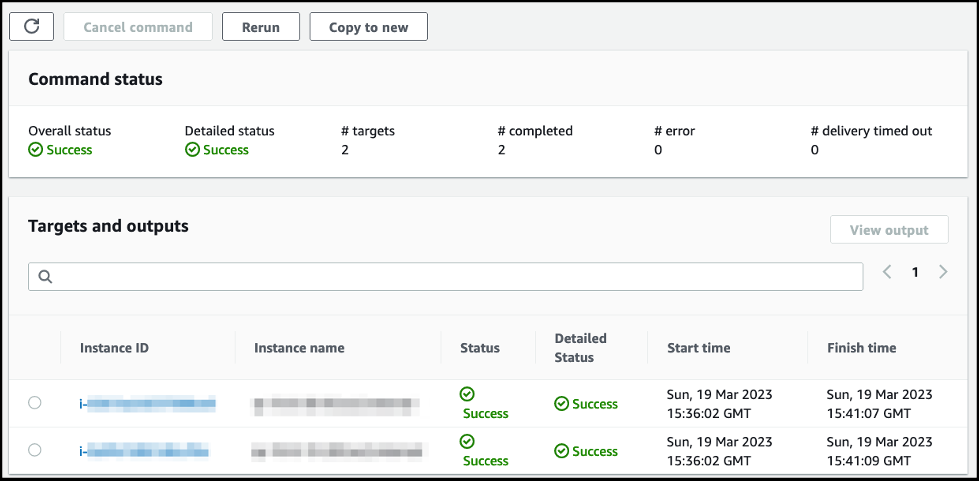

基准测试完成 后,状态更改为 “

成功

”。

-

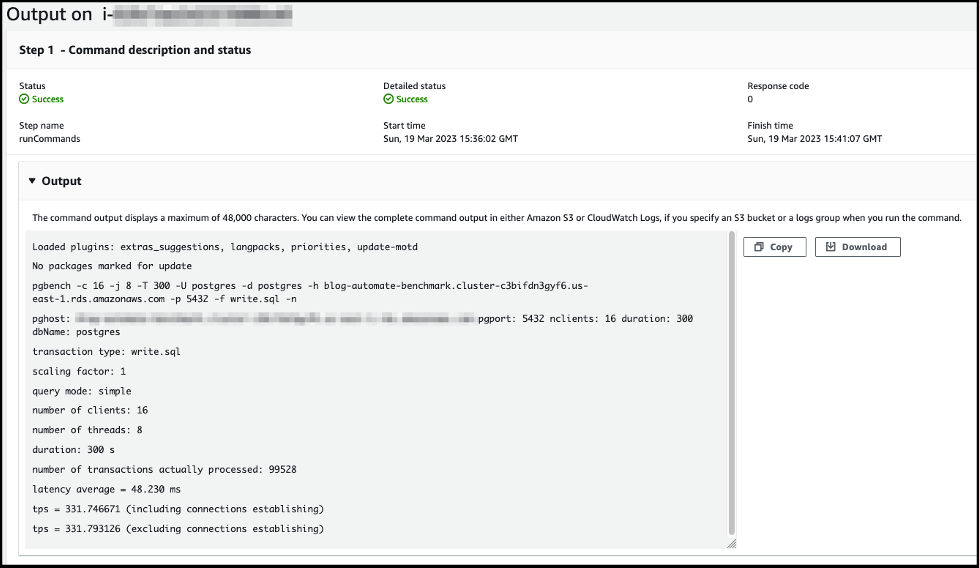

选择实例 ID 以查看基准测试脚本的输出。
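The console steps above can also be scripted. The following Python sketch builds a Systems Manager `send_command` request that targets instances by the benchmark-{stack name} tag, which corresponds to choosing Specify instance tags in the console. The document name and tag key match the examples in this post. The SSM document parameter names (such as `duration`) are hypothetical, so check the document's Content tab for the real ones.

```python
def build_send_command(document_name, tag_key, tag_values, parameters=None):
    """Build keyword arguments for SSM send_command using tag-based targets."""
    request = {
        "DocumentName": document_name,
        # Instances whose tag matches any of the listed values are targeted.
        "Targets": [{"Key": f"tag:{tag_key}", "Values": list(tag_values)}],
    }
    if parameters:
        # SSM expects each document parameter value as a list of strings.
        request["Parameters"] = {k: [str(v)] for k, v in parameters.items()}
    return request


def run_benchmark(document_name, stack_name, parameters=None, region="us-east-1"):
    """Send the benchmark document to the read and write instances; return the command ID."""
    import boto3  # imported lazily so build_send_command works without AWS access

    ssm = boto3.client("ssm", region_name=region)
    request = build_send_command(
        document_name, f"benchmark-{stack_name}", ["read", "write"], parameters
    )
    return ssm.send_command(**request)["Command"]["CommandId"]
```

You can then follow progress with `list_command_invocations(CommandId=...)`. Each instance's `Status` changes to `Success` when its run completes.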

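Troubleshooting

If your EC2 instances don't appear under managed instances in the Systems Manager console:

- For private subnets, your instances must be able to reach the internet through a NAT gateway, or you can configure VPC endpoints for Systems Manager.

- Check that your security group allows access between the EC2 instances and the database.

- Check that the network ACLs assigned to your VPC private subnets allow the network traffic.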

- Review the AWS Systems Manager Quick Setup documentation.

- For more help, see https://repost.aws/knowledge-center/systems-manager-ec2-instance-not-appear.
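To find out which benchmark instances Systems Manager does not report as managed, you can compare the instance IDs in your Auto Scaling groups with the output of `describe_instance_information`. The following Python sketch is a minimal version of that check. The function names are hypothetical, and the sketch treats any instance whose `PingStatus` is not `Online` as missing.

```python
def missing_managed_instances(instance_ids, instance_information_list):
    """Return the given instance IDs that are not online in Systems Manager."""
    online = {
        info["InstanceId"]
        for info in instance_information_list
        if info.get("PingStatus") == "Online"
    }
    return sorted(set(instance_ids) - online)


def check_managed_instances(instance_ids, region="us-east-1"):
    """Query Systems Manager and report benchmark instances that are not managed."""
    import boto3  # imported lazily so the pure helper above works without AWS access

    ssm = boto3.client("ssm", region_name=region)
    infos = []
    for page in ssm.get_paginator("describe_instance_information").paginate():
        infos.extend(page["InstanceInformationList"])
    return missing_managed_instances(instance_ids, infos)
```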

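If the command status is anything other than Success, you need to troubleshoot the error. The following common errors and their fixes can help: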

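- Access denied: The AWS Identity and Access Management (IAM) user or role that started the command doesn't have access to the EC2 instances. Check the permissions assigned to the role.

- Failed: The benchmark script reported an error. Review the benchmark script output for details about the error.

- Connection timeout: Verify that you chose the correct subnet and that the security group allows access between the EC2 instances and the database. For help resolving connection issues, see https://aws.amazon.com/premiumsupport/knowledge-center/rds-cannot-connect/ ...

- Unknown server name SQL error: Verify that the username, password, host, and port key/value pairs in the Secrets Manager secret are correct.

- Permission denied SQL error: Verify that the database user defined in the Secrets Manager secret has read, write, and delete permissions on the database tables, and permission to use the sequences.

- Relation does not exist SQL error: Verify that you created the schema as described in Part 1 of this post. Check that the database name you provided in the Database name CloudFormation parameter is correct.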
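When you review the benchmark script output, a small lookup like the following Python sketch can map typical PostgreSQL and libpq error text to the checks listed above. The message patterns are assumptions based on common client messages, for example `could not translate host name` for an unresolvable host and `relation "orders" does not exist` for a missing table. Adjust them to match the text your scripts actually print.

```python
import re

# Patterns are based on common libpq/PostgreSQL messages (assumption);
# adjust them to the output your benchmark scripts produce.
_HINTS = [
    (re.compile(r"could not translate host name", re.I),
     "Check host, port, username, and password in the Secrets Manager secret."),
    (re.compile(r"timeout expired|connection timed out", re.I),
     "Check the subnet and the security groups between EC2 and the database."),
    (re.compile(r"permission denied for", re.I),
     "Grant the secret's database user access to the tables and sequences."),
    (re.compile(r'relation ".*" does not exist', re.I),
     "Create the schema from Part 1 and verify the Database name parameter."),
]


def troubleshooting_hint(output):
    """Return the first matching hint for a benchmark error message, or None."""
    for pattern, hint in _HINTS:
        if pattern.search(output):
            return hint
    return None
```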

Monitoring

When you run benchmarks, several tools are available for

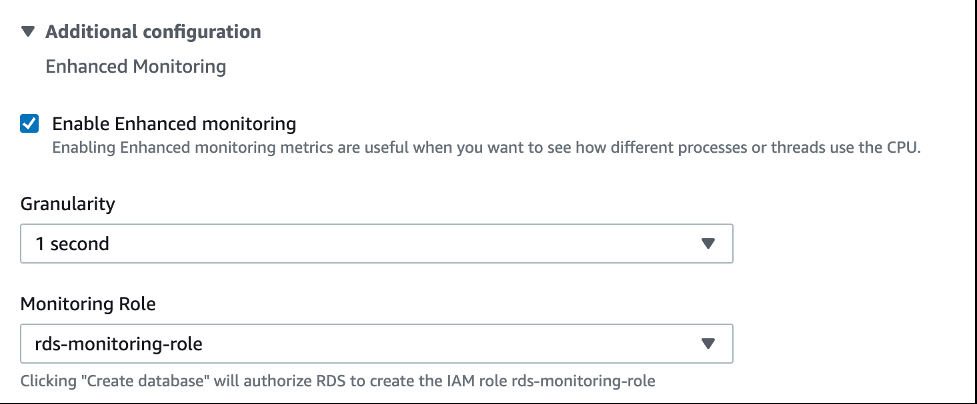

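For detailed monitoring and logging, you can enable Enhanced Monitoring and export PostgreSQL logs to CloudWatch. With these settings, you can search all database logs, create alarms on them, and get a unified, detailed view of them from one place. Exporting logs to CloudWatch also keeps them in highly durable storage. To learn more, see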

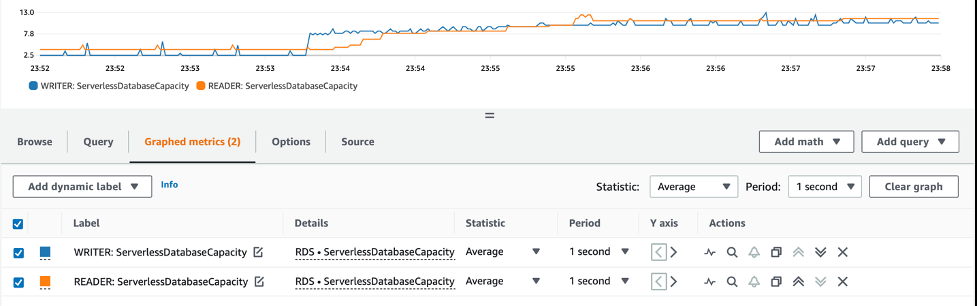

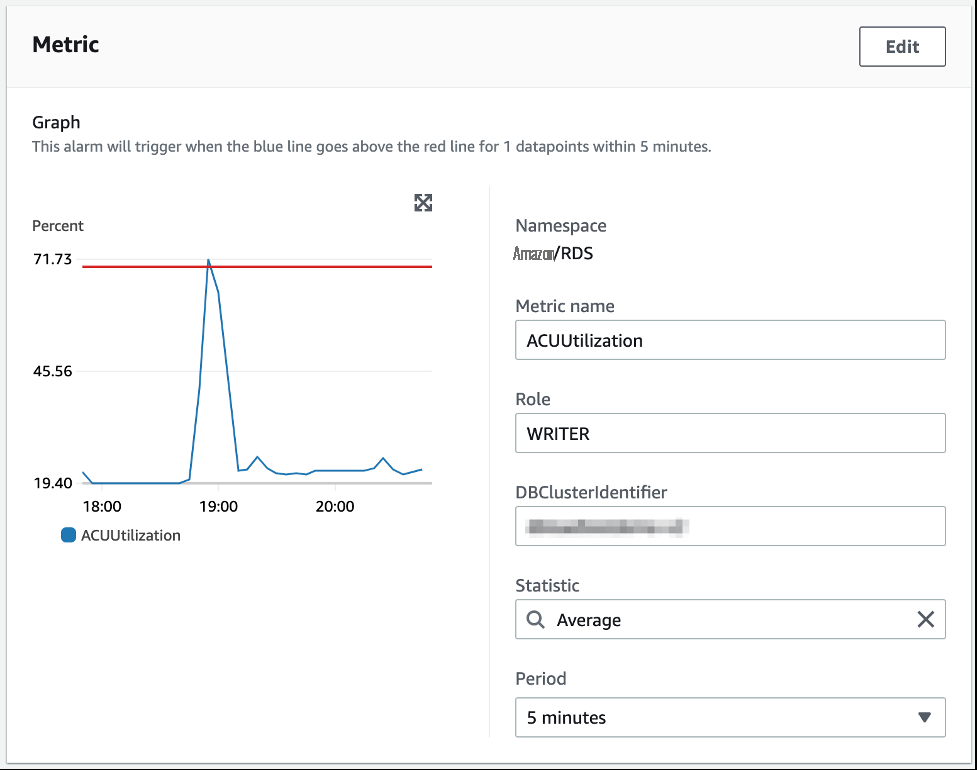

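The following screenshots show an example of monitoring during this benchmark run. The example is based on Aurora Serverless v2 with one writer and one reader, both configured with 1-16 Aurora capacity units (ACUs).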

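ACU-related metrics apply only to Aurora Serverless. If you follow this post with a non-serverless database, you won't see ACU metrics.

To enable Enhanced Monitoring, in the database configuration, choose

To enable log export, select PostgreSQL log under Log exports in the database configuration.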

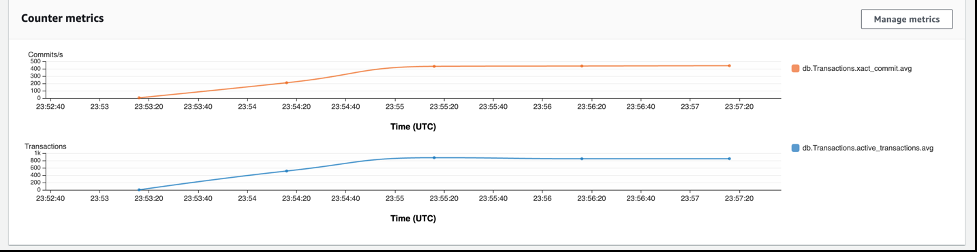
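You can also apply these settings with the AWS SDK instead of the console. The following Python sketch builds the Amazon RDS requests for both. Log export is configured on the Aurora cluster with `modify_db_cluster`. Enhanced Monitoring is configured per DB instance with `modify_db_instance` and requires an IAM role that RDS can use to publish the metrics. The identifiers and role ARN are placeholders, and the 60-second interval is an arbitrary example value.

```python
VALID_MONITORING_INTERVALS = (1, 5, 10, 15, 30, 60)  # seconds; 0 disables it


def log_export_request(cluster_id):
    """modify_db_cluster arguments that publish PostgreSQL logs to CloudWatch Logs."""
    return {
        "DBClusterIdentifier": cluster_id,
        "CloudwatchLogsExportConfiguration": {"EnableLogTypes": ["postgresql"]},
    }


def enhanced_monitoring_request(instance_id, monitoring_role_arn, interval_s=60):
    """modify_db_instance arguments that turn on Enhanced Monitoring."""
    if interval_s not in VALID_MONITORING_INTERVALS:
        raise ValueError(f"unsupported Enhanced Monitoring interval: {interval_s}")
    return {
        "DBInstanceIdentifier": instance_id,
        "MonitoringInterval": interval_s,
        "MonitoringRoleArn": monitoring_role_arn,
        "ApplyImmediately": True,
    }


def apply_monitoring(cluster_id, instance_ids, monitoring_role_arn, region="us-east-1"):
    """Enable log export on the cluster and Enhanced Monitoring on each instance."""
    import boto3  # imported lazily so the request builders work without AWS access

    rds = boto3.client("rds", region_name=region)
    rds.modify_db_cluster(**log_export_request(cluster_id))
    for instance_id in instance_ids:
        rds.modify_db_instance(**enhanced_monitoring_request(instance_id, monitoring_role_arn))
```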

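With Performance Insights, you can view a variety of metrics out of the box as graphs, such as db.transactions.xact_commit.avg and db.transactions.actions.avg, which can indicate that the load on the database is increasing.

Performance Insights: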

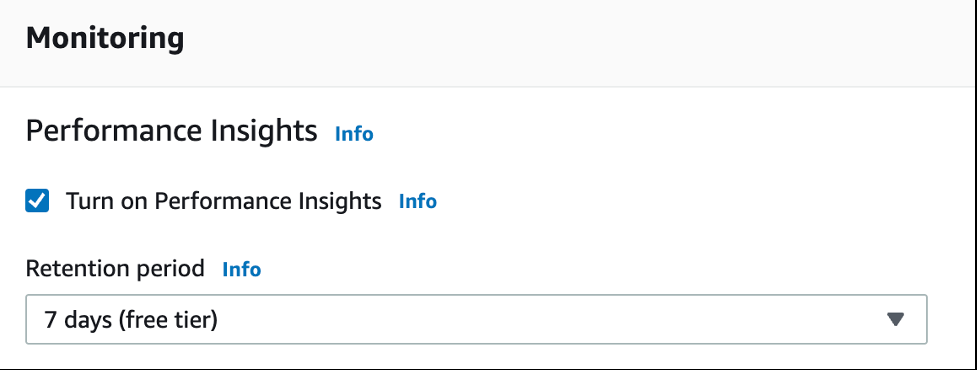

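You can enable this feature when you create the database, or later in the database configuration. For more information, see Turning on

To learn more about Performance Insights and its metrics, see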

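Another way to capture database load is with CloudWatch, where you can view metrics that help you visualize the number of ACUs your database is using.

The ServerlessDatabaseCapacity metric shows the number of ACUs in use.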
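To pull ServerlessDatabaseCapacity values for a benchmark window programmatically, you can call CloudWatch `get_metric_statistics` in the `AWS/RDS` namespace. The following Python sketch builds that request and finds the peak ACU value in the returned datapoints. The identifier is a placeholder. The dimension defaults to `DBInstanceIdentifier` for per-instance capacity, and you should confirm the available dimensions in your CloudWatch console before relying on them.

```python
from datetime import datetime, timedelta, timezone


def capacity_query(db_identifier, end, minutes=60, dimension="DBInstanceIdentifier"):
    """Build get_metric_statistics arguments for ServerlessDatabaseCapacity."""
    return {
        "Namespace": "AWS/RDS",
        "MetricName": "ServerlessDatabaseCapacity",
        "Dimensions": [{"Name": dimension, "Value": db_identifier}],
        "StartTime": end - timedelta(minutes=minutes),
        "EndTime": end,
        "Period": 60,  # one datapoint per minute
        "Statistics": ["Average", "Maximum"],
    }


def peak_acus(datapoints):
    """Highest per-period Maximum in a get_metric_statistics response."""
    return max((p["Maximum"] for p in datapoints), default=0.0)


def fetch_peak_acus(db_identifier, minutes=60, region="us-east-1"):
    """Return the peak ACUs used by a database over the last `minutes` minutes."""
    import boto3  # imported lazily so the helpers above work without AWS access

    cloudwatch = boto3.client("cloudwatch", region_name=region)
    end = datetime.now(timezone.utc)
    response = cloudwatch.get_metric_statistics(**capacity_query(db_identifier, end, minutes))
    return peak_acus(response["Datapoints"])
```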

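Another metric to consider is ACUUtilization, which shows the ACUs in use as a percentage of the database's maximum configured ACUs. This helps you benchmark and right-size serverless databases. You can also configure alarms based on these metrics. For more information, see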

For more information about using
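Here is a worked example of the ACUUtilization ratio. An instance running at 12 ACUs with a maximum capacity of 16 ACUs is at 12 / 16 × 100 = 75%. The following Python sketch shows that arithmetic and builds a CloudWatch `put_metric_alarm` request on ACUUtilization. The 80% threshold, 1-minute period, and five evaluation periods are arbitrary example values, not recommendations from this post. The dimension name is an assumption.

```python
def acu_utilization(current_acus, max_acus):
    """ACUs in use as a percentage of the maximum configured ACUs."""
    if max_acus <= 0:
        raise ValueError("max_acus must be positive")
    return 100.0 * current_acus / max_acus


def acu_alarm_request(alarm_name, db_identifier, threshold_pct=80.0,
                      dimension="DBInstanceIdentifier"):
    """Build put_metric_alarm arguments that fire on sustained high ACUUtilization."""
    return {
        "AlarmName": alarm_name,
        "Namespace": "AWS/RDS",
        "MetricName": "ACUUtilization",
        "Dimensions": [{"Name": dimension, "Value": db_identifier}],
        "Statistic": "Average",
        "Period": 60,
        "EvaluationPeriods": 5,  # five consecutive one-minute periods
        "Threshold": threshold_pct,
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
    }


print(acu_utilization(12, 16))  # prints 75.0
```

To create the alarm, pass the dictionary to `boto3.client("cloudwatch").put_metric_alarm(**request)`. Add `AlarmActions` (for example, an Amazon SNS topic ARN) if you want to be notified.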

Clean up

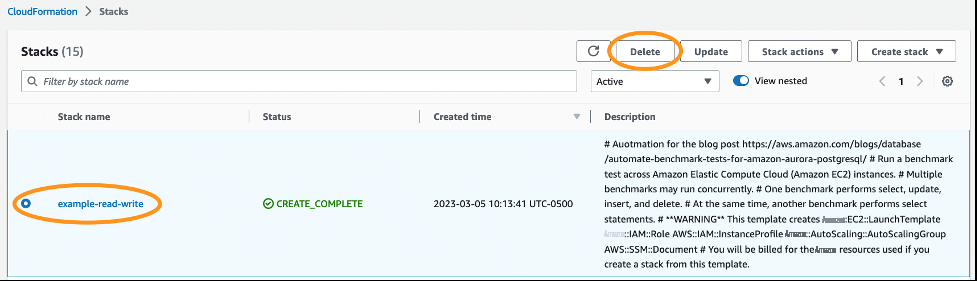

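To avoid future charges, delete the resources that you created while following this post. From the CloudFormation console

After the stack deletion completes, the stack status changes to DELETE_COMPLETE, and the resources created by the CloudFormation template are deleted.

If you created the prerequisites for this post, you also need to delete them.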
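If you deployed the stack from a script, you can clean it up the same way. The following Python sketch lists the resources that deleting the stack removes, grouped by type. It then deletes the stack and waits for DELETE_COMPLETE. The function names are hypothetical. The sketch does not remove the prerequisites, because you created them outside the stack.

```python
from collections import Counter


def resource_type_counts(stack_resource_summaries):
    """Count stack resources by type, for example before deleting the stack."""
    return dict(Counter(r["ResourceType"] for r in stack_resource_summaries))


def delete_benchmark_stack(stack_name, region="us-east-1"):
    """Delete the stack and block until it reaches DELETE_COMPLETE."""
    import boto3  # imported lazily so resource_type_counts works without AWS access

    cfn = boto3.client("cloudformation", region_name=region)
    summaries = []
    for page in cfn.get_paginator("list_stack_resources").paginate(StackName=stack_name):
        summaries.extend(page["StackResourceSummaries"])
    print("Deleting:", resource_type_counts(summaries))
    cfn.delete_stack(StackName=stack_name)
    # The waiter raises botocore.exceptions.WaiterError if deletion fails.
    cfn.get_waiter("stack_delete_complete").wait(StackName=stack_name)
```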

Conclusion

In this post, we provided a method that uses

When you run benchmarks against your database, it's important to

About the authors

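Andrew Love is a Senior Data Lab Solutions Architect at AWS. He is passionate about helping customers build well-architected solutions that meet their business needs. He enjoys spending time with his family, a good game of chess, home improvement projects, and writing code.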


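Andre Hass is a Senior Data Lab Solutions Architect at AWS. He has more than 20 years of experience in databases and data analytics. Andre enjoys camping, hiking, and exploring new places with his family on weekends or whenever he gets the chance. He also loves technology and electronic gadgets.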


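Mike Parks is a Senior Solutions Architect specializing in databases at AWS. He has more than 15 years of experience in the enterprise IT industry and has worked with databases throughout his career. He enjoys solving problems for customers and helping them succeed in their missions. In his spare time, he enjoys running and outdoor activities.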

