
Amazon DynamoDB transaction framework

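Developers are familiar with the concept of ACID transactions, but they sometimes struggle with the DynamoDB transaction API, because it presents and commits transactions in a different format from SQL.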

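In this post, we introduce a framework that uses a layered approach to standardize DynamoDB transactions and present them in a format developers already know. We use ASP.NET Core in C# to show how to use the framework. You can implement this design pattern on any other platform or in a different programming language.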

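The goals of the framework are to:

- Present the DynamoDB API in a format familiar to developers

- Separate the transaction flow into a dedicated, single-responsibility framework that multiple client applications can use

- Provide interface segregation, so clients of the framework do not need to worry about the format of the DynamoDB transaction API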

Framework design overview

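The transaction framework presented in this post wraps the DynamoDB API in a common transaction scope class, which maintains the transaction items to be committed or rolled back. The framework's TransactExtensions class allows transactional operation requests for database put, update, and delete actions to be added to a TransactScope instance.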
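To make the design concrete, here is a minimal sketch of such a scope. It is written in Python rather than the post's C#, and it is not the framework's actual code: the method names `add_put`, `add_update`, `add_delete`, and `to_request` are assumptions made for illustration. The only part taken from DynamoDB itself is the shape of the `TransactItems` list that the TransactWriteItems API accepts.

```python
from typing import Any, Dict, List

# DynamoDB attribute map, e.g. {"Artist": {"S": "Johann Sebastian Bach"}}
AttributeMap = Dict[str, Dict[str, Any]]


class TransactScope:
    """Hypothetical scope: collects actions only and never calls AWS itself."""

    def __init__(self) -> None:
        self.items: List[Dict[str, Any]] = []

    def add_put(self, table: str, item: AttributeMap) -> "TransactScope":
        self.items.append({"Put": {"TableName": table, "Item": item}})
        return self

    def add_delete(self, table: str, key: AttributeMap) -> "TransactScope":
        self.items.append({"Delete": {"TableName": table, "Key": key}})
        return self

    def add_update(self, table: str, key: AttributeMap,
                   changes: AttributeMap) -> "TransactScope":
        # Placeholders (#a0, :v0, ...) keep reserved words out of the expression.
        names = {f"#a{i}": name for i, name in enumerate(changes)}
        values = {f":v{i}": value for i, value in enumerate(changes.values())}
        assignments = ", ".join(f"#a{i} = :v{i}" for i in range(len(changes)))
        self.items.append({"Update": {
            "TableName": table,
            "Key": key,
            "UpdateExpression": "SET " + assignments,
            "ExpressionAttributeNames": names,
            "ExpressionAttributeValues": values,
        }})
        return self

    def to_request(self) -> Dict[str, Any]:
        """Keyword arguments in the shape TransactWriteItems expects."""
        return {"TransactItems": list(self.items)}


scope = TransactScope()
scope.add_put("Album", {"Artist": {"S": "Johann Sebastian Bach"},
                        "Title": {"S": "6 Partitas"}})
scope.add_update("Book",
                 {"Author": {"S": "Homer"}, "Title": {"S": "The Odyssey"}},
                 {"Language": {"S": "English"}})
request = scope.to_request()
```

With boto3, the resulting dictionary could be passed as `client.transact_write_items(**request)`. Keeping the scope free of any client object is a design choice of this sketch, not something the post prescribes.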

Transaction workflow overview

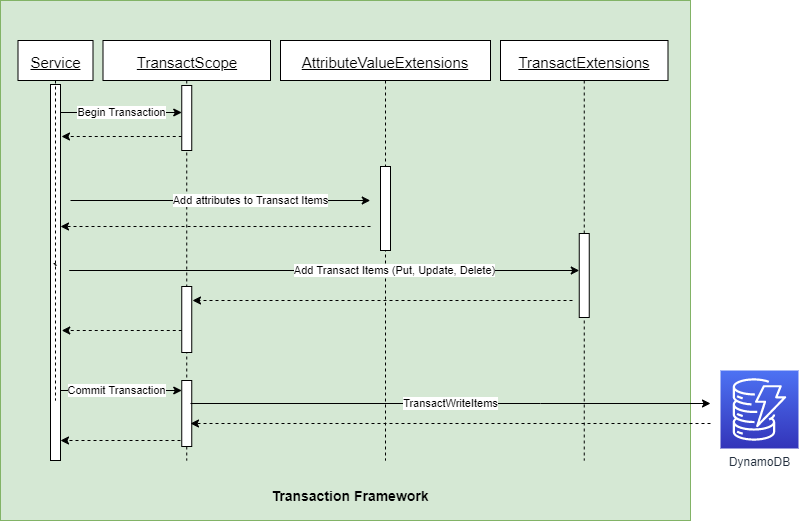

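The figure above illustrates the transaction workflow, which the following paragraphs describe. The service component represents the client application, which holds the business logic for beginning, committing, or rolling back a transaction.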

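When a typical workflow starts, the TransactScope class initializes its internal DynamoDB client object and its operation container. Each operation targets a DynamoDB table item for an add, update, or delete action. The actions can target items in different tables, but not in different AWS accounts or Regions. No two actions can target the same item. For example, you cannot run both a condition check and an update against the same item in one transaction.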
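The same-item rule can be checked on the client before a transaction is submitted. The sketch below is one possible way to do that, in Python; the helper names and the `KEY_NAMES` mapping are hypothetical, and the key attributes follow the Album, Book, and Movie tables used later in this post.

```python
import json
from typing import Any, Dict, List, Tuple

# Assumed key schema per table: (partition key, sort key).
KEY_NAMES = {
    "Album": ("Artist", "Title"),
    "Book": ("Author", "Title"),
    "Movie": ("Director", "Title"),
}


def target_of(action: Dict[str, Any]) -> Tuple[str, str]:
    """Return (table, canonical primary key) for one TransactItems entry."""
    kind, body = next(iter(action.items()))
    table = body["TableName"]
    # A Put carries the key inside the full item; other actions carry "Key".
    source = body["Item"] if kind == "Put" else body["Key"]
    key = {name: source[name] for name in KEY_NAMES[table]}
    return table, json.dumps(key, sort_keys=True)


def find_duplicate_targets(actions: List[Dict[str, Any]]) -> List[Tuple[str, str]]:
    """List every target that is addressed by more than one action."""
    seen, duplicates = set(), []
    for action in actions:
        target = target_of(action)
        if target in seen:
            duplicates.append(target)
        seen.add(target)
    return duplicates


kid = {"Director": {"S": "Charlie Chaplin"}, "Title": {"S": "The Kid"}}
actions = [
    {"ConditionCheck": {"TableName": "Movie", "Key": kid,
                        "ConditionExpression": "attribute_exists(Director)"}},
    {"Update": {"TableName": "Movie", "Key": kid,
                "UpdateExpression": "SET Genre = :g",
                "ExpressionAttributeValues": {":g": {"S": "comedy"}}}},
]
duplicates = find_duplicate_targets(actions)
```

Here `duplicates` contains one entry for the Movie item, because the condition check and the update address the same key.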

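The user application, which we continue to call the "service", uses AttributeValueExtensions to add attributes of various types to an item, and then uses TransactExtensions to add the item to the container in TransactScope.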
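As an illustration of what an attribute extension does, the following Python sketch converts plain values into DynamoDB attribute values. The function names `to_attribute_value` and `to_item` are hypothetical and only a handful of types are covered; the type descriptors (`S`, `N`, `BOOL`, `NULL`, `SS`, `L`, `M`) are the ones DynamoDB defines.

```python
from decimal import Decimal
from typing import Any, Dict


def to_attribute_value(value: Any) -> Dict[str, Any]:
    """Map a Python value to a DynamoDB attribute value (partial coverage)."""
    if value is None:
        return {"NULL": True}
    if isinstance(value, bool):  # must come before int: bool is an int subclass
        return {"BOOL": value}
    if isinstance(value, (int, Decimal)):
        return {"N": str(value)}  # DynamoDB sends numbers as strings
    if isinstance(value, str):
        return {"S": value}
    if isinstance(value, (set, frozenset)):
        if value and all(isinstance(v, str) for v in value):
            return {"SS": sorted(value)}  # sorted only for stable output
        raise TypeError("only non-empty sets of strings are supported here")
    if isinstance(value, (list, tuple)):
        return {"L": [to_attribute_value(v) for v in value]}
    if isinstance(value, dict):
        return {"M": {k: to_attribute_value(v) for k, v in value.items()}}
    raise TypeError(f"unsupported type: {type(value).__name__}")


def to_item(entity: Dict[str, Any]) -> Dict[str, Dict[str, Any]]:
    """Convert a whole entity into a DynamoDB item."""
    return {name: to_attribute_value(value) for name, value in entity.items()}


movie = to_item({
    "Director": "Charlie Chaplin",
    "Title": "The Kid",
    "Genre": {"comedy", "drama", "children"},
})
```

Storing Genre as a string set is an assumption of this sketch; the post does not say which DynamoDB type the sample application uses for it.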

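After the item operations are complete, the service can commit the items to the DynamoDB tables, so that they either all succeed or all fail. The service can roll back the scope by removing the TransactScope object and cleaning up the container.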
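Because nothing is sent to DynamoDB until commit, rolling back amounts to discarding the collected actions. The Python sketch below expresses that as a context manager; `transaction` and `RecordingClient` are hypothetical names, and `RecordingClient` is only a stand-in so that the example runs without AWS credentials. A real boto3 DynamoDB client exposes the same `transact_write_items(TransactItems=...)` method.

```python
from contextlib import contextmanager
from typing import Any, Dict, Iterator, List


class RecordingClient:
    """Offline stand-in for a DynamoDB client; records what would be sent."""

    def __init__(self) -> None:
        self.calls: List[Dict[str, Any]] = []

    def transact_write_items(self, **kwargs: Any) -> None:
        self.calls.append(kwargs)


@contextmanager
def transaction(client: Any) -> Iterator[List[Dict[str, Any]]]:
    """Commit the collected actions on a clean exit, discard them on error."""
    actions: List[Dict[str, Any]] = []
    try:
        yield actions
    except Exception:
        actions.clear()  # rollback: nothing has been sent yet
        raise
    else:
        if actions:
            client.transact_write_items(TransactItems=list(actions))
        actions.clear()


client = RecordingClient()
odyssey = {"Author": {"S": "Homer"}, "Title": {"S": "The Odyssey"}}

# Clean exit: the single action is committed in one request.
with transaction(client) as actions:
    actions.append({"Delete": {"TableName": "Book", "Key": odyssey}})

# Failure inside the block: the pending action is dropped, no request is made.
try:
    with transaction(client) as actions:
        actions.append({"Put": {"TableName": "Book", "Item": odyssey}})
        raise ValueError("business rule failed")
except ValueError:
    pass
```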

Example use case for the framework

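This example demonstrates the framework's usage pattern by adding, deleting, and updating items in an atomic transaction. It uses the transaction framework and a layered approach to illustrate transactional operations on three product types: Album, Book, and Movie.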

Items of the three product types are kept in three DynamoDB tables: Album, Book, and Movie.

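In the Album table, each album product has the attributes Artist and Title, where Artist is the partition key (PK) and Title is the sort key (SK). This design lets users search for an artist's albums. For example, you can query the albums by the artist Johann Sebastian Bach, or get the album titled 6 Partitas.

A book has the attributes Author, PublishDate, and Title, where Author is the PK of the Book table and Title is the SK. For example, you can query the book The Odyssey by the author Homer, and then obtain its attributes: a PublishDate of 1614 and a Language of English.

A movie product has attributes such as Director, Genre, and Title, where Director is the PK of the Movie table and Title is the SK. For example, you can query the movie The Kid by Charlie Chaplin and learn that its Genre is comedy, drama, and children.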
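The key design of the three tables can be written down as table definitions. This Python sketch only builds the parameter dictionaries that the DynamoDB CreateTable and Query operations accept; the helper names are hypothetical, and the string key types and on-demand billing mode are assumptions, since the post does not state them.

```python
from typing import Any, Dict


def table_definition(table: str, partition_key: str, sort_key: str) -> Dict[str, Any]:
    """CreateTable parameters for a table with string PK and SK (assumed types)."""
    return {
        "TableName": table,
        "AttributeDefinitions": [
            {"AttributeName": partition_key, "AttributeType": "S"},
            {"AttributeName": sort_key, "AttributeType": "S"},
        ],
        "KeySchema": [
            {"AttributeName": partition_key, "KeyType": "HASH"},   # PK
            {"AttributeName": sort_key, "KeyType": "RANGE"},       # SK
        ],
        "BillingMode": "PAY_PER_REQUEST",  # assumption: on-demand capacity
    }


def query_by_partition_key(table: str, partition_key: str, value: str) -> Dict[str, Any]:
    """Query parameters that return every item sharing one partition key."""
    return {
        "TableName": table,
        "KeyConditionExpression": "#pk = :pk",
        "ExpressionAttributeNames": {"#pk": partition_key},
        "ExpressionAttributeValues": {":pk": {"S": value}},
    }


definitions = [
    table_definition("Album", "Artist", "Title"),
    table_definition("Book", "Author", "Title"),
    table_definition("Movie", "Director", "Title"),
]
bach_albums = query_by_partition_key("Album", "Artist", "Johann Sebastian Bach")
```

With boto3, each definition could be passed as `client.create_table(**definition)` and the query as `client.query(**bach_albums)`.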

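An alternative is to store the different entities in a single table with generic PK and SK attributes and a different prefix for each entity type, such as A# for album artists, B# for book authors, and M# for movie directors. For example: A#Johann Sebastian Bach, B#Homer, and M#Charlie Chaplin. In this example, we continue to use three separate tables.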
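For comparison, the single-table alternative could build its keys as in the following Python sketch. The function name and the generic attribute names `PK` and `SK` are illustrative, and using the title as the sort key is an assumption carried over from the three-table design.

```python
from typing import Dict

# Prefixes from the text: A# album artist, B# book author, M# movie director.
PREFIXES = {"Album": "A#", "Book": "B#", "Movie": "M#"}


def single_table_key(product_type: str, owner: str, title: str) -> Dict[str, Dict[str, str]]:
    """Build the generic PK/SK pair for one product in a shared table."""
    return {
        "PK": {"S": PREFIXES[product_type] + owner},
        "SK": {"S": title},
    }


keys = [
    single_table_key("Album", "Johann Sebastian Bach", "6 Partitas"),
    single_table_key("Book", "Homer", "The Odyssey"),
    single_table_key("Movie", "Charlie Chaplin", "The Kid"),
]
```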

We use a front-end user interface to walk through the following steps:

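- Create the three tables by choosing "Create Product Tables (Book, Album, Movie)".

- Choose "Begin Transaction" to start a new transaction.

- In the "Product Type" dropdown menu, choose "Album", "Book", or "Movie".

- Enter the product attributes that correspond to the selected product type.

- Choose "Add Item" or "Delete Item" to add an item to, or remove an item from, the corresponding table.

- To update an item, enter the product's PK and SK, choose "Retrieve Item" to get the latest version of the item, change the attributes as needed, and then choose "Add Item".

- Choose "Commit Transaction" to commit the transaction items to the tables, or choose "Cancel Transaction" to roll back the transaction.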

Layered approach

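We implement this example in three layers (front end, service, and data provider), each split into an interface and an implementation. The interface defines the layer's prototype, and the implementation contains the details. The front-end layer interacts with the service layer through its interface to create tables; begin a transaction; add, update, or delete items; and then commit or roll back the transaction. The service layer holds the core business logic. It interacts with the data provider layer, which manages the Album, Book, and Movie product types, and with the transaction framework. The data provider layer decouples the DynamoDB API from the service layer for DynamoDB item operations.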
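The layering can be sketched as follows, again in Python and with hypothetical names throughout (`DataProvider`, `TableProvider`, and the methods of `ProductService` are not taken from the sample application). The point of the sketch is that the service layer depends only on the provider interface, while the provider is the only place that knows the DynamoDB item format.

```python
from typing import Any, Dict, List, Protocol


class DataProvider(Protocol):
    """Data provider interface: turns entities into DynamoDB actions."""

    def put_action(self, entity: Dict[str, str]) -> Dict[str, Any]: ...

    def delete_action(self, pk: str, sk: str) -> Dict[str, Any]: ...


class TableProvider:
    """One implementation, reused per product table (string attributes only)."""

    def __init__(self, table: str, pk_name: str, sk_name: str) -> None:
        self.table, self.pk_name, self.sk_name = table, pk_name, sk_name

    def put_action(self, entity: Dict[str, str]) -> Dict[str, Any]:
        item = {name: {"S": value} for name, value in entity.items()}
        return {"Put": {"TableName": self.table, "Item": item}}

    def delete_action(self, pk: str, sk: str) -> Dict[str, Any]:
        key = {self.pk_name: {"S": pk}, self.sk_name: {"S": sk}}
        return {"Delete": {"TableName": self.table, "Key": key}}


class ProductService:
    """Service layer: business logic that sees providers only via the interface."""

    def __init__(self, providers: Dict[str, DataProvider]) -> None:
        self._providers = providers
        self._pending: List[Dict[str, Any]] = []

    def begin(self) -> None:
        self._pending = []

    def add_item(self, product_type: str, entity: Dict[str, str]) -> None:
        self._pending.append(self._providers[product_type].put_action(entity))

    def delete_item(self, product_type: str, pk: str, sk: str) -> None:
        self._pending.append(self._providers[product_type].delete_action(pk, sk))

    def pending(self) -> List[Dict[str, Any]]:
        return list(self._pending)


# The front end would drive the service roughly like this.
service = ProductService({
    "Album": TableProvider("Album", "Artist", "Title"),
    "Movie": TableProvider("Movie", "Director", "Title"),
})
service.begin()
service.add_item("Album", {"Artist": "Johann Sebastian Bach", "Title": "6 Partitas"})
service.delete_item("Movie", "Charlie Chaplin", "The Kid")
pending = service.pending()
```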

Create tables

Choose "Create Product Tables (Book, Album, Movie)" to start creating the three product tables. We use …

Begin a transaction

Choose "Begin Transaction" to start a new transaction. The ProductService function uses the Begin method of TransactScope to initialize the transaction.

Add transaction items

You can access …

Attribute extensions

This common extension class is used to convert entity objects to DynamoDB attribute values.

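Some remarks on transactions:

- Normalized data structures are the main driver of ACID requirements. With DynamoDB, many of these requirements are already met by storing an aggregate as a single item or a set of items. Each single-item operation is ACID, because no two operations can partially affect an item.

- Avoid using transactions to maintain normalized datasets on DynamoDB.

- Transactions are a good fit for committing changes across different items or for performing conditional batch inserts and updates.

When working with transactions, consider the following best practices:

- A transaction can span multiple tables, but no two actions in it can target the same item.

- Each transaction can contain up to 100 action requests.

- Each transaction is confined to a single AWS account and Region.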
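A conditional batch insert that respects the action limit might look like the Python sketch below. The function name is hypothetical. The `attribute_not_exists` condition on the partition key is a common way to make a Put fail if the item already exists, in which case the whole transaction is rejected.

```python
from typing import Any, Dict, List

MAX_ACTIONS = 100  # per-transaction limit stated above


def conditional_puts(table: str, partition_key: str,
                     items: List[Dict[str, Dict[str, Any]]]) -> List[Dict[str, Any]]:
    """Build insert-only Put actions; refuse batches that exceed the limit."""
    if len(items) > MAX_ACTIONS:
        # Splitting into several transactions would give up atomicity,
        # so this sketch rejects the batch instead of chunking it.
        raise ValueError(f"{len(items)} actions exceed the limit of {MAX_ACTIONS}")
    return [
        {"Put": {
            "TableName": table,
            "Item": item,
            "ConditionExpression": "attribute_not_exists(#pk)",
            "ExpressionAttributeNames": {"#pk": partition_key},
        }}
        for item in items
    ]


books = [
    {"Author": {"S": "Homer"}, "Title": {"S": "The Odyssey"}},
    {"Author": {"S": "Homer"}, "Title": {"S": "The Iliad"}},
]
actions = conditional_puts("Book", "Author", books)
```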

Conclusion

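In this post, we introduced a framework that helps developers extend their use of transactions in DynamoDB with an approach similar to transactions in SQL databases. It provides a generic, reusable wrapper around the DynamoDB transaction API, so the service layer can handle regular item operations without knowing the details of that API. This makes it possible to start a transaction at any time, and easier to do so when working with a NoSQL database such as DynamoDB.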

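The framework rests on two basic concepts: the transaction scope, which converts standard item operations into transaction items, and the extension class that converts entity objects into DynamoDB attribute values. To show how the framework is used, we built a sample application with the framework and a layered approach to manage three different tables.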

We wrote the sample code in C# using .NET Core and AWSSDK.DynamoDBv2. You can find it at …

About the authors

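Jeff Chen is a Principal Architect with AWS Professional Services. As a technical lead, he works with customers and partners on cloud migration and optimization programs. In his spare time, Jeff enjoys spending time with family and friends and exploring the mountains near Atlanta, Georgia.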

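Esteban Serna is a Senior DynamoDB Specialist SA. Esteban has worked with databases for the past 15 years, helping customers choose the right architecture for their needs. Fresh out of university, he began deploying the infrastructure needed to support contact centers in distributed locations. He fell in love with NoSQL databases when they were introduced and decided to focus on them, as centralized computing was no longer the norm. Today, Esteban focuses on helping customers use DynamoDB to design distributed, massive-scale applications that require single-digit millisecond latency. Some say he is an open book, and he loves sharing his knowledge with others.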
